Processing large volumes of data in real time is a common requirement, and Google Cloud Platform’s Pub/Sub is built for it. Pub/Sub is a managed messaging service that lets organizations ingest and distribute high-volume event streams without provisioning or operating their own message brokers. In this article, we take a closer look at how GCP Pub/Sub helps businesses get more out of their data, from its integration with other GCP services to its ability to scale with load.

1. Introduction to GCP Pub/Sub

Event streaming is an essential component of modern data processing pipelines. It allows businesses to handle large volumes of data in real-time, enabling timely insights and faster decision-making. One powerful and scalable event streaming solution provided by Google Cloud Platform (GCP) is Pub/Sub. In this article, we will explore the features and benefits of GCP Pub/Sub and delve into the process of setting up, publishing messages, subscribing to topics, managing topics and subscriptions, integrating with data processing pipelines, and monitoring and error handling.

1.1 What is GCP Pub/Sub?

GCP Pub/Sub is a globally distributed messaging service that enables developers to send and receive messages between independent applications. It follows the publish-subscribe pattern, where applications publish messages to topics, and other applications subscribe to those topics to receive the messages. This decoupling of senders and receivers allows for seamless integration and scalability, making Pub/Sub an ideal choice for handling event-driven workloads.

1.2 Key Features and Benefits

GCP Pub/Sub offers several key features and benefits that make it a reliable and efficient event streaming solution:

- Scalability and Reliability: Pub/Sub is designed to handle massive workloads and automatically scales based on the incoming message volume. It ensures reliability by replicating messages across multiple zones and providing at-least-once delivery guarantees.

- Real-time and Asynchronous Messaging: With Pub/Sub, messages can be sent and received in real-time, enabling instant data processing and analysis. The asynchronous nature of Pub/Sub allows applications to operate independently, maximizing efficiency and decoupling components.

- Global Availability: Pub/Sub is a globally distributed service, ensuring low-latency message delivery across regions. This feature is crucial for businesses with a global presence, as it enables real-time data synchronization and event handling.

- Ease of Use and Integration: Pub/Sub provides easy-to-use APIs, client libraries, and SDKs for various programming languages, simplifying integration with existing applications and systems. It seamlessly integrates with other GCP services, such as Cloud Functions and Dataflow, to create powerful data pipelines.

By leveraging these features, businesses can streamline their data processing workflows, handle high volumes of events, and build scalable and reliable applications.

2. Setting up GCP Pub/Sub

Before diving into the functionalities of GCP Pub/Sub, we need to set up a GCP project and enable the Pub/Sub API. Creating topics and subscriptions will also be essential to start publishing and subscribing to messages.

2.1 Creating a GCP Project

To begin with, we need to create a GCP project. This project will serve as the container for resources such as Pub/Sub topics and subscriptions. Within the GCP console, we can create a project, assign a unique name and project ID, and configure any necessary billing details associated with the project.

2.2 Enabling Pub/Sub API

After setting up the GCP project, we need to enable the Pub/Sub API. The API enables communication with the Pub/Sub service and provides the necessary functionalities for working with topics and subscriptions. Within the GCP console, we can navigate to the API Library, search for “Pub/Sub API,” and enable it for the project.

2.3 Creating Pub/Sub Topics and Subscriptions

With the project and Pub/Sub API enabled, we can now create topics and subscriptions. Topics serve as channels for publishing messages, while subscriptions allow applications to receive those messages. We can create a topic by specifying a unique name within the GCP console. Similarly, we can create subscriptions for topics and configure parameters such as acknowledgment deadline and message filtering.
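The same resources can also be created from code instead of the console. The following is a minimal sketch, assuming the `google-cloud-pubsub` Python client library is installed and application default credentials are configured. The function names and the 30-second acknowledgment deadline are illustrative choices, not values from this article.

```python
def topic_path(project_id: str, topic_id: str) -> str:
    """Return the fully qualified topic name that the Pub/Sub API expects."""
    return f"projects/{project_id}/topics/{topic_id}"


def subscription_path(project_id: str, subscription_id: str) -> str:
    """Return the fully qualified subscription name."""
    return f"projects/{project_id}/subscriptions/{subscription_id}"


def create_topic_and_subscription(project_id, topic_id, subscription_id):
    """Create a topic and a pull subscription attached to it."""
    # Imported lazily so the path helpers above work without the library.
    from google.cloud import pubsub_v1

    publisher = pubsub_v1.PublisherClient()
    subscriber = pubsub_v1.SubscriberClient()
    topic = topic_path(project_id, topic_id)
    subscription = subscription_path(project_id, subscription_id)

    publisher.create_topic(request={"name": topic})
    subscriber.create_subscription(
        request={
            "name": subscription,
            "topic": topic,
            "ack_deadline_seconds": 30,  # illustrative value
        }
    )
    return topic, subscription
```

Calling `create_topic_and_subscription("my-project", "orders", "orders-worker")` would create both resources in a hypothetical project named `my-project`.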

By following these steps, we can set up Pub/Sub in our GCP project and start utilizing its powerful event streaming capabilities.

3. Publishing Messages to Pub/Sub

Now that we have Pub/Sub set up, it’s time to explore how we can publish messages to topics. Understanding publisher clients, sending messages, and message delivery guarantees are crucial aspects of utilizing Pub/Sub effectively.

3.1 Understanding Publisher Clients

Publisher clients are applications or services responsible for sending messages to Pub/Sub topics. They can be developed using various programming languages and utilize Pub/Sub client libraries or APIs for interacting with the Pub/Sub service. These clients authenticate with Pub/Sub using service account credentials, ensuring secure and authorized message publishing.

3.2 Sending Messages to a Topic

To send messages to a Pub/Sub topic, the publisher client specifies the topic’s name and the message content. The payload is an opaque byte string, so structured data such as JSON or serialized objects must be encoded before publishing; optional key-value attributes can carry metadata alongside it. Upon publishing, Pub/Sub assigns each message a unique message ID and returns it to the publisher, which is useful for tracking and troubleshooting.
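As a sketch of the publishing step, the example below encodes a dictionary as JSON bytes and publishes it with the `google-cloud-pubsub` Python client. The helper names and the 30-second timeout are assumptions made for illustration; attribute values passed as keyword arguments must be strings.

```python
import json


def encode_event(event: dict) -> bytes:
    """Serialize an event to UTF-8 JSON, since Pub/Sub payloads are bytes."""
    return json.dumps(event, sort_keys=True).encode("utf-8")


def publish_event(project_id: str, topic_id: str, event: dict, **attributes: str) -> str:
    """Publish one event and return the message ID assigned by Pub/Sub."""
    # Imported lazily so encode_event can be used without the library.
    from google.cloud import pubsub_v1

    publisher = pubsub_v1.PublisherClient()
    topic = publisher.topic_path(project_id, topic_id)
    # publish() is asynchronous and returns a future; result() blocks
    # until the service has accepted the message.
    future = publisher.publish(topic, encode_event(event), **attributes)
    return future.result(timeout=30)
```

For example, `publish_event("my-project", "orders", {"id": 7}, source="checkout")` would publish one JSON message with a single attribute to a hypothetical `orders` topic.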

3.3 Message Delivery Guarantees

Pub/Sub is designed so that published messages reach their intended subscribers. By default it provides at-least-once delivery semantics, which means that every message is delivered to each subscription at least once. If a subscriber does not acknowledge a message before the acknowledgment deadline, Pub/Sub redelivers it. A message can therefore arrive more than once, so subscribers should process messages idempotently or deduplicate them to avoid unintended consequences.
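One common way to tolerate duplicates is to remember which message IDs have already been processed. The sketch below keeps that record in memory, which is an assumption made for brevity; a real deployment would more likely use a durable store shared by all subscriber instances. The class name is hypothetical.

```python
class IdempotentHandler:
    """Run a processing function at most once per message ID."""

    def __init__(self, process):
        self._process = process
        self._seen = set()  # in-memory only; lost when the process restarts

    def handle(self, message_id: str, payload: bytes) -> bool:
        """Process the payload unless this ID was handled before.

        Returns True if the payload was processed, False for a duplicate.
        """
        if message_id in self._seen:
            return False
        self._process(payload)
        # Record the ID only after processing succeeds, so a failure
        # leaves the message eligible for a retry.
        self._seen.add(message_id)
        return True
```

With this wrapper, a redelivered message is acknowledged without repeating its side effects.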

With the understanding of publisher clients, message sending, and delivery guarantees, we can confidently start publishing messages to Pub/Sub and feed our event-driven applications or data processing pipelines.

4. Subscribing to Pub/Sub Topics

Subscribing to topics is a crucial step in receiving messages and processing them in our applications. Configuring subscriber clients, receiving messages, and filtering messages by their attributes are essential aspects of working with Pub/Sub subscriptions.

4.1 Configuring Subscriber Clients

Subscriber clients are responsible for subscribing to Pub/Sub topics and receiving messages. These clients can be developed using various programming languages and utilize Pub/Sub client libraries or APIs for interaction. Similar to publisher clients, subscriber clients authenticate with Pub/Sub using service account credentials, ensuring secure and authorized message consumption.

4.2 Receiving Messages from Subscriptions

To receive messages from a Pub/Sub subscription, the subscriber client specifies the subscription’s name and handles incoming messages as they arrive. By default, Pub/Sub does not guarantee that messages are delivered in the order they were published. When order matters, publishers can attach an ordering key to messages and the subscription can enable message ordering, which preserves order among messages that share a key. Upon receiving a message, subscribers process the message content according to their application logic, perform any necessary actions, and acknowledge the message to mark it as processed.
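The receive, process, and acknowledge cycle can be sketched with the streaming pull API of the `google-cloud-pubsub` Python client. The function names and the 60-second listening window below are illustrative assumptions. The callback negatively acknowledges a message when processing raises, so that Pub/Sub redelivers it.

```python
from concurrent.futures import TimeoutError


def make_callback(process):
    """Wrap a processing function in an ack/nack callback."""

    def callback(message):
        try:
            process(message.data, dict(message.attributes))
        except Exception:
            message.nack()  # ask Pub/Sub to redeliver the message
        else:
            message.ack()  # mark the message as processed

    return callback


def listen(project_id, subscription_id, process, timeout=60.0):
    """Receive messages for `timeout` seconds, then shut down cleanly."""
    # Imported lazily so make_callback can be used without the library.
    from google.cloud import pubsub_v1

    subscriber = pubsub_v1.SubscriberClient()
    path = subscriber.subscription_path(project_id, subscription_id)
    streaming_pull = subscriber.subscribe(path, callback=make_callback(process))
    with subscriber:
        try:
            streaming_pull.result(timeout=timeout)
        except TimeoutError:
            streaming_pull.cancel()  # stop receiving new messages
            streaming_pull.result()  # wait for the shutdown to finish
```

The callback only relies on the `data`, `attributes`, `ack()`, and `nack()` members of the received message, so it can be exercised with a simple stand-in object in unit tests.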

4.3 Filtering Messages with Attributes

Pub/Sub allows subscriptions to filter messages by their attributes. Attributes are key-value pairs that the publisher attaches to each message (labels, by contrast, are metadata on topics and subscriptions). A filter is defined on the subscription when it is created, and Pub/Sub then delivers only the messages whose attributes match it, making message processing more efficient and targeted.
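As an illustration, a filter is a string expression over the key-value pairs attached to messages, which the API exposes as `attributes`. The sketch below builds an equality filter and passes it when creating a subscription with the `google-cloud-pubsub` Python client. The helper names are hypothetical, and the builder assumes simple keys and values that contain no quotes or special characters.

```python
def attribute_filter(required: dict) -> str:
    """Build a filter that matches messages carrying all given attributes."""
    clauses = [
        f'attributes.{key} = "{value}"'
        for key, value in sorted(required.items())
    ]
    return " AND ".join(clauses)


def create_filtered_subscription(project_id, topic_id, subscription_id, required):
    """Create a subscription that only receives matching messages."""
    # Imported lazily so attribute_filter can be used without the library.
    from google.cloud import pubsub_v1

    subscriber = pubsub_v1.SubscriberClient()
    topic = f"projects/{project_id}/topics/{topic_id}"
    path = subscriber.subscription_path(project_id, subscription_id)
    with subscriber:
        return subscriber.create_subscription(
            request={
                "name": path,
                "topic": topic,
                "filter": attribute_filter(required),
            }
        )
```

Because the filter is part of the subscription definition, a common pattern is to create one subscription per consumer, each with its own filter, on the same topic.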

By correctly configuring subscriber clients, receiving messages, and utilizing message filtering, we can effectively subscribe to Pub/Sub topics and handle incoming messages in our applications.

5. Managing Pub/Sub Topics and Subscriptions

Managing topics and subscriptions is an essential part of utilizing GCP Pub/Sub effectively. Tasks such as deleting topics and subscriptions, setting retention policies, and configuring acknowledgment deadlines help maintain a clean and efficiently running Pub/Sub environment.

5.1 Deleting Topics and Subscriptions

Over time, topics and subscriptions that are no longer needed may accumulate in our Pub/Sub environment. It’s important to regularly review and delete those unnecessary resources to optimize resource allocation and reduce costs. Pub/Sub provides APIs and command-line tools to programmatically delete topics and subscriptions, making the management process efficient and streamlined.

5.2 Setting Retention Policies

In some cases, we might need to retain messages within Pub/Sub topics and subscriptions for a specific duration. Pub/Sub allows us to set retention policies that define how long messages are kept. By default, a subscription retains unacknowledged messages for 7 days; retention can also be configured on a topic so that messages, including acknowledged ones, remain available for replay. This feature is particularly useful when dealing with compliance or auditing requirements, as it ensures that messages are available for a specified duration before being automatically discarded.

5.3 Configuring Acknowledgment Deadline

When subscribers receive messages from subscriptions, they need to acknowledge those messages within a certain timeframe. This timeframe is the acknowledgment deadline, which can be set per subscription from 10 seconds (the default) to 600 seconds. If a message isn’t acknowledged within that period, Pub/Sub assumes that delivery failed and redelivers the message. Configuring an appropriate acknowledgment deadline is crucial: too short a deadline causes unnecessary redeliveries of messages that are still being processed, while too long a deadline delays recovery when a subscriber fails.
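A small sketch of how the deadline might be validated before creating a subscription is shown below. The helper name is hypothetical; the bounds reflect the 10 to 600 second range that Pub/Sub accepts for a subscription's acknowledgment deadline, and the returned dictionary has the shape that `SubscriberClient.create_subscription(request=...)` accepts in the Python client.

```python
MIN_ACK_DEADLINE_SECONDS = 10
MAX_ACK_DEADLINE_SECONDS = 600


def subscription_request(name: str, topic: str, ack_deadline_seconds: int = 10) -> dict:
    """Build a create-subscription request with a validated ack deadline."""
    if not MIN_ACK_DEADLINE_SECONDS <= ack_deadline_seconds <= MAX_ACK_DEADLINE_SECONDS:
        raise ValueError(
            f"ack_deadline_seconds must be between {MIN_ACK_DEADLINE_SECONDS} "
            f"and {MAX_ACK_DEADLINE_SECONDS}, got {ack_deadline_seconds}"
        )
    return {
        "name": name,
        "topic": topic,
        "ack_deadline_seconds": ack_deadline_seconds,
    }
```

A reasonable starting point is a deadline somewhat longer than the slowest expected processing time for a single message.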

By actively managing Pub/Sub topics and subscriptions, setting retention policies, and configuring acknowledgment deadlines, we can enhance the overall efficiency and reliability of our event streaming workflow.

6. Integrating Pub/Sub with Data Processing Pipelines

One of the powerful aspects of GCP Pub/Sub is its seamless integration with various data processing pipelines and services. Let’s explore some key integration scenarios, including processing real-time data, connecting Pub/Sub with Cloud Functions, and integrating with Dataflow.

6.1 Processing Real-time Data

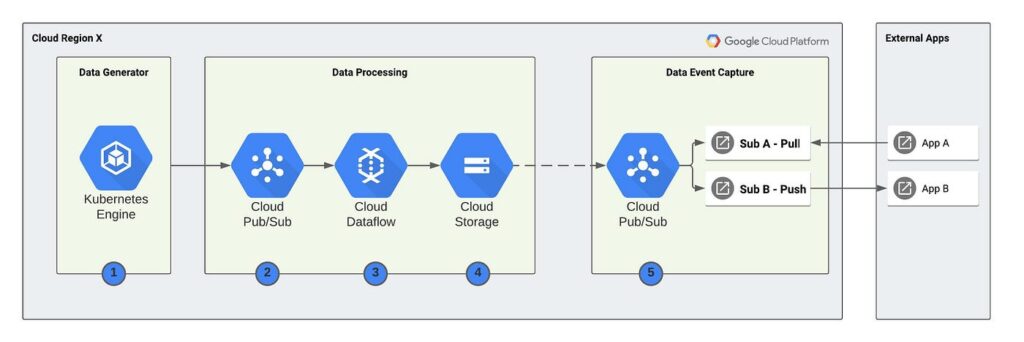

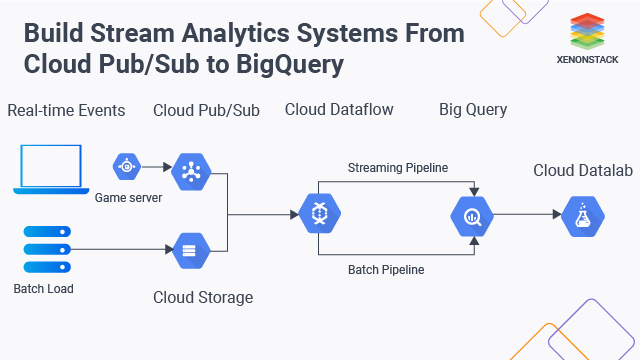

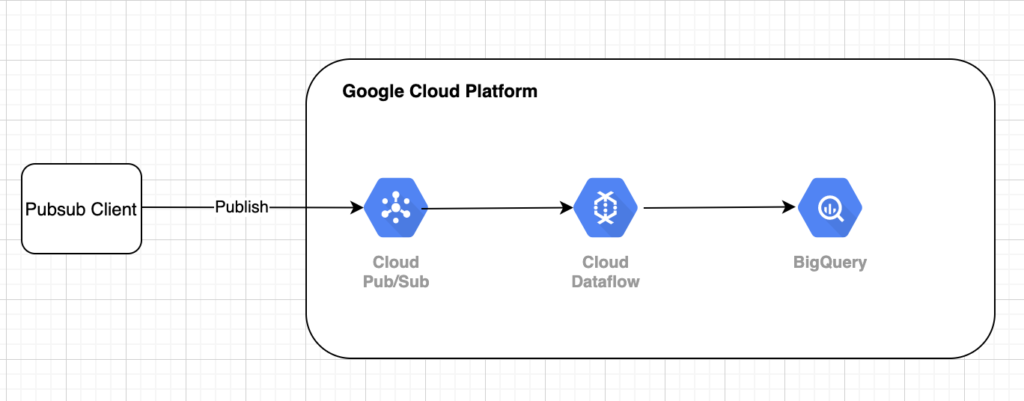

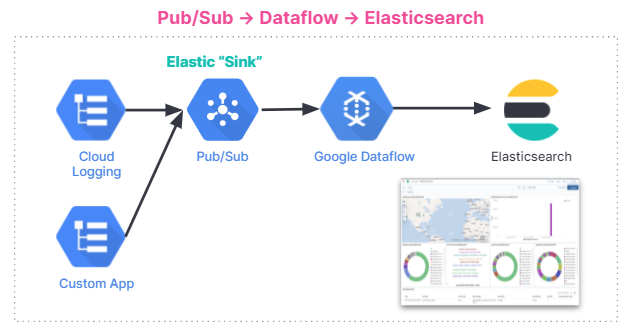

Pub/Sub’s real-time messaging capability makes it an ideal choice for processing data as it arrives. By combining Pub/Sub with services like Cloud Dataflow or other streaming frameworks, we can build real-time data processing pipelines capable of handling continuous data streams. These pipelines enable us to derive insights, trigger actions, or update dashboards as events occur, ensuring timely decision-making.

6.2 Connecting Pub/Sub with Cloud Functions

Cloud Functions provide serverless compute capabilities that can be triggered by Pub/Sub messages, helping automate workflows or perform specific tasks based on events. By connecting Pub/Sub with Cloud Functions, we can easily build event-driven architectures, where tasks such as image processing, data transformation, or third-party integrations are triggered in response to incoming messages. This integration greatly simplifies the development and deployment of event-driven microservices.
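As a rough illustration, a Pub/Sub-triggered background function in the first-generation Python runtime receives the message as a dictionary whose `data` field is base64-encoded. The sketch below assumes a JSON payload; the function name is hypothetical, and the return value is included only to make the function easy to test, since Cloud Functions ignores it for background functions.

```python
import base64
import json


def handle_pubsub_event(event, context):
    """Decode a Pub/Sub message delivered to a background function."""
    # The payload arrives base64-encoded in event["data"].
    payload = base64.b64decode(event["data"]).decode("utf-8")
    record = json.loads(payload)
    attributes = event.get("attributes") or {}
    print(f"Received record {record} with attributes {attributes}")
    return record
```

If the function raises an exception, the invocation is reported as failed; whether the message is retried depends on the retry setting chosen when the function is deployed.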

6.3 Pub/Sub and Dataflow Integration

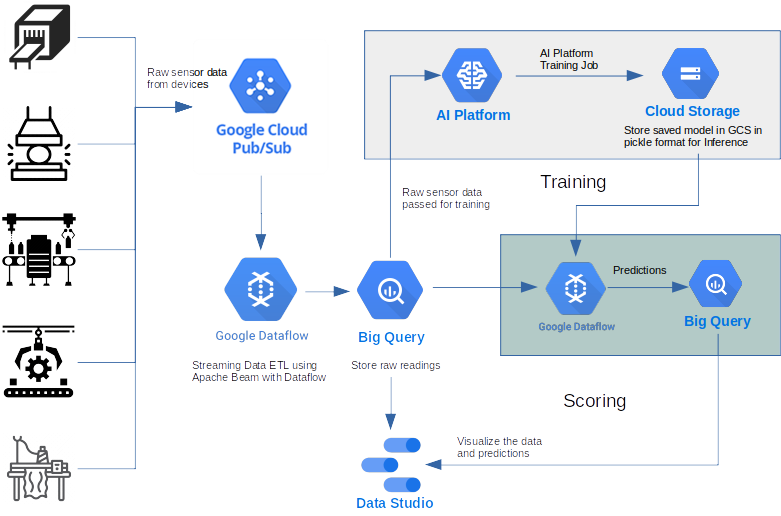

Dataflow is a serverless, fully managed platform for executing data processing workflows. By integrating Pub/Sub with Dataflow, we can process large volumes of data in real-time or batch mode, apply complex transformations, and perform aggregations or analytics. The seamless integration allows Pub/Sub to act as a data source or sink for Dataflow pipelines, enabling scalable and efficient data processing.
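Dataflow pipelines are typically written with Apache Beam. The following is a minimal streaming sketch, assuming the `apache-beam[gcp]` package is installed. It reads from a subscription, keeps events above a threshold, and writes them to another topic. The field name `amount`, the threshold of 100, and the function names are invented for illustration.

```python
import json


def parse_event(raw: bytes) -> dict:
    """Decode a Pub/Sub payload that is assumed to be UTF-8 JSON."""
    return json.loads(raw.decode("utf-8"))


def is_large(event: dict) -> bool:
    """Keep events whose hypothetical 'amount' field is at least 100."""
    return event.get("amount", 0) >= 100


def run(subscription: str, output_topic: str, pipeline_args=None):
    """Read from a subscription, filter, and write to another topic."""
    # Imported lazily so the helpers above work without Apache Beam.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    # Pub/Sub is an unbounded source, so the pipeline runs in streaming mode.
    options = PipelineOptions(pipeline_args, streaming=True)
    with beam.Pipeline(options=options) as pipeline:
        (
            pipeline
            | "Read" >> beam.io.ReadFromPubSub(subscription=subscription)
            | "Parse" >> beam.Map(parse_event)
            | "KeepLarge" >> beam.Filter(is_large)
            | "Encode" >> beam.Map(lambda e: json.dumps(e).encode("utf-8"))
            | "Write" >> beam.io.WriteToPubSub(topic=output_topic)
        )
```

Both arguments are fully qualified resource names, for example `projects/my-project/subscriptions/orders-worker` and `projects/my-project/topics/large-orders` in a hypothetical project.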

By leveraging the integration capabilities of Pub/Sub with other GCP services, we can build sophisticated data processing pipelines that handle real-time data, trigger actions, and perform complex transformations efficiently.

7. Monitoring and Error Handling in Pub/Sub

Monitoring message flow and latency and handling errors are crucial to the reliability and performance of our Pub/Sub workflows. Let’s explore some key aspects of monitoring Pub/Sub message flow, handling errors, and its integration with Cloud Monitoring and Cloud Logging (formerly Stackdriver).

7.1 Monitoring Message Flow and Latency

Pub/Sub provides monitoring features that allow us to track the flow of messages, monitor message delivery latency, and gain insights into the health of our event streaming workflows. We can use the metrics and logs that Pub/Sub exports, such as the number of undelivered messages on a subscription and the age of its oldest unacknowledged message, to analyze message rates, identify bottlenecks, and optimize overall system performance. A growing backlog or a rising oldest-message age usually indicates that subscribers are not keeping up with publishers.

7.2 Handling Errors and Dead Letter Queues

While Pub/Sub ensures reliable message delivery, errors can occasionally occur during the processing of messages. It’s important to have mechanisms in place to handle such errors gracefully and prevent data loss. Pub/Sub supports dead letter queues in the form of dead-letter topics: when a subscription has a dead-letter policy, a message that has not been acknowledged after a configured maximum number of delivery attempts is forwarded to a separate topic, where it can be inspected manually or analyzed for errors. By effectively handling errors and utilizing dead-letter topics, we can keep a single failing message from being redelivered indefinitely and improve the resilience and fault tolerance of our event streaming workflows.
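In the Pub/Sub API, a dead letter queue is configured as a dead-letter policy on the subscription, naming a dead-letter topic and a maximum number of delivery attempts. The sketch below builds such a request as a plain dictionary in the shape the Python client's `create_subscription(request=...)` accepts. The helper name is hypothetical, and the 5 to 100 bound reflects the range Pub/Sub allows for `max_delivery_attempts`.

```python
def dead_letter_subscription_request(
    name: str,
    topic: str,
    dead_letter_topic: str,
    max_delivery_attempts: int = 5,
) -> dict:
    """Build a create-subscription request with a dead-letter policy."""
    if not 5 <= max_delivery_attempts <= 100:
        raise ValueError("max_delivery_attempts must be between 5 and 100")
    return {
        "name": name,
        "topic": topic,
        "dead_letter_policy": {
            # Messages are forwarded here after the attempts are exhausted.
            "dead_letter_topic": dead_letter_topic,
            "max_delivery_attempts": max_delivery_attempts,
        },
    }
```

For forwarding to work, the Pub/Sub service account of the project also needs permission to publish to the dead-letter topic and to subscribe to the source subscription. Attaching a separate subscription to the dead-letter topic keeps the forwarded messages available for inspection.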

7.3 Pub/Sub’s Integration with Cloud Monitoring and Cloud Logging

Pub/Sub integrates with Cloud Monitoring and Cloud Logging, the Google Cloud observability tools formerly known as Stackdriver. Together they provide a comprehensive set of tools for monitoring and troubleshooting Pub/Sub, including real-time dashboards, alerts, and log analysis. By leveraging these capabilities, we can gain deep insights into the behavior of our Pub/Sub workflows, detect and resolve potential issues, and ensure the optimal performance of our event streaming environment.

By actively monitoring message flow, handling errors, and utilizing the integration with Cloud Monitoring and Cloud Logging (formerly Stackdriver), we can maintain a robust and reliable Pub/Sub infrastructure for our data processing needs.

8. Scaling and Performance Considerations

As data volumes and processing requirements grow, it’s essential to understand the scalability and performance considerations of Pub/Sub. Let’s explore some key aspects, including Pub/Sub’s scalability and throughput, optimizing message delivery, and load testing and performance tuning.

8.1 Pub/Sub’s Scalability and Throughput

Pub/Sub is designed to handle massive workloads and scales automatically based on the incoming message volume. It scales horizontally, allowing the system to absorb high message rates, bursts, or spikes in traffic without manual capacity planning. Because the service itself scales, keeping up with current and future processing requirements is mostly a matter of scaling our own publishers and subscribers.

8.2 Optimizing Message Delivery

To optimize message delivery, we can increase the number of subscriber clients pulling from a subscription and tune the streaming pull mechanism, for example through its flow-control settings. By appropriately sizing our subscriber fleet and pull configurations, we can ensure efficient message retrieval and processing. Additionally, batching messages on the publisher side, and compressing payloads where appropriate, can further optimize delivery and reduce network overhead.
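Publisher-side batching and subscriber-side flow control are both exposed as settings in the `google-cloud-pubsub` Python client. The sketch below shows one way to wire them up; the specific numbers are illustrative starting points rather than recommendations from this article, and the helper names are hypothetical.

```python
def batch_settings_kwargs(max_messages=500, max_bytes=1_000_000, max_latency=0.05):
    """Validate and return keyword arguments for publisher batching.

    A batch is sent as soon as any one of the three limits is reached.
    """
    if max_messages <= 0 or max_bytes <= 0 or max_latency <= 0:
        raise ValueError("batch limits must be positive")
    return {
        "max_messages": max_messages,  # messages per batch
        "max_bytes": max_bytes,        # total payload size per batch
        "max_latency": max_latency,    # seconds to wait before sending
    }


def make_publisher(**limits):
    """Create a publisher client that batches outgoing messages."""
    # Imported lazily so batch_settings_kwargs works without the library.
    from google.cloud import pubsub_v1

    settings = pubsub_v1.types.BatchSettings(**batch_settings_kwargs(**limits))
    return pubsub_v1.PublisherClient(batch_settings=settings)


def make_flow_control(max_messages=200):
    """Limit how many unacknowledged messages a subscriber holds at once."""
    from google.cloud import pubsub_v1

    return pubsub_v1.types.FlowControl(max_messages=max_messages)
```

The flow-control object is passed to `SubscriberClient.subscribe(..., flow_control=...)`. Larger batches tend to improve throughput at the cost of added publish latency, so the limits are worth revisiting during load testing.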

8.3 Load Testing and Performance Tuning

To ensure that our Pub/Sub workflows can handle the expected workload, it’s crucial to perform load testing and performance tuning. Load testing helps determine the limits of our system, identify potential bottlenecks, and validate its scalability. Performance tuning involves adjusting parameters such as subscription configurations, message processing pipelines, or resource allocation to optimize overall system performance. By employing load testing and performance tuning techniques, we can maximize the efficiency and throughput of our Pub/Sub environment.

By considering scalability, optimizing message delivery, and performing load testing and performance tuning, we can build a highly efficient and scalable event streaming infrastructure using Pub/Sub.

9. Security and Access Control in Pub/Sub

Security is of paramount importance when working with sensitive data and event streams. Let’s explore some key considerations in securing Pub/Sub, including authentication and authorization, IAM roles and permissions, and encrypting messages in transit and at rest.

9.1 Authentication and Authorization

Pub/Sub provides various mechanisms to authenticate and authorize access to its resources. It supports Google Cloud’s Identity and Access Management (IAM), allowing us to define fine-grained access control policies for topics and subscriptions. We can use service account credentials to authenticate applications or services interacting with Pub/Sub, ensuring secure access and preventing unauthorized access to event streams.

9.2 IAM Roles and Permissions

IAM roles and permissions in Pub/Sub provide granular control over access to topics and subscriptions. We can assign predefined roles such as “Pub/Sub Admin” (roles/pubsub.admin), “Pub/Sub Publisher” (roles/pubsub.publisher), or “Pub/Sub Subscriber” (roles/pubsub.subscriber) to control administrative actions, message publishing, and message consumption. Additionally, we can grant the read-only “Pub/Sub Viewer” role (roles/pubsub.viewer) to allow limited access for monitoring or auditing purposes. By carefully assigning IAM roles and permissions, we can ensure that only authorized users and service accounts can publish or subscribe to event streams.
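Roles can be granted on an individual topic rather than on the whole project. The following sketch, assuming the `google-cloud-pubsub` Python client and sufficient permissions to edit the topic's IAM policy, adds a service account as a publisher. The helper names and the example email address are hypothetical.

```python
PUBLISHER_ROLE = "roles/pubsub.publisher"


def service_account_member(email: str) -> str:
    """Format a service account email as an IAM member string."""
    return f"serviceAccount:{email}"


def grant_publisher(project_id: str, topic_id: str, email: str):
    """Allow a service account to publish to a single topic."""
    # Imported lazily so the helper above works without the library.
    from google.cloud import pubsub_v1

    publisher = pubsub_v1.PublisherClient()
    topic = publisher.topic_path(project_id, topic_id)
    # Read the current policy, add one binding, and write it back.
    policy = publisher.get_iam_policy(request={"resource": topic})
    policy.bindings.add(
        role=PUBLISHER_ROLE,
        members=[service_account_member(email)],
    )
    return publisher.set_iam_policy(request={"resource": topic, "policy": policy})
```

A call such as `grant_publisher("my-project", "orders", "checkout@my-project.iam.gserviceaccount.com")` would scope the permission to that one topic, which follows the principle of least privilege.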

9.3 Encrypting Messages in Transit and at Rest

To protect data confidentiality, Pub/Sub encrypts messages both in transit and at rest. In transit, messages are encrypted by default as part of Google’s infrastructure security. At rest, messages are encrypted by default with Google-managed keys, and we can instead configure customer-managed encryption keys in Cloud KMS (Key Management Service) on a topic when we need control over the keys. These protections help prevent unauthorized access to event data and support various security and compliance requirements. If the payload must remain unreadable to the service itself, the application needs to encrypt it before publishing.

By implementing robust authentication and authorization mechanisms, defining appropriate IAM roles and permissions, and enabling encryption, we can ensure the security and integrity of our event streaming workflows using Pub/Sub.

10. Use Cases and Success Stories

GCP Pub/Sub finds application in various use cases, enabling businesses to streamline their data processing and event-driven workloads. Let’s explore some prominent use cases and success stories of Pub/Sub.

10.1 Real-time Analytics and Stream Processing

Pub/Sub’s ability to handle real-time data streams makes it a powerful tool for real-time analytics and stream processing. Businesses can ingest high volumes of continuously arriving data, process it in real-time, and generate insights for immediate action. Use cases such as fraud detection, recommendation engines, or anomaly detection benefit from Pub/Sub’s real-time messaging and scalability, allowing businesses to make timely decisions based on up-to-date information.

10.2 Internet of Things (IoT) Data Ingestion

With the rise of the Internet of Things (IoT), the ability to handle large volumes of data from connected devices becomes crucial. Pub/Sub’s scalability and global availability make it an ideal choice for ingesting IoT data at scale. Businesses can easily collect sensor data, telemetry information, or device events from IoT devices globally and process it in real-time or batch mode. Pub/Sub’s integration with other GCP services such as Dataflow or BigQuery enables further analytics and insights on IoT data.

10.3 Event-driven Microservices Architecture

Event-driven microservices architectures often require reliable and scalable event streaming solutions. Pub/Sub serves as a backbone for such architectures, enabling independent microservices to communicate asynchronously without tightly coupling their activities. With Pub/Sub, microservices can exchange events, trigger actions, and maintain high scalability and fault tolerance. The decoupling of components provided by Pub/Sub simplifies development and enables agile, scalable, and event-driven microservices architectures.

These use cases demonstrate the versatility of Pub/Sub across a range of event-driven workloads, from analytics to IoT ingestion to microservice communication.

In conclusion, GCP Pub/Sub is a robust and scalable event streaming service that enables businesses to handle high volumes of data in real-time. This article walked through setting up Pub/Sub, publishing and subscribing to messages, managing topics and subscriptions, integrating with data processing pipelines, monitoring and error handling, scaling and performance, and security and access control, and closed with common use cases. With its ease of use, global availability, and integration with other GCP services, Pub/Sub gives businesses a solid foundation for streamlining their data processing workflows and acting on events as they occur.