Processing large volumes of data usually means provisioning clusters, tuning them, and keeping them healthy. Google Cloud Platform’s (GCP) Dataflow removes much of that work: you describe a pipeline, and the service provisions workers, distributes the processing, and scales the job for you. In this article, we will explore how GCP Dataflow handles both batch and stream processing, how to set up, monitor, and tune jobs, how it integrates with other Google Cloud services, and what to consider for migration and cost. So, let’s dive in.

Overview of GCP Dataflow

What is GCP Dataflow?

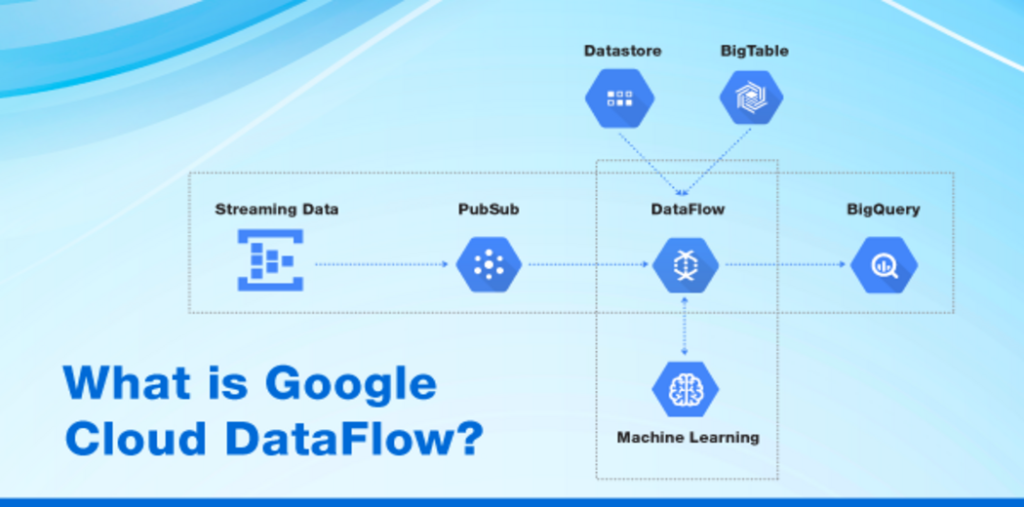

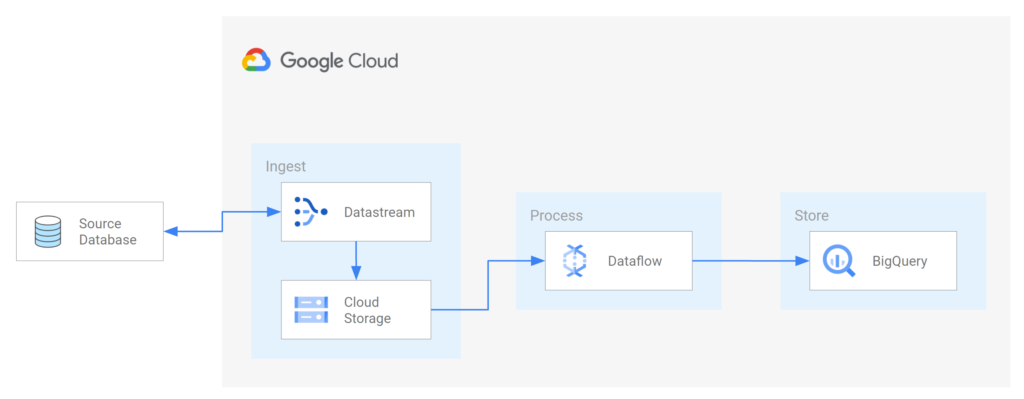

GCP Dataflow is a fully managed service on the Google Cloud Platform (GCP) for processing large-scale data in a distributed, parallel manner. It provides a serverless, autoscaling environment: the service provisions and manages the worker machines, so users can focus on building data pipelines rather than managing infrastructure.

How does GCP Dataflow work?

GCP Dataflow runs pipelines written with the Apache Beam SDK, distributing the work in parallel across multiple machines or worker nodes. A pipeline is a series of transformations chained together, each of which takes one or more collections of data elements (a PCollection in Beam terms) as input and produces new collections as output. Because Beam uses the same model for bounded and unbounded data, these transformations can be applied to both batch and streaming data.
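To make the idea of parallel processing over chained transformations concrete, here is a minimal sketch in plain Python. It is not the Apache Beam API: the function names, the bundle size, and the thread pool standing in for worker nodes are all assumptions chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_bundles(elements, bundle_size):
    """Split a collection into bundles; each bundle is one unit of work."""
    return [elements[i:i + bundle_size] for i in range(0, len(elements), bundle_size)]

def process_bundle(bundle):
    """Apply a chain of element-wise transformations to one bundle."""
    parsed = (int(x) for x in bundle)           # map: parse strings to integers
    evens = (x for x in parsed if x % 2 == 0)   # filter: keep even values
    return [x * x for x in evens]               # map: square each value

def run_pipeline(elements, bundle_size=3, workers=4):
    """Process bundles concurrently, then merge the per-bundle outputs."""
    bundles = split_into_bundles(elements, bundle_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(process_bundle, bundles)  # map() keeps bundle order
        return [value for bundle_result in results for value in bundle_result]

print(run_pipeline(["1", "2", "3", "4", "5", "6", "7", "8"]))  # [4, 16, 36, 64]
```

Because every transformation here works on one element at a time, the bundles can be processed independently, which is what lets a distributed runner spread them across machines.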

Benefits of using GCP Dataflow

Using GCP Dataflow offers several benefits for data processing tasks. Firstly, it is fully managed: provisioning, infrastructure management, and scaling are handled by the service, which simplifies the development and maintenance of data processing pipelines. Secondly, it is fault tolerant: failed work is retried automatically, so a job can usually recover from transient worker failures without manual intervention. Thirdly, autoscaling adjusts the number of workers to the workload, which improves resource utilization and helps control cost. Together, these make GCP Dataflow a scalable option for processing large datasets.

Setting Up GCP Dataflow

Creating a Dataflow job

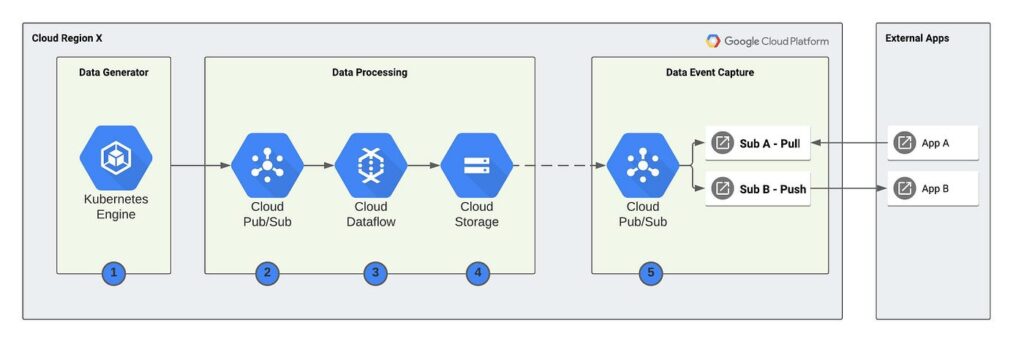

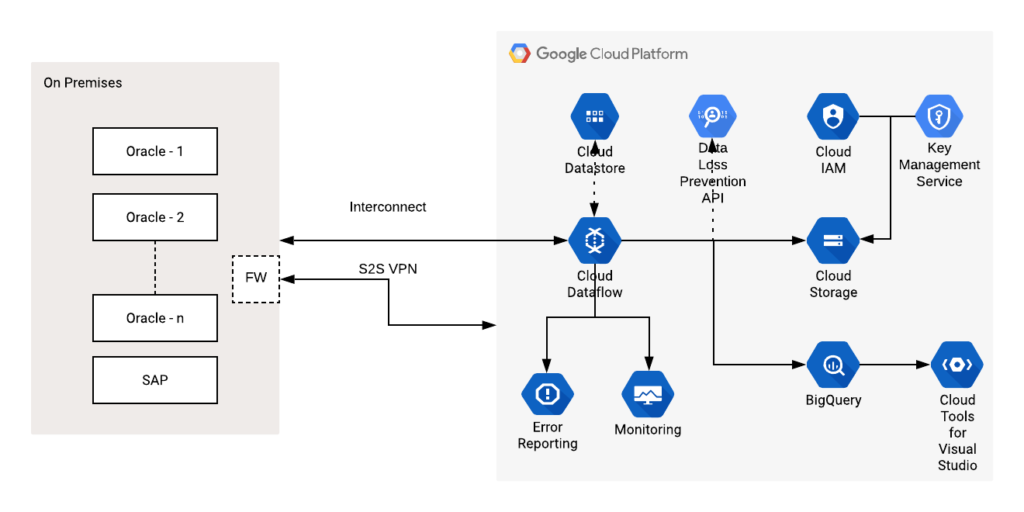

To set up GCP Dataflow, the first step is to create a Dataflow job. This involves defining the sources the pipeline reads from and the sinks it writes to. GCP Dataflow supports a wide range of them, including messaging systems like Pub/Sub, storage systems like Google Cloud Storage, and data warehouses like BigQuery. The right choice depends on the specific requirements of the data processing task.

Choosing appropriate data sources

When setting up a Dataflow job, it is essential to choose the right data sources that align with the requirements of the pipeline. For example, if the pipeline needs to process real-time data streams, integrating with Pub/Sub or other messaging systems would be suitable. On the other hand, if the task involves batch processing of data stored in a cloud storage system, integrating with Google Cloud Storage would be a better choice. Dataflow supports various data sources, and selecting the most appropriate one ensures efficient processing of data.

Configuring Dataflow pipelines

Configuring Dataflow pipelines involves defining the data transformations and operations that need to be performed on the input data. The Apache Beam SDK provides a rich set of transformations for common data processing operations such as filtering, aggregating, and joining data. These transformations can be chained together to create complex data processing workflows. Additionally, Dataflow supports windowing, which groups elements by timestamp into windows (for example fixed, sliding, or session windows) so that aggregations can be computed per window. Configuring the pipeline means choosing these transformations and setting their parameters, such as window sizes, to achieve the desired data processing goals.
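As a rough illustration of chaining a filter, an aggregation, and a join, the sketch below uses plain Python on a small in-memory dataset. The records, field names, and helper functions are hypothetical; in a Beam pipeline the same steps would typically be expressed with transforms such as `Filter`, `CombinePerKey`, and `CoGroupByKey`.

```python
from collections import defaultdict

# Hypothetical sample data standing in for two input collections.
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 30.0, "status": "paid"},
    {"order_id": 2, "customer_id": "c2", "amount": 12.5, "status": "cancelled"},
    {"order_id": 3, "customer_id": "c1", "amount": 20.0, "status": "paid"},
    {"order_id": 4, "customer_id": "c3", "amount": 7.5, "status": "paid"},
]
customers = {"c1": "Ada", "c2": "Grace", "c3": "Linus"}

def paid_only(records):
    """Filter: keep only orders that were paid."""
    return [r for r in records if r["status"] == "paid"]

def total_per_customer(records):
    """Aggregate: sum order amounts per customer id."""
    totals = defaultdict(float)
    for r in records:
        totals[r["customer_id"]] += r["amount"]
    return dict(totals)

def join_names(totals, names):
    """Join: attach the customer name to each total (inner join on id)."""
    return {names[cid]: total for cid, total in totals.items() if cid in names}

result = join_names(total_per_customer(paid_only(orders)), customers)
print(result)  # {'Ada': 50.0, 'Linus': 7.5}
```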

Dataflow Transformations and Operations

Understanding Dataflow transformations

Dataflow transformations are the building blocks of data processing pipelines. They define how data is manipulated and transformed as it flows through the pipeline. Transformations can range from simple operations like filtering or mapping data to more complex tasks like grouping and aggregating data. The Apache Beam SDK provides a rich set of transformations that can be applied to both bounded (batch) and unbounded (streaming) datasets. Understanding the available transformations and their usage is essential for designing efficient data processing pipelines.

Applying transformations to data streams

One of the key advantages of GCP Dataflow is its ability to handle both batch and streaming data. Applying transformations to streaming data involves processing data as it arrives in real-time. This enables organizations to perform real-time analytics and decision-making based on up-to-date information. Transformations on streaming data are usually applied in a windowed manner, where elements are grouped into windows by their timestamps and each window is processed as a finite unit. This allows for efficient handling of time-based aggregations and analytics on streaming data.

Working with windowing

Windowing in GCP Dataflow divides a data stream into finite, logical segments based on element timestamps so that computations can be performed on each segment. It is particularly useful for streaming data, where the input never ends and an aggregation needs a boundary. Apache Beam provides fixed (tumbling), sliding, and session windows, plus a single global window; emitting results after a fixed number of elements is handled by triggers rather than by the window itself. Choosing a windowing strategy that aligns with business requirements can significantly enhance the effectiveness of data processing pipelines.
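The arithmetic behind time-based windows is simple enough to sketch in plain Python. The helper names below are hypothetical and timestamps are plain integers in seconds; the sketch only shows how an element timestamp maps to a fixed window, and to the several overlapping sliding windows that contain it.

```python
from collections import Counter

def fixed_window_start(timestamp, size):
    """Start of the fixed window [start, start + size) that contains timestamp."""
    return timestamp - (timestamp % size)

def sliding_window_starts(timestamp, size, period):
    """Starts of every sliding window [start, start + size) containing timestamp."""
    start = timestamp - (timestamp % period)  # latest window that has begun
    starts = []
    while start > timestamp - size:
        starts.append(start)
        start -= period
    return sorted(starts)

def count_per_fixed_window(events, size):
    """Count (timestamp, value) events per fixed window, keyed by window start."""
    return dict(Counter(fixed_window_start(ts, size) for ts, _ in events))

events = [(5, "a"), (42, "b"), (61, "c"), (119, "d"), (120, "e")]
print(count_per_fixed_window(events, size=60))        # {0: 2, 60: 2, 120: 1}
print(sliding_window_starts(65, size=60, period=30))  # [30, 60]
```

With 60-second sliding windows that start every 30 seconds, each element belongs to two windows, which is why sliding windows produce more output than fixed windows for the same input.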

Understanding Dataflow operations

Dataflow operations define how the transformed data is processed and distributed across the worker nodes in the processing cluster. These operations include tasks like shuffling data between workers, grouping data based on keys, and performing aggregations. It is essential to understand how Dataflow operations work and their impact on the performance and scalability of the processing job. By optimizing and tuning these operations, it is possible to achieve better utilization of resources and improve the overall performance of the data processing pipeline.
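Shuffling by key can be pictured as hash partitioning: every value for a given key is routed to the same worker so that it can be grouped there. The sketch below is a simplified model in plain Python, not a description of how Dataflow implements its shuffle; the function names and the use of CRC32 as a stable hash are assumptions.

```python
import zlib

def worker_for_key(key, num_workers):
    """Pick a worker with a stable hash, so a key always lands on the same worker."""
    return zlib.crc32(key.encode("utf-8")) % num_workers

def shuffle(pairs, num_workers):
    """Route (key, value) pairs to workers and group values by key on each one."""
    partitions = [dict() for _ in range(num_workers)]
    for key, value in pairs:
        partition = partitions[worker_for_key(key, num_workers)]
        partition.setdefault(key, []).append(value)
    return partitions

pairs = [("a", 1), ("b", 2), ("a", 3), ("c", 4)]
for index, partition in enumerate(shuffle(pairs, num_workers=3)):
    print(index, partition)
```

One consequence of this model is that a single very frequent key sends all of its values to one worker, which is a common cause of slow grouping steps.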

Data aggregations and groupings

Dataflow allows for efficient aggregations and groupings of data streams, both in batch and streaming mode. Aggregations involve summarizing data based on specific criteria, such as calculating the average, sum, or count of values. Groupings, on the other hand, involve organizing data based on common attributes or keys. By performing aggregations and groupings, valuable insights can be derived from the data, enabling organizations to make data-driven decisions. Properly configuring and utilizing data aggregations and groupings can lead to more efficient data processing and improved analysis capabilities.
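A per-key average is a useful example because it cannot be computed by averaging partial averages; each worker has to keep a running (sum, count) pair that can be merged later. The plain-Python sketch below borrows the method names of Beam's `CombineFn` interface (`create_accumulator`, `add_input`, `merge_accumulators`, `extract_output`), but the class and the `combine_per_key` helper are illustrative stand-ins, not Beam code.

```python
class MeanCombiner:
    """Computes a mean from mergeable (total, count) accumulators."""

    def create_accumulator(self):
        return (0.0, 0)

    def add_input(self, accumulator, value):
        total, count = accumulator
        return (total + value, count + 1)

    def merge_accumulators(self, accumulators):
        total, count = 0.0, 0
        for partial_total, partial_count in accumulators:
            total += partial_total
            count += partial_count
        return (total, count)

    def extract_output(self, accumulator):
        total, count = accumulator
        return total / count if count else float("nan")

def combine_per_key(pairs, combiner):
    """Group (key, value) pairs by key and reduce each group with the combiner."""
    accumulators = {}
    for key, value in pairs:
        current = accumulators.get(key, combiner.create_accumulator())
        accumulators[key] = combiner.add_input(current, value)
    return {key: combiner.extract_output(acc) for key, acc in accumulators.items()}

print(combine_per_key([("x", 2), ("x", 4), ("y", 10)], MeanCombiner()))
# {'x': 3.0, 'y': 10.0}
```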

Monitoring and Diagnosing Dataflow Jobs

Monitoring job progress

Monitoring the progress of a Dataflow job is crucial to ensure that the pipeline is running smoothly and efficiently. GCP Dataflow provides a web-based monitoring interface in the Google Cloud console that allows you to track the progress of your jobs. It shows the job’s state, its execution graph, processing metrics, and job logs in near real time. By monitoring the job progress, you can identify any bottlenecks, errors, or performance issues and take appropriate actions to resolve them.

Viewing job metrics and logs

GCP Dataflow provides extensive metrics and logs for each job, allowing for in-depth analysis and troubleshooting. These metrics include information about data processing throughput, latency, and resource utilization. Logs provide detailed information about the execution of the job, including any errors or warnings encountered. By analyzing these metrics and logs, you can gain insights into the performance and efficiency of your data processing pipelines and identify any areas for improvement.
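When metrics are exported for offline analysis, two numbers are often enough for a first look: throughput and a latency percentile. The sketch below assumes you already have an element count, a duration, and a list of per-element latencies in milliseconds; the sample values and helper names are made up for illustration.

```python
import math

def throughput(element_count, seconds):
    """Average number of elements processed per second."""
    return element_count / seconds

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

latencies_ms = [120, 80, 95, 400, 110, 105, 90, 100, 130, 85]  # hypothetical sample
print(throughput(6000, 120))         # 50.0
print(percentile(latencies_ms, 50))  # 100
print(percentile(latencies_ms, 95))  # 400
```

The gap between the median and the 95th percentile in this sample shows why an average alone can hide a slow tail.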

Diagnosing and troubleshooting issues

In the event of any issues or errors during the execution of a Dataflow job, it is crucial to diagnose and troubleshoot the problem promptly. GCP Dataflow offers various tools and capabilities to aid in this process. The monitoring interface provides detailed information about the job’s state and execution history, which can help identify the root cause of the issue. Additionally, the worker logs and error messages usually show which step failed and why. By leveraging these diagnostic tools, you can resolve issues faster and keep your data processing pipelines running efficiently.

Handling Fault Tolerance and Scaling

Ensuring fault tolerance in Dataflow jobs

Fault tolerance is a critical aspect of data processing, especially when dealing with large datasets and complex pipelines. GCP Dataflow provides built-in mechanisms for it: when a worker fails or an element raises an error, the service retries the affected work, reassigning it to another worker if necessary. Streaming jobs additionally checkpoint their state, so that processing can resume after a failure rather than start over. Errors that recur on every retry, such as a malformed record that always raises an exception, are not fixed by retrying and still need to be handled in pipeline code. With these mechanisms and defensive pipeline logic, your data processing pipelines can be resilient and able to recover from failures.
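The retry behaviour can be sketched in a few lines of plain Python. This is a toy model, not Dataflow's implementation: the function names are hypothetical, and the default of four attempts is an assumption that should be checked against the current Dataflow documentation for your job type.

```python
def run_with_retries(process, bundle, max_attempts=4):
    """Run process(bundle), retrying on failure; return (output, attempts used)."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return process(bundle), attempt
        except Exception as error:  # a real system would log the failure here
            last_error = error
    raise RuntimeError(f"bundle failed after {max_attempts} attempts") from last_error

def make_flaky(failures):
    """Build a processor that fails a given number of times, then succeeds."""
    state = {"remaining": failures}

    def process(bundle):
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise OSError("simulated worker failure")
        return [x * 2 for x in bundle]

    return process

result, attempts = run_with_retries(make_flaky(2), [1, 2, 3])
print(result, attempts)  # [2, 4, 6] 3
```

A transient failure succeeds on a later attempt, while a processor that fails every time exhausts the attempts and surfaces the error, which is why deterministic errors need handling in the pipeline itself.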

Autoscaling Dataflow workers

Autoscaling allows GCP Dataflow to adjust the number of worker nodes dynamically based on the workload. The service scales the worker pool up or down according to signals such as the amount of pending work and the observed throughput, within the limits configured for the job. This improves resource utilization and can reduce the cost of running data processing jobs, since you neither pay for idle workers during quiet periods nor fall behind during peaks.
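To build intuition for how a worker count might follow the workload, here is a deliberately simple heuristic in plain Python. It is a hypothetical model and not the algorithm Dataflow uses: it sizes the pool so that the current backlog could be drained within a target time, then clamps the result to configured minimum and maximum worker counts.

```python
import math

def target_workers(backlog_elements, per_worker_throughput, drain_seconds,
                   min_workers=1, max_workers=10):
    """Workers needed to drain the backlog in drain_seconds, within the limits."""
    capacity_per_worker = per_worker_throughput * drain_seconds
    needed = math.ceil(backlog_elements / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))

print(target_workers(9_000, per_worker_throughput=100, drain_seconds=60))    # 2
print(target_workers(120_000, per_worker_throughput=100, drain_seconds=60))  # 10
print(target_workers(0, per_worker_throughput=100, drain_seconds=60))        # 1
```

The upper bound matters in practice: without it, a sudden spike in input could scale the job, and its cost, further than intended.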

Optimizing job performance

Optimizing the performance of a Dataflow job involves fine-tuning various aspects of the pipeline to achieve optimal resource utilization and processing speed. This can include optimizing the choice of data sources, configuring appropriate parallelism, tuning window sizes, and optimizing data shuffling and grouping operations. By optimizing these factors, you can reduce processing time, improve resource utilization, and achieve better overall performance. Continuous monitoring and analysis of job metrics and logs can help identify areas for optimization and fine-tuning.

Integrating GCP Dataflow with Other Services

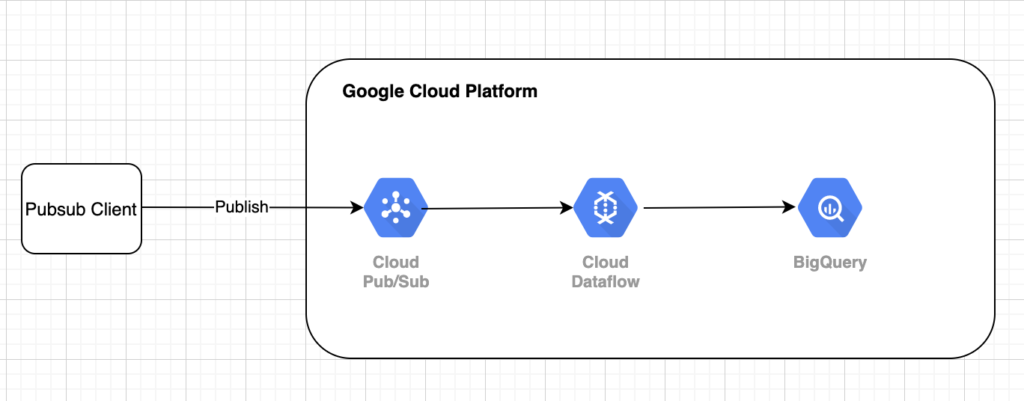

Integration with BigQuery

GCP Dataflow seamlessly integrates with BigQuery, Google’s fully managed and scalable data warehouse. This integration allows for easy transfer and processing of data between Dataflow pipelines and BigQuery tables. Dataflow can write processed data directly into BigQuery, enabling real-time analytics and reporting on the transformed data. Conversely, Dataflow can read data from BigQuery as an input source for processing. This integration provides a powerful combination for performing advanced analytics and processing large datasets efficiently.

Integration with Google Cloud Storage

Google Cloud Storage is a scalable and durable cloud storage system, and Dataflow can seamlessly integrate with it. Dataflow can read data from and write data to Cloud Storage as part of the processing pipeline. This integration enables efficient processing of massive datasets stored in Cloud Storage, eliminating the need for manual data transfers. By integrating with Cloud Storage, Dataflow provides a scalable and cost-effective solution for processing data stored in the cloud.

Integration with Pub/Sub

GCP Dataflow integrates with Pub/Sub, Google’s messaging service for real-time event streaming. Dataflow can read messages from Pub/Sub topics and subscriptions and publish results back to Pub/Sub, allowing for real-time processing and analytics. This integration is well-suited for scenarios where timely processing of streaming data is essential, such as real-time fraud detection or monitoring systems. By integrating with Pub/Sub, Dataflow enables organizations to build robust and scalable data processing pipelines for real-time data streams.

Dataflow Flex Templates

What are Dataflow Flex Templates?

Dataflow Flex Templates package a pipeline so that it can be launched repeatedly without rebuilding it. A Flex Template consists of a container image that holds the pipeline code and its dependencies, plus a template specification file stored in Cloud Storage that points to the image and describes the parameters the job accepts. Because the parameters are supplied at launch time, the same template can be reused for different inputs, outputs, and environments. This separates developing a pipeline from running it and makes it easier to deploy data processing pipelines.

Creating and deploying Flex Templates

To create a Dataflow Flex Template, you start by writing and testing the pipeline with the Apache Beam SDK. The pipeline is then packaged into a container image and pushed to a container registry such as Artifact Registry, and a template specification file referencing that image is written to Cloud Storage. A job is launched from the template by pointing to the specification file and providing the input parameters for the job, for example from the Google Cloud console, the gcloud CLI, or the Dataflow API. This enables easy deployment and execution of Dataflow jobs across different environments and scales.

Migration Strategies to GCP Dataflow

Considerations for migrating from other data processing systems

Migrating from other data processing systems to GCP Dataflow requires careful planning and consideration. Before making the move, it is important to assess the existing system’s architecture, capabilities, and limitations. Understanding the specific requirements and challenges of the migration will help in formulating a migration strategy and identifying potential roadblocks. It is also important to evaluate the compatibility of the existing data processing logic and workflows with the Dataflow model. Considerations like data formats, dependencies, and integration points need to be taken into account to ensure a smooth and successful migration.

Migration steps and best practices

A successful migration to GCP Dataflow involves following a systematic approach and adhering to best practices. The migration process typically includes steps such as analyzing the existing system, designing the Dataflow pipeline, implementing the pipeline logic, migrating the data, and testing and validating the migrated solution. Throughout the process, it is crucial to closely monitor and validate the accuracy and quality of the migrated data. Adopting best practices like incremental migration, leveraging parallelization capabilities, and using version control can greatly facilitate the migration process and minimize risks.
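One way to validate migrated data is to run the old and new pipelines on the same input and compare their outputs without depending on record order. The sketch below is a minimal plain-Python approach under the assumption that both outputs fit in memory as lists of JSON-serializable records; the function names are hypothetical.

```python
import hashlib
import json

def fingerprint(records):
    """Return (record count, digest); the digest ignores record and key order."""
    digests = sorted(
        hashlib.sha256(json.dumps(r, sort_keys=True).encode("utf-8")).hexdigest()
        for r in records
    )
    combined = hashlib.sha256("".join(digests).encode("utf-8")).hexdigest()
    return len(digests), combined

def outputs_match(legacy_output, migrated_output):
    """True when both outputs contain exactly the same records."""
    return fingerprint(legacy_output) == fingerprint(migrated_output)

legacy = [{"id": 1, "total": 50.0}, {"id": 2, "total": 7.5}]
migrated = [{"total": 7.5, "id": 2}, {"id": 1, "total": 50.0}]
print(outputs_match(legacy, migrated))  # True
```

For outputs too large to hold in memory, the same idea can be applied per partition or per day of data, which also fits an incremental migration.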

Use Cases for GCP Dataflow

Real-time data processing

GCP Dataflow is well-suited for real-time data processing use cases, where data needs to be processed and analyzed as it arrives. It enables organizations to perform real-time analytics, anomaly detection, and event-based processing on streaming data. By leveraging the windowing capabilities of Dataflow, real-time aggregations and insights can be derived from continuous data streams. Use cases like fraud detection, sensor data processing, and real-time monitoring can benefit from the scalability and fault tolerance features of Dataflow.

Batch data processing

Batch data processing is another common use case for GCP Dataflow. It enables the processing of large volumes of data in batch mode, making it ideal for scenarios where data is collected over a specific time period and needs to be processed in bulk. Batch processing use cases include data preparation, data cleansing, data enrichment, and data transformation tasks. Dataflow’s parallel processing capabilities and autoscaling feature make it efficient and cost-effective for processing large datasets.

ETL (Extract, Transform, Load) pipelines

GCP Dataflow is an excellent choice for building ETL pipelines, where data needs to be extracted from multiple sources, transformed or cleansed, and loaded into a target destination. Dataflow provides the necessary tools and capabilities for handling complex data transformations, data quality checks, and integration with various data sources and sinks. ETL use cases can include data integration, data migration, data synchronization, and data consolidation tasks. Dataflow’s scalability, fault tolerance, and integration capabilities make it well-suited for ETL processes.
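The three ETL stages can be sketched end to end in plain Python. The CSV content, field names, and cleaning rules below are invented for illustration, and an in-memory buffer stands in for the real sink; a Dataflow pipeline would read from and write to services such as Cloud Storage or BigQuery instead.

```python
import csv
import io
import json

RAW_CSV = """id,email,amount
1, Ada@Example.com ,10.50
2,,3.00
3,linus@example.com,not-a-number
4,grace@example.com,7
"""

def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize emails and drop rows that fail quality checks."""
    clean = []
    for row in rows:
        email = row["email"].strip().lower()
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # quality check: amount must be numeric
        if not email:
            continue  # quality check: email is required
        clean.append({"id": int(row["id"]), "email": email, "amount": amount})
    return clean

def load(rows, sink):
    """Load: write one JSON document per line and return the row count."""
    for row in rows:
        sink.write(json.dumps(row) + "\n")
    return len(rows)

sink = io.StringIO()
print(load(transform(extract(RAW_CSV)), sink))  # 2
print(sink.getvalue(), end="")
```

In a production pipeline the rejected rows would usually be written to a separate output for inspection rather than silently dropped.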

Cost Optimization and Pricing

Understanding Dataflow pricing model

GCP Dataflow pricing is based on a pay-as-you-go model, where you are billed for the resources your jobs actually consume. The main factors are the vCPU, memory, and persistent disk used by the workers for the duration of the job, plus the data processed by service features such as Dataflow Shuffle or Streaming Engine when they are enabled. Rates differ between batch and streaming jobs and by region, and other services the pipeline uses, such as Pub/Sub, Cloud Storage, or BigQuery, are billed separately. The Google Cloud pricing calculator can help you estimate the cost of running your jobs. Understanding the pricing model and optimizing the resource allocation and job configuration can help minimize costs and ensure efficient resource utilization.
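A back-of-the-envelope estimate for the worker portion of a job is just resources multiplied by time and rate. The plain-Python sketch below uses placeholder rates that are not real Dataflow prices; substitute the current figures for your region and job type, and treat the function and parameter names as hypothetical.

```python
# Placeholder rates for illustration only; these are NOT actual Dataflow prices.
PLACEHOLDER_RATES = {
    "vcpu_hour": 0.05,        # per vCPU per hour
    "memory_gb_hour": 0.004,  # per GB of memory per hour
    "disk_gb_hour": 0.0001,   # per GB of persistent disk per hour
}

def estimate_job_cost(workers, hours, vcpus_per_worker, memory_gb_per_worker,
                      disk_gb_per_worker, rates):
    """Estimate worker cost as resources x hours x rate, summed over resource types."""
    vcpu_cost = workers * vcpus_per_worker * hours * rates["vcpu_hour"]
    memory_cost = workers * memory_gb_per_worker * hours * rates["memory_gb_hour"]
    disk_cost = workers * disk_gb_per_worker * hours * rates["disk_gb_hour"]
    return round(vcpu_cost + memory_cost + disk_cost, 4)

cost = estimate_job_cost(
    workers=5, hours=2, vcpus_per_worker=4,
    memory_gb_per_worker=15, disk_gb_per_worker=250,
    rates=PLACEHOLDER_RATES,
)
print(cost)  # 2.85 with the placeholder rates above
```

The estimate leaves out data-processing charges and the cost of other services, so it is a lower bound rather than a full bill.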

Recommendations for cost optimization

To optimize costs when using GCP Dataflow, there are several strategies and best practices to consider. Firstly, right-sizing the resources based on the workload can help ensure efficient resource utilization and minimize costs. Understanding the data processing requirements and fine-tuning parameters like parallelism, window sizes, and resource allocation can help achieve optimal performance and cost-effectiveness. Additionally, monitoring and analyzing job metrics and logs can help identify any inefficiencies or bottlenecks that can be optimized to reduce costs. Finally, regularly reviewing and optimizing the Dataflow job configurations and pipeline logic can help eliminate any unnecessary processing steps or redundant operations, further reducing costs.