If you’ve ever found yourself drowning in a sea of data, desperately in need of an efficient solution for storing and retrieving it, then look no further than GCP Cloud Storage. This powerful tool offers a seamless and user-friendly experience for managing all your data storage needs. Whether you’re a small business owner or a seasoned IT professional, GCP Cloud Storage provides the scalability and flexibility you require, all while ensuring the highest level of security for your information. Say goodbye to the days of lost files and overwhelmed servers, and say hello to a new era of efficient data management with GCP Cloud Storage.

Overview of GCP Cloud Storage

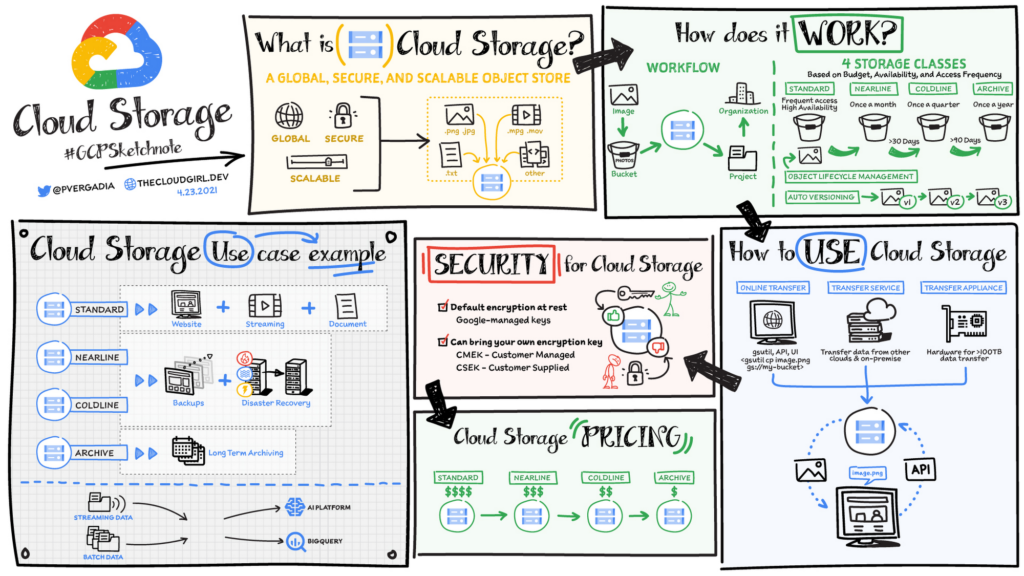

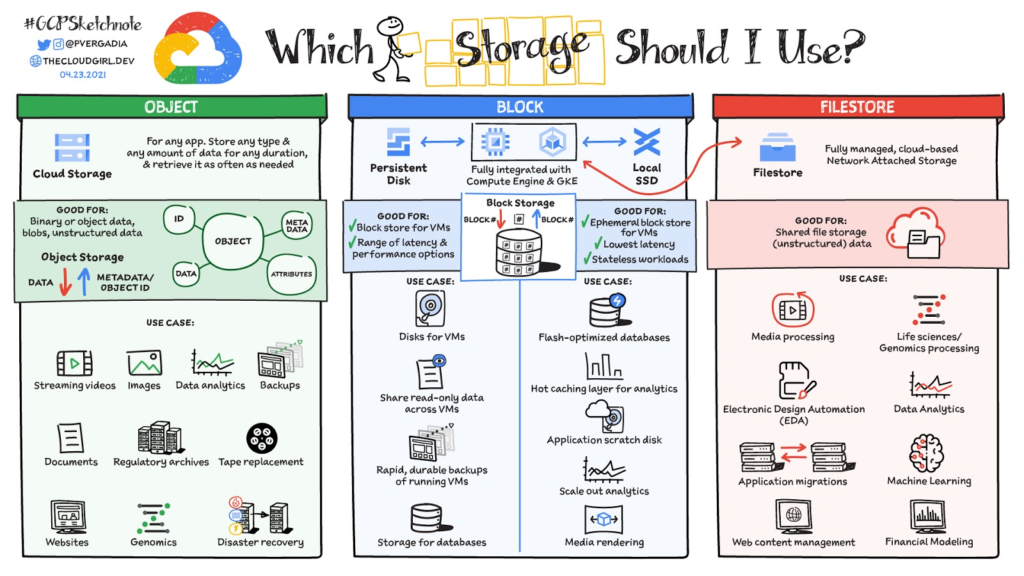

GCP Cloud Storage is a powerful and scalable object storage service provided by Google Cloud Platform. It allows users to store and retrieve data in the cloud with ease and efficiency. With GCP Cloud Storage, you can securely store and manage your data, ranging from small files to large datasets. It offers a highly available and durable storage infrastructure, ensuring that your data is safe and accessible at all times.

What is GCP Cloud Storage?

GCP Cloud Storage is a fully managed object storage service designed to store and retrieve any amount of data from any location. It provides a simple and secure way to store and manage your data without worrying about infrastructure management. GCP Cloud Storage is highly scalable, allowing you to seamlessly handle growing volumes of data. It offers multiple storage classes to cater to different performance and cost requirements, making it a flexible solution for various use cases.

Key features of GCP Cloud Storage

GCP Cloud Storage offers several features that make it a reliable and efficient storage solution:

- Global availability: You can store data in locations around the world and choose one close to your users or compute resources, which helps keep access latency low.

- Scalability: Storage grows and shrinks with your data. There is no capacity to provision in advance, so adding or removing data does not disrupt your applications.

- Durability: GCP Cloud Storage provides high durability for your data by storing multiple copies across different locations. This ensures that your data remains intact even in the event of hardware failures or data center outages.

- Security: GCP Cloud Storage offers advanced security features to protect your data. It provides access controls and IAM (Identity and Access Management) policies to manage fine-grained access permissions. Additionally, data is encrypted at rest by default.

- Integration: GCP Cloud Storage integrates with other Google Cloud services such as BigQuery, Pub/Sub, and AI Platform, enabling you to build data pipelines and workflows on top of your stored data.

Creating a GCP Cloud Storage Bucket

Accessing the GCP Management Console

To create a GCP Cloud Storage bucket, you first need to access the GCP Management Console. Open your web browser and navigate to the Google Cloud Platform homepage. Click on the “Console” button at the top right corner of the page. This will take you to the GCP Management Console, where you can manage all your GCP resources.

Creating a new project

In the GCP Management Console, navigate to the project selection dropdown at the top of the page. Click on the dropdown and select the option to create a new project. Enter a name for your project and click on the “Create” button. This will create a new project in GCP, which will serve as the container for your GCP Cloud Storage bucket.

Enabling the Cloud Storage API

Before you can create a GCP Cloud Storage bucket, you need to enable the Cloud Storage API for your project. In the GCP Management Console, navigate to the “APIs & Services” section. Click on the “Library” tab and search for “Cloud Storage API”. Click on the API from the search results and enable it for your project.

Creating a new bucket

Once the Cloud Storage API is enabled, you can proceed with creating a new GCP Cloud Storage bucket. In the GCP Management Console, navigate to the “Cloud Storage” section. Click on the “Create Bucket” button to initiate the bucket creation process. Provide a unique name for your bucket, select the desired location and storage class, and configure any additional settings as needed. Finally, click on the “Create” button to create the GCP Cloud Storage bucket.
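The same bucket can also be created from code. The following is a minimal sketch, assuming the google-cloud-storage Python client library is installed and Application Default Credentials are configured. The helper names are hypothetical, and the name check covers only the most common naming rules, not the full set.

```python
import re

# Simplified check for the common naming rules: 3-63 characters, lowercase
# letters, digits, dashes, underscores and dots, starting and ending with a
# letter or digit. The complete rules contain additional restrictions.
_BUCKET_NAME = re.compile(r"^[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]$")


def looks_like_valid_bucket_name(name):
    """Return True if the name passes the simplified naming check."""
    return bool(_BUCKET_NAME.match(name))


def create_bucket(name, location="US", storage_class="STANDARD"):
    """Create a bucket with the given location and storage class."""
    if not looks_like_valid_bucket_name(name):
        raise ValueError(f"bucket name {name!r} fails the basic naming check")

    # Imported lazily so the name check works without the library installed.
    from google.cloud import storage

    client = storage.Client()  # picks up Application Default Credentials
    bucket = client.bucket(name)
    bucket.storage_class = storage_class
    return client.create_bucket(bucket, location=location)
```

Calling `create_bucket("my-team-logs-2024", location="EU")` would then create the bucket, provided the name is not already taken by another Google Cloud user.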

Upload and Manage Data in GCP Cloud Storage

Uploading data to a bucket

Once you have created a GCP Cloud Storage bucket, you can start uploading data to it. There are multiple ways to upload data to a bucket:

- Using the GCP Management Console: In the Cloud Storage section of the GCP Management Console, navigate to your bucket and click on the “Upload Files” button. Select the files you want to upload and click on the “Open” button. This will initiate the upload process, and your files will be transferred to the cloud storage bucket.

- Using the gsutil command-line tool: gsutil is a command-line tool provided by Google Cloud SDK for interacting with GCP Cloud Storage. You can use the gsutil command to upload files to a bucket. Open a terminal or command prompt and navigate to the location of the files you want to upload. Use the following command to upload the files:

  gsutil cp [FILE_PATH] gs://[BUCKET_NAME]

  Replace [FILE_PATH] with the path to the files on your local machine and [BUCKET_NAME] with the name of your GCP Cloud Storage bucket.

- Using client libraries: Google Cloud provides client libraries for various programming languages, including Java, Python, Go, and more. You can use these client libraries to integrate GCP Cloud Storage into your applications and programmatically upload data to a bucket.
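As an illustration of the client-library route, here is a small Python sketch. It assumes `bucket` is a `Bucket` object from the google-cloud-storage library (for example `storage.Client().bucket("my-bucket")`); the function names and the prefix convention are hypothetical.

```python
from pathlib import PurePosixPath


def destination_name(local_path, prefix=""):
    """Build the object name: an optional prefix plus the file's base name."""
    filename = PurePosixPath(local_path.replace("\\", "/")).name
    prefix = prefix.strip("/")
    return f"{prefix}/{filename}" if prefix else filename


def upload_file(bucket, local_path, prefix=""):
    """Upload one local file and return the object name it was stored under."""
    name = destination_name(local_path, prefix)
    # Blob.upload_from_filename reads the file from disk and sends it.
    bucket.blob(name).upload_from_filename(local_path)
    return name
```

Keeping the name-building step separate from the upload call makes it easy to check the resulting object names before any data is transferred.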

Managing objects in a bucket

Once data is uploaded to a GCP Cloud Storage bucket, you can manage the objects stored within it. Some common operations for managing objects in a bucket include:

- Listing objects: You can retrieve a list of objects stored in a bucket using the GCP management console, gsutil command-line tool, or the Cloud Storage client libraries.

- Downloading objects: You can download objects from a bucket using the GCP management console, gsutil command-line tool, or the Cloud Storage client libraries. Simply navigate to the object in the management console, use the gsutil cp command, or use the appropriate method in the client libraries to download the object.

- Deleting objects: If you no longer need an object, you can delete it from the bucket. In the GCP management console, navigate to the object and click on the delete button. You can also use the gsutil rm command or the appropriate method in the client libraries to delete objects programmatically.
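The listing and deleting operations above might look like this with the Python client library. This is a sketch, assuming `client` is a `google.cloud.storage.Client`; the function names are hypothetical, and the refusal to delete with an empty prefix is a safety choice made for this example only.

```python
def list_object_names(client, bucket_name, prefix=""):
    """Return the names of all objects whose name starts with the prefix."""
    return [blob.name for blob in client.list_blobs(bucket_name, prefix=prefix)]


def delete_objects(client, bucket_name, prefix):
    """Delete every object under a prefix and return how many were removed."""
    if not prefix:
        # Guard added for this sketch: never wipe a whole bucket by accident.
        raise ValueError("refusing to delete without a prefix")
    deleted = 0
    for blob in client.list_blobs(bucket_name, prefix=prefix):
        blob.delete()
        deleted += 1
    return deleted
```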

Setting access permissions for objects

GCP Cloud Storage allows you to control access permissions for the objects stored in your buckets. You can set access control lists (ACLs) or use IAM policies to manage permissions. ACLs allow you to grant read, write, or owner access to specific users or groups. IAM policies offer more fine-grained control over permissions and allow you to define access based on roles and conditions.

To set access permissions using the GCP management console, navigate to the object in your bucket. Click on the “Edit permissions” button and add the desired users or groups with the appropriate access levels. You can also use the gsutil acl command or the appropriate method in the Cloud Storage client libraries to set access permissions programmatically.

Retrieving Data from GCP Cloud Storage

Using Google Cloud SDK

Google Cloud SDK provides a set of command-line tools and libraries for interacting with Google Cloud Platform services, including GCP Cloud Storage. To retrieve data from GCP Cloud Storage using the Google Cloud SDK, you can use the gsutil command-line tool. Use the following command to copy a file from a GCP Cloud Storage bucket to your local machine:

gsutil cp gs://[BUCKET_NAME]/[OBJECT_NAME] [LOCAL_PATH]

Replace [BUCKET_NAME] with the name of the GCP Cloud Storage bucket, [OBJECT_NAME] with the name of the object you want to retrieve, and [LOCAL_PATH] with the desired path on your local machine to save the retrieved file.

Using Google Cloud Storage JSON API

The Google Cloud Storage JSON API provides a RESTful interface for accessing and manipulating GCP Cloud Storage resources. You can use the API to retrieve data from GCP Cloud Storage programmatically. The API supports various HTTP methods, including GET requests to retrieve objects from a bucket.

To retrieve an object using the Google Cloud Storage JSON API, you need to send a GET request to the appropriate endpoint. The request should include the necessary headers and parameters, such as the bucket name and object name. Upon receiving the request, the API will return the requested object as a response.
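To make the request shape concrete, the sketch below builds the download URL and the authorization header for a JSON API object request. It uses only the Python standard library; the function names are hypothetical, and obtaining the OAuth 2.0 access token is left out. Note that the object name must be percent-encoded, including any slashes.

```python
from urllib.parse import quote

API_ROOT = "https://storage.googleapis.com/storage/v1"


def object_media_url(bucket, object_name):
    """Return the JSON API URL that downloads an object's contents."""
    # safe="" also encodes "/" so the whole name stays one path segment.
    encoded = quote(object_name, safe="")
    # alt=media asks for the object data instead of its metadata.
    return f"{API_ROOT}/b/{quote(bucket, safe='')}/o/{encoded}?alt=media"


def auth_headers(access_token):
    """Return the header that carries an OAuth 2.0 access token."""
    return {"Authorization": f"Bearer {access_token}"}
```

A GET request to `object_media_url("my-bucket", "logs/2024/app.log")` with these headers would return the object body; dropping `?alt=media` returns the object's metadata as JSON instead.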

Using client libraries

Google Cloud provides client libraries for popular programming languages, such as Java, Python, Go, and more. These client libraries offer a convenient way to retrieve data from GCP Cloud Storage programmatically. They provide language-specific APIs and abstractions for interacting with GCP Cloud Storage, simplifying the retrieval process.

To retrieve data using a client library, you need to import the appropriate library into your project. Then, you can use the provided methods and classes to retrieve objects from a GCP Cloud Storage bucket. The client libraries handle the necessary authentication and communication with the GCP Cloud Storage service, allowing you to focus on writing the retrieval logic.
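A retrieval with the Python client library could be as short as the following sketch. It assumes `client` is a `google.cloud.storage.Client` and that the object holds text; the function name is hypothetical.

```python
def download_text(client, bucket_name, object_name, encoding="utf-8"):
    """Download an object and decode its contents as text."""
    blob = client.bucket(bucket_name).blob(object_name)
    # download_as_bytes fetches the whole object into memory, so this
    # approach suits small objects rather than multi-gigabyte files.
    return blob.download_as_bytes().decode(encoding)
```

For large objects, writing to disk with the blob's `download_to_filename` method is usually a better fit than holding everything in memory.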

Leveraging GCP Cloud Storage Features

Object lifecycle management

GCP Cloud Storage offers object lifecycle management, which allows you to define rules to automatically manage the lifecycle of your objects. You can set up lifecycle policies to automatically transition objects to a different storage class, delete objects after a certain period, or perform other actions based on predefined conditions.

By leveraging object lifecycle management, you can optimize costs and ensure that your data meets the required retention and access requirements. For example, you can configure lifecycle policies to automatically move infrequently accessed objects to a lower-cost storage class or delete obsolete objects after a specific time.
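The example in the previous paragraph can be written down as a lifecycle configuration. The sketch below builds one in Python, in the shape used by the `lifecycle` field of the JSON API bucket resource. The function name and the day counts are illustrative assumptions.

```python
import json


def lifecycle_config(nearline_after_days, delete_after_days):
    """Build a config that moves objects to Nearline, then deletes them."""
    if nearline_after_days >= delete_after_days:
        raise ValueError("objects must be moved before they are deleted")
    return {
        "lifecycle": {
            "rule": [
                {
                    # Move Standard objects to Nearline once they are old enough.
                    "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
                    "condition": {
                        "age": nearline_after_days,
                        "matchesStorageClass": ["STANDARD"],
                    },
                },
                {
                    # Delete objects after the retention period has passed.
                    "action": {"type": "Delete"},
                    "condition": {"age": delete_after_days},
                },
            ]
        }
    }


def lifecycle_json(nearline_after_days=30, delete_after_days=365):
    """Serialize the configuration so it can be saved to a file."""
    return json.dumps(lifecycle_config(nearline_after_days, delete_after_days), indent=2)
```

The `age` condition is measured in days since the object was created, so the two rules here act on the same object at different points in its life.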

Data encryption

Data encryption is a crucial aspect of securing your data stored in GCP Cloud Storage. GCP Cloud Storage provides server-side encryption by default, ensuring that your data is encrypted at rest. It uses Google-managed keys to encrypt your objects, providing strong security for your data.

Additionally, GCP Cloud Storage supports customer-supplied encryption keys (CSEK), which allow you to provide your own encryption keys for added control over the encryption process. With CSEK, you can manage the encryption keys and ensure that only authorized parties can access your data.
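As a rough sketch of how CSEK might be used from Python: the client library accepts a 32-byte AES-256 key when a blob object is created. Here `bucket` is assumed to be a google-cloud-storage `Bucket`, and the function names are hypothetical. Because Google does not store the key, losing it means losing access to the object.

```python
import os


def new_csek():
    """Generate a random 32-byte key suitable for AES-256."""
    return os.urandom(32)


def upload_encrypted(bucket, object_name, data, key):
    """Upload bytes encrypted with a customer-supplied key."""
    if len(key) != 32:
        raise ValueError("a customer-supplied key must be exactly 32 bytes")
    # The same key must be passed again to read the object later.
    blob = bucket.blob(object_name, encryption_key=key)
    blob.upload_from_string(data)
    return blob
```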

Object versioning

GCP Cloud Storage supports object versioning, which allows you to keep multiple versions of an object in a bucket. With object versioning, you can retain previous versions of an object even if it is overwritten or deleted. This provides an extra layer of data protection and allows for easy recovery in case of accidental modifications or deletions.

Object versioning is particularly useful in scenarios where you need to maintain a history of changes made to your objects. It enables you to track and restore previous versions, ensuring data integrity and providing a safety net against unwanted modifications.
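With the Python client library, turning versioning on and inspecting the stored versions could look like the sketch below. It assumes `bucket` and `client` come from google-cloud-storage; the function names are hypothetical. Each version of an object is identified by its generation number.

```python
def enable_versioning(bucket):
    """Turn on object versioning for a bucket."""
    bucket.versioning_enabled = True
    bucket.patch()  # sends the changed setting to Cloud Storage


def list_generations(client, bucket_name, object_name):
    """Return the generation numbers of all stored versions of one object."""
    blobs = client.list_blobs(bucket_name, prefix=object_name, versions=True)
    # The prefix may match other objects too, so filter on the exact name.
    return sorted(b.generation for b in blobs if b.name == object_name)
```

Since every retained version is billed as stored data, versioning is often paired with a lifecycle rule that removes old versions.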

Monitoring GCP Cloud Storage

Configuring Cloud Storage metrics

GCP Cloud Storage offers various metrics that provide insights into the performance and usage of your storage buckets. These metrics can help you monitor the health and performance of your storage infrastructure and make informed decisions on resource allocation.

To view Cloud Storage metrics, navigate to the “Monitoring” section in the GCP Management Console. Click on “Metrics Explorer” and select the Cloud Storage bucket resource type (listed as “GCS Bucket”). Choose the metric to chart, such as total bytes stored or request count, and add any filters or groupings, for example by bucket name. These metrics are collected automatically, so there is nothing to create; you can save the resulting chart to a dashboard for ongoing monitoring.

Setting up alerts and notifications

In addition to monitoring metrics, GCP Cloud Storage allows you to set up alerts and notifications based on specific conditions. Alerts can help you monitor critical thresholds and take timely action to prevent issues or optimize resource utilization.

Alerts are configured in Cloud Monitoring rather than in Cloud Storage itself. Navigate to the “Alerting” section in the GCP Management Console, click on “Create Policy”, and configure the conditions for triggering the alert. You can set thresholds on Cloud Storage metrics or define custom conditions using logs-based metrics. Once the conditions are defined, choose the notification channels, such as email or a Pub/Sub topic.

Managing Costs and Billing

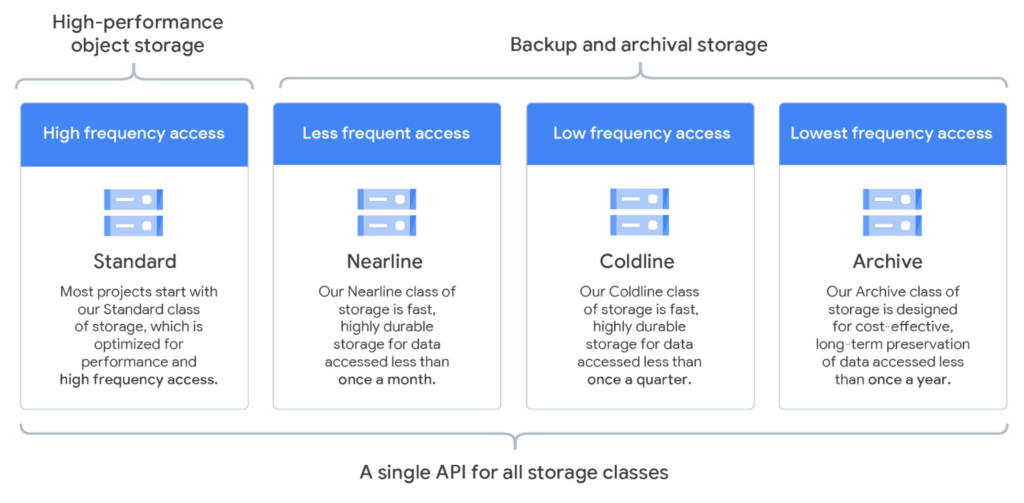

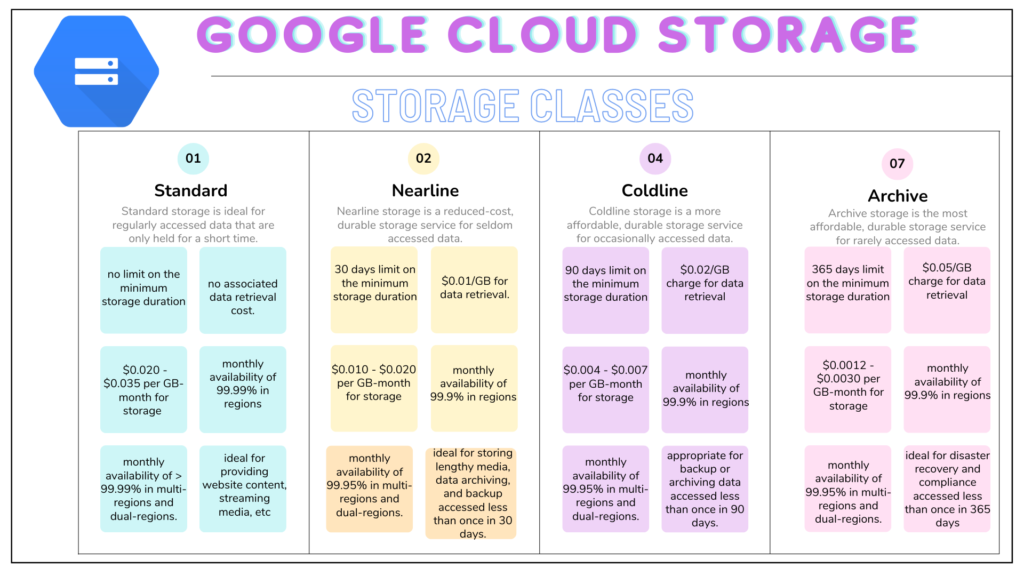

Understanding storage classes

GCP Cloud Storage offers different storage classes to meet various performance and cost requirements. Each storage class has its unique characteristics and pricing model. Understanding the available storage classes can help you make informed decisions when choosing the appropriate class for your data.

The storage classes offered by GCP Cloud Storage include:

- Standard: This is the default storage class, intended for frequently accessed data. It has no minimum storage duration and no retrieval fees.

- Nearline: Nearline storage is a lower-cost option for data that is accessed roughly once a month or less. It has a 30-day minimum storage duration and charges a fee for retrieving data.

- Coldline: Coldline storage is designed for data that is accessed roughly once a quarter or less, such as backups. It has a lower storage price than Nearline, a 90-day minimum storage duration, and higher retrieval fees.

- Archive: Archive storage is ideal for data accessed less than once a year that requires long-term retention. It offers the lowest storage cost but has a 365-day minimum storage duration and the highest retrieval costs.

All four classes return data with millisecond latency. The colder classes trade lower storage prices for retrieval fees and minimum storage durations rather than for slower access.

By understanding the characteristics of each storage class and analyzing your data access patterns, you can choose the appropriate storage class to optimize costs while meeting your performance requirements.

Calculating storage costs

GCP Cloud Storage pricing is based on several factors, including storage capacity, data transfer, and operations performed on the data. To calculate the storage costs for your GCP Cloud Storage usage, you need to consider these factors.

The pricing for GCP Cloud Storage varies depending on the storage class chosen, the geographical location of the data, and the volume of data stored. It is important to understand the pricing structure and accurately estimate the costs to effectively manage your budget.

Google provides a pricing calculator tool that allows you to estimate the costs based on your storage needs. You can input the storage class, storage duration, data volume, and other details to get an estimate of the monthly costs. Additionally, Google provides detailed pricing documentation that provides information on the cost components and factors affecting pricing.
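For a quick back-of-the-envelope estimate, the three cost components can be combined in a few lines of Python. This is only a sketch: the prices in the usage note are placeholders, not real rates, and the model ignores retrieval fees, minimum storage durations, and free tiers. Current rates should always be taken from the pricing page or the calculator.

```python
from dataclasses import dataclass


@dataclass
class Prices:
    """Unit prices in USD. The values passed in are the caller's assumption."""
    storage_per_gb_month: float
    per_1000_operations: float
    egress_per_gb: float


def estimate_monthly_cost(prices, stored_gb, operations, egress_gb):
    """Add up storage, operation, and data transfer charges for one month."""
    storage = stored_gb * prices.storage_per_gb_month
    ops = operations / 1000 * prices.per_1000_operations
    egress = egress_gb * prices.egress_per_gb
    return round(storage + ops + egress, 2)
```

With placeholder prices of `Prices(0.02, 0.005, 0.12)`, storing 500 GB, making 100,000 requests, and transferring 50 GB out would come to 10.00 + 0.50 + 6.00 = 16.50 per month.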

Managing billing and budgeting

Google Cloud provides various tools for managing billing and budgeting through Cloud Billing. You can set up budgets and receive notifications when spending passes the thresholds you define. Budgets can be scoped, for example to specific projects or services, which gives you visibility into your storage costs and helps prevent billing surprises. Note that a budget only sends alerts; it does not cap spending on its own.

In addition to budgets, Cloud Billing provides detailed billing reports and usage analysis tools. These tools offer insights into your storage usage patterns, helping you optimize costs and identify potential areas for cost reduction. By regularly reviewing your usage and monitoring your spending, you can ensure efficient budget management and maximize the value of your storage investment.

Securing GCP Cloud Storage

Access control and IAM

GCP Cloud Storage provides robust access control measures to secure your data. Access controls allow you to define who can access your data, what actions they can perform, and when they can access it. GCP Cloud Storage supports two main mechanisms for access control: Access Control Lists (ACLs) and IAM (Identity and Access Management).

ACLs allow you to grant read, write, or owner access to specific users or groups. You can define access control rules at the bucket or object level, providing fine-grained control over permissions. IAM, on the other hand, allows you to manage access permissions using roles and policies. IAM offers more flexibility and control over permissions by defining roles with specific permissions and applying them to users or groups.

By carefully configuring access controls and regularly reviewing permissions, you can ensure that only authorized users can access and manipulate your data in GCP Cloud Storage, reducing the risk of unauthorized access or data breaches.
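Granting an IAM role on a bucket from code might look like the following sketch, which follows the read-modify-write pattern of the google-cloud-storage Python library. `bucket` is assumed to be a `Bucket` object; the function name is hypothetical, and the role and member in the usage note are examples only.

```python
def grant_bucket_role(bucket, role, member):
    """Add one member to one role in the bucket's IAM policy."""
    # Version 3 is requested so policies with conditions are handled correctly.
    policy = bucket.get_iam_policy(requested_policy_version=3)
    policy.bindings.append({"role": role, "members": {member}})
    bucket.set_iam_policy(policy)
    return policy
```

For instance, `grant_bucket_role(bucket, "roles/storage.objectViewer", "user:alice@example.com")` would give that user read access to the objects in the bucket.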

Signed URLs and public access prevention

By default, objects in a bucket are private and can only be accessed by users with the necessary permissions. However, there may be situations where you need to provide temporary access to specific objects without granting permanent permissions.

Signed URLs provide a solution in such cases. A signed URL is a time-limited URL that grants temporary access to an object. It contains a digital signature that verifies the authenticity and validity of the URL. Signed URLs can be generated with specific permissions and expiration times, providing fine-grained control over access to your data.
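Generating a signed URL with the Python client library could be sketched as follows. `blob` is assumed to be a google-cloud-storage `Blob`, and the credentials in use must be able to sign, for example a service account key. The function name and the 15-minute default are illustrative choices; V4 signed URLs can be valid for at most seven days.

```python
import datetime

MAX_V4_LIFETIME = datetime.timedelta(days=7)


def signed_download_url(blob, lifetime=datetime.timedelta(minutes=15)):
    """Return a time-limited URL that allows downloading one object."""
    if not datetime.timedelta(0) < lifetime <= MAX_V4_LIFETIME:
        raise ValueError("lifetime must be positive and at most 7 days")
    return blob.generate_signed_url(
        version="v4",
        expiration=lifetime,  # how long the URL stays valid from now
        method="GET",  # the URL only permits reading the object
    )
```

Anyone holding the URL can download the object until it expires, so short lifetimes are the safer default.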

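Additionally, GCP Cloud Storage offers features to prevent public access to objects. Enabling “Public access prevention” on a bucket blocks its data from being shared with the public, even if an ACL or IAM policy would otherwise allow it. The related “Uniform bucket-level access” feature disables object ACLs so that IAM alone governs access, which makes permissions easier to audit.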

Best Practices for GCP Cloud Storage

Organizing objects and buckets

Organizing objects and buckets in GCP Cloud Storage is crucial for maintaining a well-structured and easily manageable storage infrastructure. By following best practices for organization, you can improve data discoverability, access control management, and overall operational efficiency.

Some best practices for organizing objects and buckets in GCP Cloud Storage include:

- Naming conventions: Establish a consistent naming convention for objects and buckets to ensure clarity and ease of identification. Use descriptive names that reflect the contents or purpose of the objects.

- Logical hierarchy: Use a logical hierarchy when organizing objects within buckets. This can be achieved using prefixes in object names, similar to a file system directory structure. Logical organization facilitates efficient data discovery and management.

- Bucket naming: Choose meaningful names for buckets and avoid generic or ambiguous names. Consider using a logical structure for bucket names to reflect the purpose, project, or team associated with the data.

- Access management: Implement a comprehensive access control strategy by utilizing IAM roles and policies. Define appropriate access controls at the bucket and object level, ensuring that only authorized users have access to the data.
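A naming convention is easiest to keep consistent when it lives in one helper. The sketch below shows one possible scheme, with object names built from a team, a dataset, and a date. The scheme and the function name are assumptions made for illustration, not a recommendation from Google.

```python
import datetime


def object_name(team, dataset, day, filename):
    """Build a prefix-style object name: team/dataset/YYYY/MM/DD/filename."""
    parts = [team, dataset, f"{day:%Y/%m/%d}", filename]
    # Strip stray slashes so the name never contains empty segments.
    return "/".join(part.strip("/") for part in parts)
```

Names built this way can be listed by prefix, so `analytics/clickstream/2024/03/` selects one month of data for one dataset.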

Choosing appropriate storage class

Choosing the appropriate storage class for your data is essential for balancing performance and cost requirements. Consider the access patterns, frequency of access, and the importance of data when selecting the storage class.

Some considerations when choosing a storage class are:

- Frequent access: For frequently accessed data, the “Standard” storage class is a suitable choice. It has no retrieval fees and no minimum storage duration.

- Infrequent access: If the data is accessed about once a month or less, consider the “Nearline” storage class. It offers a lower storage price in exchange for a retrieval fee and a 30-day minimum storage duration.

- Long-term archival: For long-term data archival and backup, the “Coldline” or “Archive” storage class is appropriate. These classes offer the lowest storage prices but have higher retrieval fees and longer minimum storage durations, so they are cost-effective only for data that is rarely read.

Consider the requirements and access patterns of your data to choose the most cost-effective storage class while ensuring that performance needs are met.

Optimizing performance and cost

To optimize performance and cost in GCP Cloud Storage, there are several strategies and best practices to consider:

- Data compression: Compressing data before uploading it to GCP Cloud Storage can reduce storage costs and improve data transfer times. Use compression algorithms suitable for your data type and ensure that your applications can decompress the data when needed.

- Object chunking: Splitting large objects into smaller chunks can improve performance when reading or writing data. By using object chunking, you can parallelize data transfers and optimize network bandwidth utilization.

- Request batching: When making many small metadata calls, such as updating or deleting multiple objects, consider batching them into a single HTTP request. Batching reduces connection overhead, but each call in the batch is still counted as a separate operation, and batch requests cannot be used to upload or download object data.

- Monitoring and optimization: Regularly monitor your storage usage, performance metrics, and costs. Analyze the data to identify potential bottlenecks, optimize resource allocations, and maximize cost efficiencies. Utilize the monitoring and billing reports available in Google Cloud to gain insights into your storage usage.
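The compression strategy above can be sketched with Python's standard `gzip` module and the client library. `bucket` is assumed to be a google-cloud-storage `Bucket`, and the function names are hypothetical. Setting the content encoding to gzip records how the object was stored, which allows Cloud Storage to serve it decompressed to clients that do not accept gzip.

```python
import gzip


def compress_for_upload(data):
    """Gzip-compress a bytes payload."""
    return gzip.compress(data)


def upload_gzipped(bucket, object_name, data, content_type="text/plain"):
    """Upload bytes in compressed form and label them as gzip-encoded."""
    blob = bucket.blob(object_name)
    blob.content_encoding = "gzip"
    blob.upload_from_string(compress_for_upload(data), content_type=content_type)
    return blob
```

Compression pays off for text-like data such as logs, CSV, and JSON; formats that are already compressed, like JPEG or MP4, gain little.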

By implementing these best practices and continuously optimizing your storage infrastructure, you can achieve optimal performance and cost-efficiency in GCP Cloud Storage.

Troubleshooting and Support

Common issues and solutions

While using GCP Cloud Storage, you may encounter common issues that can affect performance, data access, or other aspects of the storage infrastructure. Some common issues and their potential solutions include:

- Access denied errors: If you encounter access denied errors when accessing objects or performing operations, check the access controls and permissions. Ensure that the necessary IAM roles or ACLs are set up correctly.

- Slow performance: Slow performance may occur due to various factors, such as network latency or inefficient data retrieval methods. Check the network connectivity and consider optimizing the retrieval methods, such as using appropriate client libraries or adjusting request patterns.

- Data corruption or loss: Data corruption or loss can be prevented by implementing appropriate backup and versioning strategies. Ensure that you have proper backups in place and consider enabling object versioning to protect against accidental modifications or deletions.

For specific issues, refer to the GCP Cloud Storage documentation or consult the GCP support resources.

Accessing GCP support resources

Google Cloud Platform provides comprehensive support resources to assist users with any issues or questions related to GCP Cloud Storage. These resources include:

- Documentation: The GCP Cloud Storage documentation provides detailed information and guidance on various features, use cases, and best practices. It covers a wide range of topics, from basic concepts to advanced configuration options.

- Community and forums: The GCP community forums allow users to engage with the community and seek help from other users or Google experts. Users can ask questions, share knowledge, and contribute to discussions related to GCP Cloud Storage.

- Support packages: Google Cloud offers several support plans, from free basic support to paid tiers. The paid plans provide varying levels of technical support, including access to Google experts, best practices, and troubleshooting assistance.

By leveraging these support resources, users can obtain timely help and guidance to resolve any issues or challenges they may encounter with GCP Cloud Storage.

In conclusion, GCP Cloud Storage is a highly reliable and scalable object storage service that offers numerous features and capabilities for storing and retrieving data in the cloud. By following best practices, managing costs and security, and leveraging the advanced functionalities of GCP Cloud Storage, users can effectively store, manage, and retrieve data with ease and confidence.