Are you ready to take your container orchestration skills to the next level? Look no further than Azure Kubernetes Service (AKS). With AKS, you can seamlessly deploy, manage, and scale containerized applications using the power and flexibility of Kubernetes. Whether you’re a seasoned pro or just dipping your toes into the world of containerization, this article will serve as your comprehensive guide to getting started with AKS. From setting up your cluster to deploying your first application, we’ve got you covered. So, let’s dive in and unlock the full potential of AKS!

Creating an Azure Kubernetes Service

To begin creating an Azure Kubernetes Service (AKS), you will need an Azure subscription. This subscription will allow you to access and manage all the resources within your AKS cluster. If you don’t already have an Azure subscription, you can easily create one by following the steps outlined on the Azure website.

Once you have your Azure subscription set up, the next step is to create a resource group. A resource group is a logical container for related Azure resources, and the AKS cluster resource itself lives in the one you create. The infrastructure behind the cluster, such as virtual machines, disks, and networking resources, is placed in a second node resource group that AKS creates automatically (named with an MC_ prefix by default). Grouping resources this way makes them easier to manage and organize.

After creating a resource group, you can proceed to create your AKS cluster. AKS is the managed Kubernetes service provided by Azure, and a cluster is one instance of it. It simplifies the deployment, management, and scaling of containerized applications using Kubernetes. When creating your AKS cluster, you will need to specify details such as the number of nodes, the VM size, and the Kubernetes version. Azure handles the provisioning and management of the control plane and the underlying infrastructure, while you focus on deploying and managing your applications.
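The creation steps can also be scripted. The sketch below is one possible way to assemble the Azure CLI calls in Python. The names `demo-rg` and `demo-aks`, the `eastus` region, and the `Standard_DS2_v2` VM size are placeholder assumptions, not values from this article. The script only builds and prints the commands, so nothing is created until you run them yourself.

```python
import shlex


def aks_create_commands(resource_group, location, cluster, node_count, vm_size):
    """Return the az CLI invocations, as argument lists, in the order they run."""
    return [
        # 1. Resource group that will hold the AKS cluster resource.
        ["az", "group", "create", "--name", resource_group, "--location", location],
        # 2. The cluster itself; omitting --kubernetes-version uses the AKS default.
        ["az", "aks", "create",
         "--resource-group", resource_group,
         "--name", cluster,
         "--node-count", str(node_count),
         "--node-vm-size", vm_size,
         "--generate-ssh-keys"],
        # 3. Merge the cluster credentials into the local kubeconfig for kubectl.
        ["az", "aks", "get-credentials",
         "--resource-group", resource_group, "--name", cluster],
    ]


if __name__ == "__main__":
    # All values below are placeholders for illustration.
    for cmd in aks_create_commands("demo-rg", "eastus", "demo-aks", 3, "Standard_DS2_v2"):
        print(shlex.join(cmd))
```

To pin a specific Kubernetes version, `--kubernetes-version` can be added to the `az aks create` call.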

Managing an AKS Cluster

Once your AKS cluster is up and running, you will have a range of options for managing its resources. One of the key aspects of managing an AKS cluster is scaling. Scaling allows you to adjust the number of nodes in your cluster based on the workload requirements. You can scale horizontally by adding or removing nodes, either manually or with the cluster autoscaler. The VM size of an existing node pool generally cannot be changed in place, so scaling vertically means adding a node pool with a different VM size and moving your workloads onto it.

Upgrading your AKS cluster to a new Kubernetes version is also an important aspect of cluster management. AKS supports in-place upgrades to newer Kubernetes versions, which include bug fixes and new features. Nodes are replaced in a rolling fashion during an upgrade, so workloads that run multiple replicas can usually keep serving traffic. Keeping your cluster up to date also keeps it on a supported version that continues to receive security patches.

Another important aspect of managing your AKS cluster is the management of node pools. A node pool is a group of nodes within your AKS cluster. By managing node pools, you can have different configurations and sizes for different sets of nodes within your cluster. This allows for greater flexibility and optimization depending on the workload requirements of your applications.
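The three management tasks above (scaling, upgrading, and adding node pools) each map to an Azure CLI command. The following sketch builds those commands in Python. The resource names, the `<target-version>` placeholder, and the VM size are assumptions for illustration. The name check reflects the documented rule for Linux node pools (lowercase alphanumeric, starting with a letter, at most 12 characters).

```python
import re
import shlex

# Linux node pool names: lowercase alphanumeric, starting with a letter, 1-12 chars.
NODEPOOL_NAME = re.compile(r"[a-z][a-z0-9]{0,11}")


def scale_nodes(resource_group, cluster, nodepool, count):
    """Horizontal scaling: set the node count of one node pool."""
    return ["az", "aks", "scale", "--resource-group", resource_group,
            "--name", cluster, "--nodepool-name", nodepool,
            "--node-count", str(count)]


def upgrade_cluster(resource_group, cluster, version):
    """Upgrade the cluster to a version listed by `az aks get-upgrades`."""
    return ["az", "aks", "upgrade", "--resource-group", resource_group,
            "--name", cluster, "--kubernetes-version", version]


def add_nodepool(resource_group, cluster, nodepool, count, vm_size):
    """Add a node pool, for example one with a larger VM size."""
    if not NODEPOOL_NAME.fullmatch(nodepool):
        raise ValueError(f"invalid Linux node pool name: {nodepool!r}")
    return ["az", "aks", "nodepool", "add", "--resource-group", resource_group,
            "--cluster-name", cluster, "--name", nodepool,
            "--node-count", str(count), "--node-vm-size", vm_size]


if __name__ == "__main__":
    # Placeholder values; "nodepool1" is the CLI's default pool name.
    print(shlex.join(scale_nodes("demo-rg", "demo-aks", "nodepool1", 5)))
    print(shlex.join(upgrade_cluster("demo-rg", "demo-aks", "<target-version>")))
    print(shlex.join(add_nodepool("demo-rg", "demo-aks", "bigpool", 2, "Standard_D8s_v3")))
```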

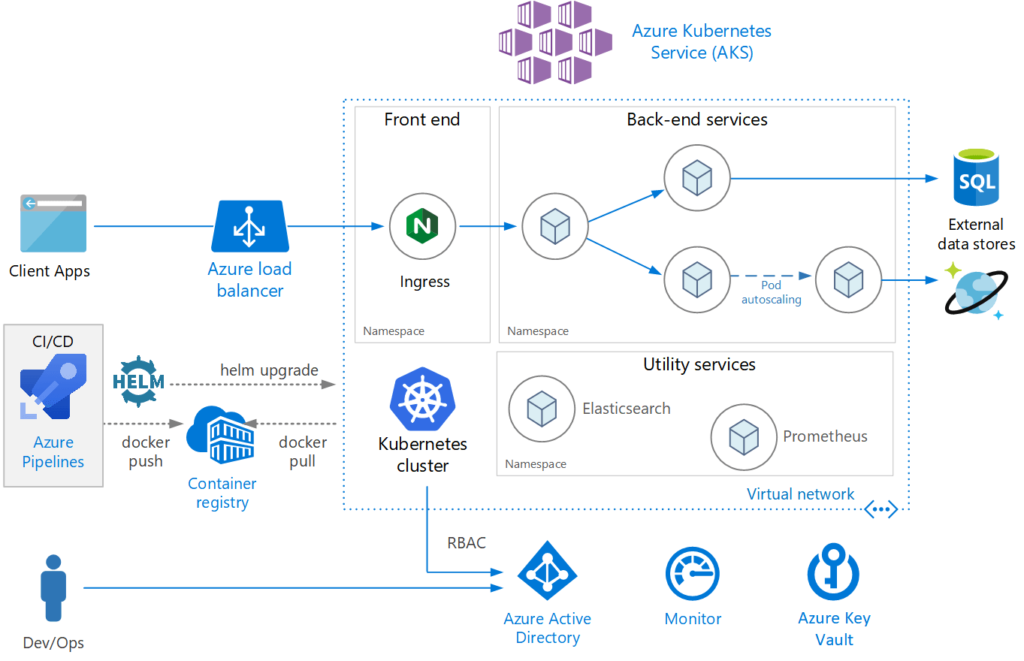

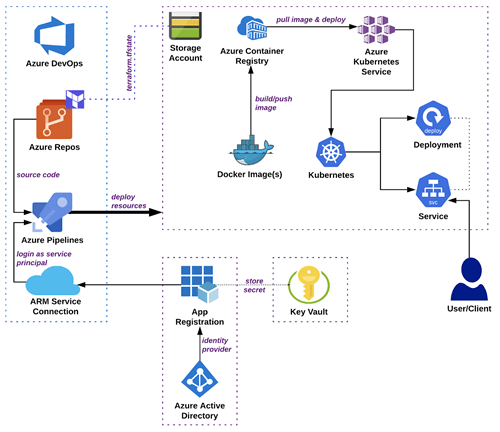

Deploying Applications to AKS

Deploying applications to your AKS cluster involves a few steps. First, you will need to create a Docker image of your application. A Docker image is a lightweight, standalone, executable package that contains everything needed to run your application, including the code, runtime, libraries, and dependencies.

Next, you will push the Docker image to a container registry. A container registry is a centralized location where you can store and manage your Docker images. Azure provides its own container registry, Azure Container Registry, which integrates seamlessly with AKS.
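As a rough illustration of the build and push steps, the sketch below assembles the commands for an image stored in Azure Container Registry. The registry name `myregistry`, the repository `web`, and the tag `1.0` are hypothetical. The only convention relied on is that an ACR login server has the form `<registry>.azurecr.io`.

```python
import shlex


def acr_image_ref(registry, repository, tag):
    """Full image reference for an image hosted in Azure Container Registry."""
    return f"{registry}.azurecr.io/{repository}:{tag}"


def build_and_push_commands(registry, repository, tag, context="."):
    """Commands to authenticate, build the image, and push it to the registry."""
    image = acr_image_ref(registry, repository, tag)
    return [
        ["az", "acr", "login", "--name", registry],       # log Docker in to ACR
        ["docker", "build", "--tag", image, context],     # build from the Dockerfile
        ["docker", "push", image],                        # upload the image
    ]


if __name__ == "__main__":
    # Hypothetical registry, repository, and tag.
    for cmd in build_and_push_commands("myregistry", "web", "1.0"):
        print(shlex.join(cmd))
```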

Finally, you can deploy your application to your AKS cluster. This usually means creating a Kubernetes Deployment, often together with other resources such as Services and Ingresses. By deploying your application to AKS, you can take advantage of the scaling, load balancing, and self-healing capabilities provided by Kubernetes.
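To make the deployment step concrete, here is a minimal sketch that generates a Deployment manifest as JSON, which `kubectl apply -f` accepts alongside YAML. The application name `web`, the image reference, and port 8080 are illustrative assumptions.

```python
import json


def deployment_manifest(name, image, replicas=2, port=8080):
    """Build a minimal apps/v1 Deployment that runs `replicas` copies of `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference.
    manifest = deployment_manifest("web", "myregistry.azurecr.io/web:1.0")
    print(json.dumps(manifest, indent=2))
```

Redirecting the output to a file such as `deployment.json` and running `kubectl apply -f deployment.json` would create the Deployment.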

Monitoring and Logging in AKS

Monitoring your AKS clusters is crucial for maintaining their health and performance. Azure provides various monitoring tools and services to help you gain insights into your AKS clusters. Container insights, a feature of Azure Monitor (previously called Azure Monitor for containers), collects and analyzes telemetry data from your nodes and containers so you can track the performance and health of your clusters. You can monitor key metrics, set up alerts, and visualize the data using Azure Monitor’s dashboards.
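On an existing cluster, monitoring is typically switched on through the `monitoring` add-on. The sketch below builds that Azure CLI call. The resource names are placeholders, and passing a workspace ID is optional (without it, a default Log Analytics workspace is used).

```python
import shlex


def enable_monitoring(resource_group, cluster, workspace_id=None):
    """Build the az CLI call that enables the monitoring add-on on a cluster."""
    cmd = ["az", "aks", "enable-addons", "--addons", "monitoring",
           "--resource-group", resource_group, "--name", cluster]
    if workspace_id:
        # Send data to a specific Log Analytics workspace instead of the default.
        cmd += ["--workspace-resource-id", workspace_id]
    return cmd


if __name__ == "__main__":
    # Placeholder resource names.
    print(shlex.join(enable_monitoring("demo-rg", "demo-aks")))
```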

In addition to monitoring, it is important to set up logging for your AKS cluster. Logging allows you to capture and analyze the log data generated by your applications running in AKS. By configuring logging, you can track down issues, troubleshoot problems, and gain visibility into the behavior of your applications.

AKS integrates with Azure Monitor Logs, which stores log data in a Log Analytics workspace, and with Application Insights for application-level telemetry. By setting up logging in AKS, you can have a centralized location to view and analyze log data from multiple sources.

Scaling Applications in AKS

In addition to scaling your AKS cluster, you can also scale the applications running on it. Kubernetes provides several options for scaling applications within AKS.

Horizontal pod autoscaling (HPA) is a feature of Kubernetes that automatically scales the number of pods in a deployment based on CPU utilization or custom metrics. With HPA, you can define a target CPU utilization or custom metric value, and Kubernetes will automatically adjust the number of pods to maintain that target.
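The sketch below shows both halves of this: an `autoscaling/v2` HorizontalPodAutoscaler manifest targeting CPU utilization, and the core scaling rule Kubernetes documents, `ceil(currentReplicas * currentMetric / targetMetric)`. The deployment name `web` and the numbers are assumptions. The real controller also applies a tolerance band and stabilization windows, which this simplified function ignores.

```python
import json
import math


def hpa_manifest(deployment, min_replicas, max_replicas, cpu_percent):
    """HorizontalPodAutoscaler that targets average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {"name": "cpu",
                             "target": {"type": "Utilization",
                                        "averageUtilization": cpu_percent}},
            }],
        },
    }


def desired_replicas(current, observed_cpu, target_cpu, min_replicas, max_replicas):
    """Simplified HPA rule: scale proportionally, then clamp to the bounds."""
    wanted = math.ceil(current * observed_cpu / target_cpu)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    print(json.dumps(hpa_manifest("web", 2, 10, 60), indent=2))
    # 4 pods at 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60, 2, 10))  # prints 6
```

CPU-based autoscaling only works when the pods declare CPU requests, because utilization is measured relative to the requested amount.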

Vertical pod autoscaling (VPA) is an optional component, not part of core Kubernetes, that adjusts the CPU and memory requests of pods based on their observed resource usage over time. VPA can recommend new values or apply them automatically, which helps optimize resource utilization and improve performance. It must be enabled on the cluster before you can use it.

In addition to autoscaling, you can also manually scale your applications by adjusting the number of replicas for a deployment. This gives you more control over the scaling process and allows you to fine-tune the performance of your applications based on your specific requirements.

Updating and Rolling Back Applications in AKS

As your applications evolve, you may need to update them with new features, bug fixes, or security patches. Kubernetes provides a seamless way to update the applications running in AKS.

Updating a deployed application involves creating a new version of the Docker image, modifying the deployment configuration to use the new image, and then rolling out the update to the running pods. Kubernetes supports rolling updates, which means the update is applied gradually to the pods, ensuring high availability and minimizing downtime.

In case an update introduces issues or errors, Kubernetes also provides the ability to roll back to a previous version of the application. This allows you to quickly revert the changes and restore the application to a known working state.
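The update and rollback flow described above corresponds to a handful of `kubectl` commands. The sketch below assembles them. The deployment name `web`, the container name, and the image tag are hypothetical.

```python
import shlex


def update_commands(deployment, container, new_image):
    """Point a container at a new image, then wait for the rollout to finish."""
    target = f"deployment/{deployment}"
    return [
        ["kubectl", "set", "image", target, f"{container}={new_image}"],
        ["kubectl", "rollout", "status", target],
    ]


def rollback_command(deployment, revision=None):
    """Revert to the previous revision, or to a specific one if given."""
    cmd = ["kubectl", "rollout", "undo", f"deployment/{deployment}"]
    if revision is not None:
        cmd.append(f"--to-revision={revision}")
    return cmd


if __name__ == "__main__":
    # Hypothetical deployment, container, and image.
    for cmd in update_commands("web", "web", "myregistry.azurecr.io/web:1.1"):
        print(shlex.join(cmd))
    print(shlex.join(rollback_command("web")))
```

Running `kubectl rollout history deployment/web` lists the revisions that `--to-revision` can refer to.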

By leveraging Kubernetes’ update and rollback capabilities, you can easily manage the lifecycle of your applications in AKS and ensure that they are always up to date and running smoothly.

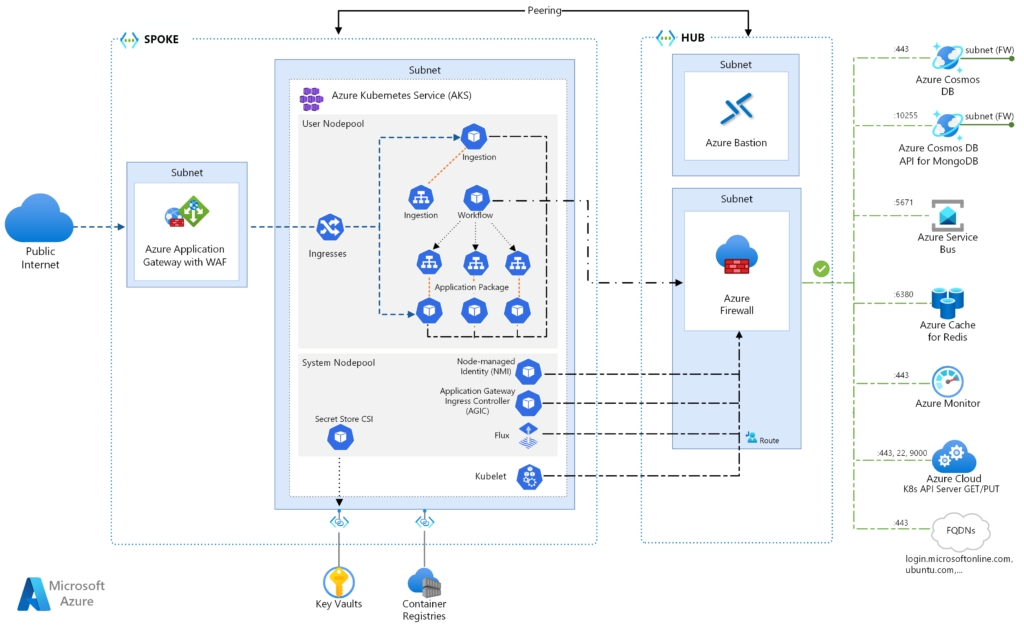

Securing AKS Cluster and Applications

Security is a critical aspect of managing and operating an AKS cluster and the applications running on it. Azure provides several features and best practices to help you secure your AKS cluster and applications.

One important security feature is Role-Based Access Control (RBAC), which allows you to control access to your AKS cluster and its resources. RBAC enables you to assign specific roles and permissions to users, groups, or applications, ensuring that only authorized entities can view or modify resources within the cluster.
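As an example of what such a role assignment looks like in Kubernetes RBAC, the sketch below generates a Role that can only read pods in one namespace and a RoleBinding that grants it to a single user. The namespace `dev` and the user name are hypothetical. How user names appear depends on how the cluster authenticates users.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace):
    """Role allowing read-only access to pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        # "" is the core API group, where pods live.
        "rules": [{"apiGroups": [""], "resources": ["pods"],
                   "verbs": ["get", "list", "watch"]}],
    }


def bind_role_to_user(namespace, user):
    """RoleBinding that grants the pod-reader Role to a single user."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "read-pods", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    # Hypothetical namespace and user; a List lets kubectl apply both at once.
    items = [pod_reader_role("dev"), bind_role_to_user("dev", "dev@example.com")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```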

Azure Active Directory (Azure AD), now named Microsoft Entra ID, can also be integrated with your cluster. With this integration, you use directory identities to authenticate users and applications, and combine them with RBAC to authorize what they can do. This provides a more secure and centralized approach to identity and access management within your AKS cluster.

Enabling network policies is also important for securing your AKS cluster. Network policies allow you to define and enforce connectivity rules between pods based on their namespaces or labels. In AKS, these rules are only enforced when a network policy engine, such as Azure Network Policy Manager or Calico, is enabled on the cluster. By configuring network policies, you can control the flow of network traffic within your cluster and prevent unauthorized access to sensitive resources.
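A small example helps show how label-based rules work. The sketch below generates a NetworkPolicy that lets only pods labeled `app=api` reach pods labeled `app=db` on one TCP port. The labels, the namespace, and port 5432 are assumptions for illustration.

```python
import json


def allow_ingress_policy(namespace, target_app, allowed_app, port):
    """Allow ingress to `target_app` pods only from `allowed_app` pods on one port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target_app}-allow-{allowed_app}",
                     "namespace": namespace},
        "spec": {
            # Pods this policy protects.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                # Only traffic from these pods, on this port, is allowed in.
                "from": [{"podSelector": {"matchLabels": {"app": allowed_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical labels: only the API pods may talk to the database pods.
    print(json.dumps(allow_ingress_policy("prod", "db", "api", 5432), indent=2))
```

Once a pod is selected by an ingress policy, any ingress traffic not explicitly allowed by some policy is dropped.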

By implementing these security measures and following best practices, you can ensure that your AKS cluster and applications are protected from unauthorized access and potential security threats.

Networking in AKS

Networking plays a crucial role in connecting and exposing services within an AKS cluster. Azure provides several networking options and features to help you configure and manage the networking aspects of your AKS cluster.

Configuring load balancers is a key networking task when working with AKS. Azure Load Balancer can be used to distribute traffic to the backend nodes in your AKS cluster. With load balancer configurations, you can ensure high availability, scale your applications, and handle incoming traffic efficiently.

Exposing services externally is another important networking consideration. AKS provides different options to expose your applications or services to the outside world. Ingress controllers and Azure Application Gateway can be used to route traffic to the appropriate services within your AKS cluster based on rules and configurations.
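The two exposure options above can be sketched as manifests. A Service of type `LoadBalancer` asks Azure for a load balancer in front of the pods, while an Ingress routes HTTP traffic by host and path to a Service inside the cluster. The names, the host `app.example.com`, and the ingress class `nginx` are assumptions. The class name depends on which ingress controller is installed.

```python
import json


def service_manifest(name, service_type="LoadBalancer", port=80, target_port=8080):
    """Service that forwards `port` to `target_port` on pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def ingress_manifest(name, host, service, ingress_class, service_port=80):
    """Ingress that routes all paths under `host` to one backend Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": service_port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    # Option 1: expose the app directly through an Azure load balancer.
    print(json.dumps(service_manifest("web"), indent=2))
    # Option 2: keep the Service internal and route to it through an Ingress.
    print(json.dumps(service_manifest("web", service_type="ClusterIP"), indent=2))
    print(json.dumps(ingress_manifest("web", "app.example.com", "web", "nginx"), indent=2))
```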

Azure Virtual Network integration allows you to connect your AKS cluster securely to your virtual network resources. This integration provides increased security and isolation for your AKS cluster and allows you to leverage Azure’s network security features like network security groups and virtual private networks.

By utilizing these networking features and options, you can effectively configure and manage the connectivity and communication within your AKS cluster.

Stateful Applications in AKS

Stateful applications have specific requirements when it comes to data storage and persistence. Azure Kubernetes Service provides features and resources to support stateful applications running in AKS.

Persistent volumes and persistent volume claims enable you to provision and manage durable storage for your AKS cluster. Persistent volumes are storage options that have a lifecycle independent of any particular pod, allowing data to persist even if a pod is terminated. Persistent volume claims are used to request specific storage resources for a pod and map them to available persistent volumes.

StatefulSet resources are another key component when running stateful applications in AKS. StatefulSets provide guarantees about the ordering and uniqueness of pod deployment and scaling. They also enable the use of stable network identities and persistent storage volumes for each pod.
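To tie persistent volume claims and StatefulSets together, the sketch below generates a StatefulSet whose `volumeClaimTemplates` entry gives every pod its own claim. The image, mount path, and size are hypothetical, and `managed-csi` is assumed to be an available storage class (it is one of the classes AKS normally ships with). A headless Service with the same name as `serviceName` is also needed and is not shown here.

```python
import json


def statefulset_manifest(name, image, replicas, mount_path, size,
                         storage_class="managed-csi"):
    """StatefulSet in which each pod gets its own PersistentVolumeClaim."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,  # headless Service that provides stable DNS names
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                }]},
            },
            # One claim per pod is created from this template.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": size}},
                },
            }],
        },
    }


def pod_names(name, replicas):
    """StatefulSet pods get stable, ordered names: <name>-0, <name>-1, ..."""
    return [f"{name}-{i}" for i in range(replicas)]


if __name__ == "__main__":
    # Hypothetical image and mount path.
    sts = statefulset_manifest("db", "myregistry.azurecr.io/db:1.0", 3, "/var/lib/data", "10Gi")
    print(json.dumps(sts, indent=2))
    print(pod_names("db", 3))  # ['db-0', 'db-1', 'db-2']
```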

Data replication and backups should also be considered when dealing with stateful applications. Azure storage options such as Azure managed disks and Azure Files store your data redundantly (for example, with locally redundant or zone-redundant storage), which keeps it durable and available when hardware fails. Redundancy does not protect against accidental deletion or data corruption, so you should also set up backup and restore processes for your data.

By utilizing these features and resources, you can confidently run stateful applications in AKS and ensure data durability and availability.

Troubleshooting and Debugging in AKS

Troubleshooting and debugging are inevitable tasks when working with any complex system, including AKS clusters. Azure provides tools and techniques to help you identify and resolve issues that may arise.

Analyzing AKS logs is an important troubleshooting step. AKS generates various logs, including cluster-level logs, pod logs, and container logs. By analyzing these logs, you can gain insights into the behavior of your applications, diagnose problems, and identify potential bottlenecks or errors.
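A typical first pass through these logs uses a few standard `kubectl` commands. The sketch below lists them in a sensible order for one misbehaving pod. The pod name `web-abc123` and the namespace are placeholders.

```python
import shlex


def triage_commands(pod, namespace="default", container=None, tail=100):
    """Commands for a first look at a misbehaving pod, from broad to specific."""
    logs = ["kubectl", "logs", pod, "--namespace", namespace, f"--tail={tail}"]
    if container:
        # Needed when the pod runs more than one container.
        logs += ["--container", container]
    return [
        # Pod status, restart counts, and the node each pod runs on.
        ["kubectl", "get", "pods", "--namespace", namespace, "--output", "wide"],
        # Scheduling, image pull, and probe events for this pod.
        ["kubectl", "describe", "pod", pod, "--namespace", namespace],
        # Recent output from the current container instance.
        logs,
        # Output from the previous instance, useful after a crash or restart.
        logs + ["--previous"],
        # Namespace events in chronological order.
        ["kubectl", "get", "events", "--namespace", namespace,
         "--sort-by=.metadata.creationTimestamp"],
    ]


if __name__ == "__main__":
    # Placeholder pod name and namespace.
    for cmd in triage_commands("web-abc123", namespace="prod"):
        print(shlex.join(cmd))
```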

Common issues that you may encounter in AKS could be related to networking, resource constraints, or misconfigurations. Troubleshooting these issues involves systematically narrowing down the possible causes and identifying the root cause. Azure provides documentation, community forums, and support resources that can assist in troubleshooting common issues and finding solutions.

Additionally, debugging applications running in AKS may be necessary in certain scenarios. Editors such as Visual Studio Code, with Kubernetes tooling installed, can attach a debugger to the pods or containers running your applications so that you can set breakpoints and step through the code. Azure Monitor complements this with metrics, logs, and traces that help you narrow down where a problem occurs, although it is not itself a debugger.

By following best practices, utilizing available tools, and seeking support when needed, you can effectively troubleshoot and debug your AKS clusters and applications.