In this article, you will learn how Azure Data Factory supports data integration and ETL (extract, transform, load) processes. With Azure Data Factory, you can orchestrate and automate the movement and transformation of data across various sources, whether they are on-premises or in the cloud. It helps organizations bring diverse data sets together reliably and securely, so that the combined data can be analyzed to support informed decision-making.

Overview of Azure Data Factory

Introduction to Azure Data Factory

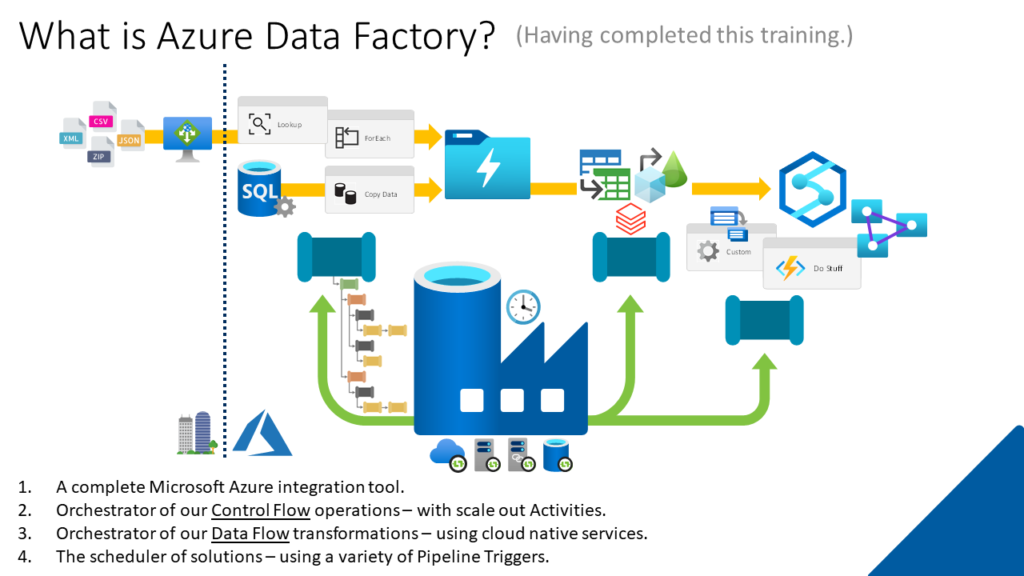

Azure Data Factory is a cloud-based data integration service provided by Microsoft. It enables you to orchestrate and automate data movement and data transformation activities, allowing you to create data pipelines that connect various data sources and destinations. Azure Data Factory simplifies the process of data integration, making it easier to manage and process large volumes of data across different platforms and services.

Key features of Azure Data Factory

Azure Data Factory offers several key features that make it a powerful data integration tool. These features include:

- Data movement: Azure Data Factory supports the movement of data between on-premises data sources, cloud-based data sources, and hybrid environments. It transfers data using a wide range of built-in connectors and protocols.
- Data transformation: With Azure Data Factory, you can apply transformations to your data during the integration process. This includes activities such as filtering, sorting, aggregating, and joining datasets to prepare the data for analytics.
- Data orchestration: Azure Data Factory provides a robust pipeline orchestration capability, allowing you to define and schedule complex data integration workflows. You can create dependencies, parallelism, and conditional activities within your pipelines, enabling you to optimize the flow of data across your systems.
- Monitoring and logging: Azure Data Factory offers built-in monitoring and logging capabilities that allow you to track the progress and performance of your data integration pipelines. You can monitor execution status, troubleshoot issues, and, when the factory is connected to Microsoft Purview, track data lineage.
- Scalability and elasticity: As a cloud-based service, Azure Data Factory provides the scalability and elasticity needed to handle large volumes of data. It can automatically scale up or down based on your workload, which helps keep your data integration processes efficient and cost-effective.

Data Integration with Azure Data Factory

Understanding data integration

Data integration refers to the process of combining data from different sources into a unified view, ensuring that the data is accurate, consistent, and reliable. In today’s data-driven world, organizations collect data from multiple systems, databases, and applications. Data integration enables businesses to consolidate, transform, and analyze this data to gain valuable insights and make informed decisions.

Importance of data integration

Data integration is crucial for organizations seeking to leverage their data assets effectively. By integrating data from various sources, organizations can eliminate data silos and gain a holistic view of their operations. This enables them to:

- Improve data quality: Data integration allows organizations to cleanse and standardize their data, ensuring consistency and accuracy. By eliminating duplicates and resolving inconsistencies, businesses can trust the integrity of their data for analysis and reporting.
- Enable data-driven decision-making: Integrated data provides a comprehensive and accurate picture of business operations, enabling organizations to make informed decisions based on data-driven insights. By combining data from different sources, organizations can uncover valuable correlations and patterns that would be impossible to identify when analyzing data in isolation.
- Enhance operational efficiency: Data integration simplifies the process of data sharing and collaboration across different departments and systems. By making relevant data available to the right people at the right time, organizations can streamline their operations, improve productivity, and reduce manual effort.

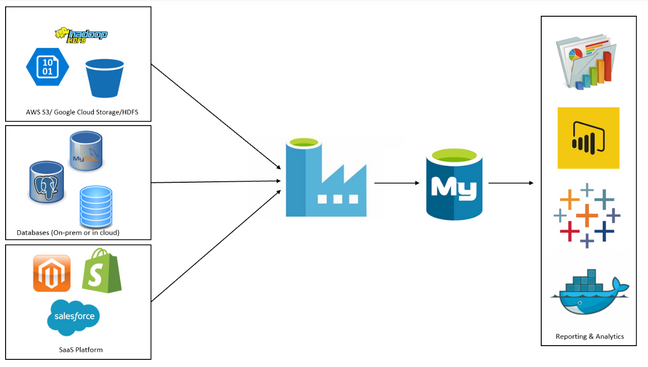

Azure Data Factory as a data integration tool

Azure Data Factory offers a robust and flexible platform for data integration. It allows organizations to connect to a wide range of data sources, transform and process the data, and load it into the desired destination. Azure Data Factory’s integration with other Azure services, such as Azure Synapse Analytics and Azure Machine Learning, further enhances its capabilities for comprehensive data integration solutions.

Azure Data Factory provides a visual interface for designing and orchestrating data integration pipelines. It supports a wide range of data ingestion, preparation, transformation, and movement activities, allowing you to define and automate complex data workflows with ease. With its scalable and secure infrastructure, Azure Data Factory is well-suited for handling large volumes of data and performing data integration at scale.

Components of Azure Data Factory

Data factory

The data factory is the top-level management entity in Azure Data Factory. It acts as a container for organizing and managing all the resources associated with your data integration solution. Within a data factory, you can define and configure pipelines, data flows, linked services, triggers, integration runtimes, and other components.

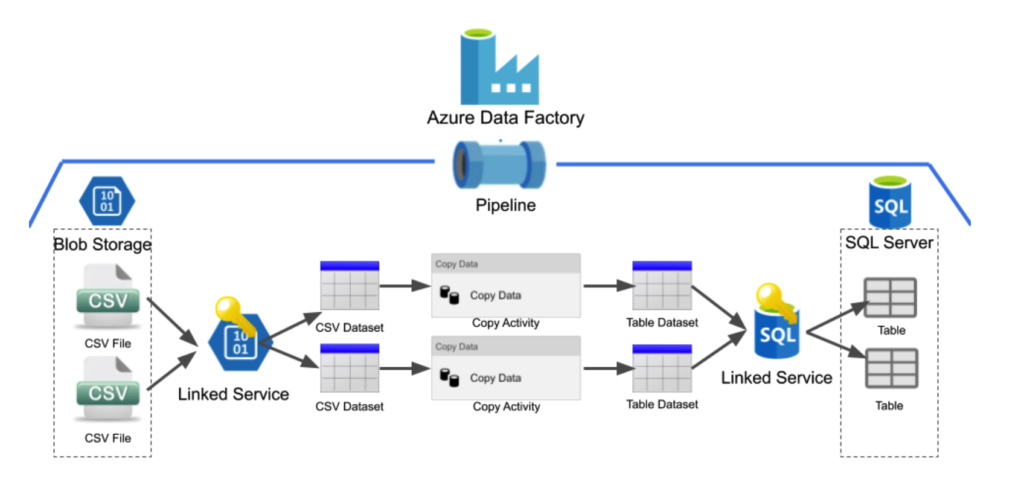

Pipeline

A pipeline is a logical grouping of activities that together perform a data integration task. Activities can be chained so that they run in sequence, or left independent so that they run in parallel. Pipelines can include activities for data ingestion, transformation, movement, and other data integration operations.

Activity

An activity represents a single action within a pipeline. It can be a data transformation activity, a data movement activity, or a control activity. Activities define the individual tasks that need to be performed to complete a specific operation within a pipeline.
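
To make the relationship between a pipeline and its activities concrete, here is a minimal Python sketch that builds a pipeline definition as a plain dictionary and serializes it to JSON, the format in which Data Factory stores pipeline definitions. The field names (`activities`, `dependsOn`, `dependencyConditions`, `typeProperties`) follow the pipeline JSON format as it is commonly documented, but every resource name (`CopyRawSales`, `RawSalesCsv`, `CleanSalesFlow`, and so on) is hypothetical and the source and sink settings are abbreviated, so treat it as an illustration rather than a deployable definition.

```python
import json

# All names below are hypothetical. The structure mirrors the general
# shape of a pipeline definition; it is not a complete resource.
copy_activity = {
    "name": "CopyRawSales",
    "type": "Copy",  # a data movement activity
    "inputs": [{"referenceName": "RawSalesCsv", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "StagedSalesParquet", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "ParquetSink"},
    },
}

transform_activity = {
    "name": "TransformSales",
    "type": "ExecuteDataFlow",  # a data transformation activity
    # Run only after the copy activity has succeeded.
    "dependsOn": [
        {"activity": "CopyRawSales", "dependencyConditions": ["Succeeded"]}
    ],
    "typeProperties": {
        "dataflow": {"referenceName": "CleanSalesFlow", "type": "DataFlowReference"}
    },
}

pipeline = {
    "name": "DailySalesPipeline",
    "properties": {"activities": [copy_activity, transform_activity]},
}

print(json.dumps(pipeline, indent=2))
```

The second activity names the first in `dependsOn`, which is how the order of execution is expressed; activities without a dependency on each other are free to run in parallel.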

Data flow

A data flow is visually designed data transformation logic that operates on datasets. It lets you build transformations in a code-free experience, and it runs from a pipeline through a data flow activity. Mapping data flows execute on Apache Spark clusters that Azure Data Factory manages, which gives them a scalable way to transform and shape data.

Linked services

A linked service is a connection to an external data source or a destination that you want to interact with in your data integration solution. It contains the necessary information to establish a connection, such as authentication credentials and connection properties. Linked services enable Azure Data Factory to interact with various data sources, including databases, file systems, and cloud storage services.
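
As a rough illustration, a linked service definition can be sketched in Python as a dictionary with a type, its connection properties, and an optional reference to the integration runtime it connects through. The helper `linked_service` and all resource names are hypothetical, the connection strings are placeholders, and the field names (`typeProperties`, `connectVia`) reflect the linked service JSON format as commonly documented; check the connector documentation for the properties each type actually requires.

```python
import json


def linked_service(name, service_type, type_properties, integration_runtime=None):
    """Build a linked service definition as a dict (hypothetical helper)."""
    definition = {
        "name": name,
        "properties": {"type": service_type, "typeProperties": type_properties},
    }
    if integration_runtime is not None:
        # Route the connection through a named integration runtime,
        # for example a self-hosted one inside a private network.
        definition["properties"]["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return definition


# Placeholder connection strings; real credentials should not be inlined.
cloud_store = linked_service(
    "SalesBlobStore",
    "AzureBlobStorage",
    {"connectionString": "<storage-connection-string>"},
)
on_prem_db = linked_service(
    "OnPremSqlServer",
    "SqlServer",
    {"connectionString": "<sql-connection-string>"},
    integration_runtime="OnPremSelfHostedIR",
)

print(json.dumps(on_prem_db, indent=2))
```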

Trigger

A trigger is a mechanism that initiates a pipeline run. Data flows are not triggered directly; they run as activities inside a pipeline. Triggers can fire on a schedule, over fixed tumbling windows, or in response to events such as a file arriving in storage. They enable the automation of data integration workflows by starting pipelines without manual intervention.
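
A trigger that starts a pipeline once a day might be sketched as follows. This is a minimal example under stated assumptions: the helper `daily_schedule_trigger`, the trigger name, and the pipeline name are hypothetical, and the field names (`ScheduleTrigger`, `recurrence`, `pipelineReference`) follow the trigger JSON format as commonly documented.

```python
import json


def daily_schedule_trigger(name, pipeline_name, start_time_utc):
    """Build a once-a-day schedule trigger definition (hypothetical helper)."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,  # every 1 day
                    "startTime": start_time_utc,
                    "timeZone": "UTC",
                }
            },
            # The pipeline(s) this trigger starts.
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


trigger = daily_schedule_trigger(
    "NightlySalesTrigger", "DailySalesPipeline", "2024-01-01T02:00:00Z"
)
print(json.dumps(trigger, indent=2))
```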

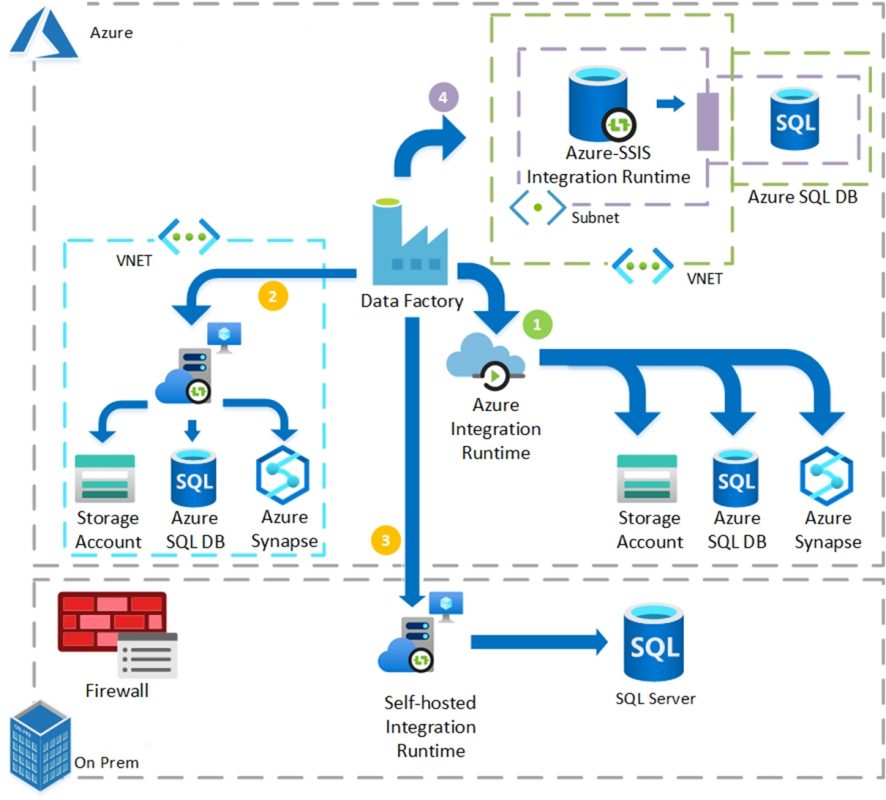

Integration Runtime

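Integration Runtime (IR) is the compute infrastructure used by Azure Data Factory to perform data integration activities. It provides the resources for executing tasks such as data movement and data transformation. There are three types: the Azure integration runtime, which is fully managed; the self-hosted integration runtime, which you install on your own machines to reach private or on-premises networks; and the Azure-SSIS integration runtime, which runs SQL Server Integration Services packages. Which one you use depends on your requirements and connectivity options.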

Data Integration Process in Azure Data Factory

Data ingestion

Data ingestion is the process of collecting data from source systems and bringing it into your data platform. Azure Data Factory supports data ingestion from various sources, including on-premises databases, cloud storage services, and software-as-a-service (SaaS) applications. Azure Data Factory does not store the data itself: a copy activity extracts the data from the source system and writes it to a destination you choose, such as a data lake or a staging area, optionally passing through interim staging storage on the way.

Data preparation

Data preparation involves cleaning, transforming, and validating the ingested data to ensure its consistency and quality. Azure Data Factory provides a range of data transformation activities that facilitate data preparation, such as filtering, sorting, aggregating, and joining datasets. This ensures that the data is accurate, complete, and ready for further processing.

Data transformation

Data transformation involves manipulating and enriching the data to derive meaningful insights and perform data analytics. Azure Data Factory offers a wide range of data transformation activities, including data mapping, data conversion, data enrichment, and data masking. These activities enable you to transform the data based on your specific business requirements and create a unified and consistent view of your data.

Data movement

Data movement refers to the process of transferring data from the source to the destination. Azure Data Factory supports data movement across various platforms and services, including on-premises systems, cloud-based systems, and hybrid environments. It provides built-in connectors for popular data sources and destinations, enabling seamless and efficient data transfers.

Creating Data Integration Pipelines

Designing a data integration pipeline

Designing a data integration pipeline involves mapping out the flow of data from the source to the destination. Azure Data Factory provides a visual interface that allows you to drag and drop activities, define dependencies, and configure execution settings. You can define the sequence of activities, specify the input and output datasets, and configure data transformations and movements to create a comprehensive data integration pipeline.
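
When sketching a pipeline design, it can help to see which activities are able to run side by side and which must wait. The following Python example groups activities into "waves" based on their declared dependencies, using the standard library's `graphlib` module (Python 3.9 or later). It is a planning aid only, not how Azure Data Factory schedules work internally; the activity names are hypothetical and the `dependsOn` shape mirrors the field commonly used in pipeline JSON.

```python
from graphlib import TopologicalSorter


def execution_waves(activities):
    """Group activity names into waves. Every activity in a wave has all of
    its dependencies in earlier waves, so a wave could run in parallel."""
    graph = {
        a["name"]: {d["activity"] for d in a.get("dependsOn", [])}
        for a in activities
    }
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical activities: two independent copies, a join, then a load.
activities = [
    {"name": "CopyOrders"},
    {"name": "CopyCustomers"},
    {"name": "JoinOrdersCustomers", "dependsOn": [
        {"activity": "CopyOrders", "dependencyConditions": ["Succeeded"]},
        {"activity": "CopyCustomers", "dependencyConditions": ["Succeeded"]},
    ]},
    {"name": "LoadWarehouse", "dependsOn": [
        {"activity": "JoinOrdersCustomers", "dependencyConditions": ["Succeeded"]},
    ]},
]

print(execution_waves(activities))
# [['CopyCustomers', 'CopyOrders'], ['JoinOrdersCustomers'], ['LoadWarehouse']]
```

The two copy activities land in the same wave because neither depends on the other, which is the kind of parallelism worth looking for when laying out a pipeline.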

Defining data sources

Defining data sources involves specifying the connections to the systems from which you want to extract data. This includes providing the required credentials, connection properties, and other relevant information. Azure Data Factory supports a wide range of data sources, including on-premises databases, cloud-based storage services, and SaaS applications.

Setting up data transformations

Data transformations are configured within the activities in your pipeline. For code-free transformations, this is typically a data flow activity that runs a mapping data flow, in which you visually configure filters, aggregations, joins, and other operations on datasets. Azure Data Factory can also hand transformation work to external compute services through dedicated activities. By configuring data transformations, you can tailor the data to meet your specific business needs.

Configuring data sinks

Data sinks represent the destinations where the transformed data is stored. This can be a database, a file system, or a cloud storage service. Azure Data Factory allows you to configure the properties of the data sink, such as the connection details, authentication credentials, and storage settings. By configuring data sinks, you can determine where the data will be loaded and how it will be stored for future analysis and reporting.
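
As an example of what configuring a sink involves, the sketch below builds a dataset definition for Parquet files in a data lake. It is an illustration under assumptions: the helper `parquet_sink_dataset` and all names and paths are hypothetical, and the property names (`linkedServiceName`, `location`, `AzureBlobFSLocation`, `compressionCodec`) follow the dataset JSON format as commonly documented for Parquet on Azure Data Lake Storage Gen2.

```python
import json


def parquet_sink_dataset(name, linked_service_name, file_system, folder_path):
    """Build a Parquet dataset definition for use as a sink (hypothetical helper)."""
    return {
        "name": name,
        "properties": {
            "type": "Parquet",
            # Which connection (linked service) the dataset lives behind.
            "linkedServiceName": {
                "referenceName": linked_service_name,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                # Where in the store the files are written.
                "location": {
                    "type": "AzureBlobFSLocation",
                    "fileSystem": file_system,
                    "folderPath": folder_path,
                },
                "compressionCodec": "snappy",
            },
        },
    }


sink = parquet_sink_dataset(
    "StagedSalesParquet", "SalesDataLake", "curated", "sales/daily"
)
print(json.dumps(sink, indent=2))
```

The dataset separates two concerns: the linked service reference says how to connect, and the location and format settings say where and how the data is stored.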

Mapping Data Flows in Azure Data Factory

What are data flows?

Data flows in Azure Data Factory provide a visual environment for building data transformation logic. They enable you to visually create and configure data transformations using a code-free experience. Data flows offer a range of transformations, such as joins, aggregations, pivots, and data type conversions, that can be applied to datasets.

Building data flows in Azure Data Factory

Building data flows involves creating a visual representation of the data transformation logic. You add transformations to the canvas and connect them to define the flow of data from source to sink. Data flows provide a visual interface for configuring data mappings, data transformations, and data conversions. This allows you to create complex data transformations without writing any code.

Transformation operations in data flows

Data flows support a wide range of transformation operations that can be applied to datasets. These include filtering, sorting, aggregating, merging, and pivoting data. Data flows also support advanced data transformation operations, such as data type conversions, conditional expressions, and data masking. These operations can be configured using a visual interface, making it easy to define and customize the data transformation logic.
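
For readers who think in code, the semantics of a few of these operations can be shown in plain Python. This is not how data flows are authored (they are built visually and run at scale by the service); it only illustrates what a filter, an aggregate, and a sort do to a dataset. The sample rows are made up for the example.

```python
from collections import defaultdict

# Made-up sample rows standing in for a source dataset.
orders = [
    {"region": "EU", "status": "shipped", "amount": 120.0},
    {"region": "EU", "status": "cancelled", "amount": 80.0},
    {"region": "US", "status": "shipped", "amount": 200.0},
    {"region": "EU", "status": "shipped", "amount": 30.0},
]

# Filter: keep only shipped orders.
shipped = [row for row in orders if row["status"] == "shipped"]

# Aggregate: total amount per region.
totals = defaultdict(float)
for row in shipped:
    totals[row["region"]] += row["amount"]

# Sort: regions by total, largest first.
ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)

print(ranked)  # [('US', 200.0), ('EU', 150.0)]
```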

Data Integration Best Practices

Designing for scalability and performance

When designing data integration pipelines, it is important to consider scalability and performance. Here are some best practices to follow:

- Partition data: If you are dealing with large volumes of data, consider partitioning the data to improve parallel processing. This allows for faster and more efficient data movement and transformation.
- Use appropriate data types: Choosing the right data types for your datasets can improve both performance and storage efficiency. Avoid using larger data types than necessary and ensure data consistency across the pipeline.
- Optimize data flow: Analyze the data flow within your pipeline and identify any areas that may cause performance bottlenecks. Consider using caching, filtering, and aggregation techniques to optimize data movement and transformation.

Monitoring and troubleshooting data integration pipelines

Monitoring and troubleshooting are essential aspects of managing data integration pipelines. Here are some best practices to ensure smooth operation:

- Monitor pipeline execution: Utilize the monitoring capabilities of Azure Data Factory to track the execution of pipelines. Monitor execution status, data flow, and any errors or exceptions that occur. This allows you to identify and resolve issues promptly.
- Implement logging and alerting: Enable logging and alerting features to capture important information about pipeline executions. This helps in identifying any performance issues, data validation failures, or anomalies that may occur during the data integration process.
- Implement error handling and retry mechanisms: Design your pipelines to handle errors gracefully. Implement error handling and retry mechanisms to automatically recover from transient failures and ensure the reliability of your data integration processes.
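
The retry and error-handling advice above can be sketched in Python as two small helpers that decorate activity definitions. This is an illustration under assumptions: the helper names and activity names are hypothetical, the activities are abbreviated (their `typeProperties` are omitted), and the field names (`policy`, `retry`, `retryIntervalInSeconds`, `timeout`, and the `Failed` dependency condition) follow the activity JSON format as commonly documented.

```python
import json


def with_retry(activity, retries=3, interval_seconds=60, timeout="0.01:00:00"):
    """Return a copy of the activity with a retry policy attached.
    The timeout uses the d.hh:mm:ss format (here, one hour)."""
    policy = {
        "retry": retries,
        "retryIntervalInSeconds": interval_seconds,
        "timeout": timeout,
    }
    return {**activity, "policy": policy}


def on_failure(handler, failed_activity_name):
    """Return a copy of the handler that runs only if the named activity fails."""
    dependency = {
        "activity": failed_activity_name,
        "dependencyConditions": ["Failed"],
    }
    return {**handler, "dependsOn": [dependency]}


# Hypothetical, abbreviated activities.
copy_step = with_retry({"name": "CopyRawSales", "type": "Copy"})
alert_step = on_failure({"name": "NotifyOnFailure", "type": "WebActivity"}, "CopyRawSales")

print(json.dumps([copy_step, alert_step], indent=2))
```

The retry policy covers transient failures of a single activity, while the failure dependency gives the pipeline a separate path to take once retries are exhausted.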

Security considerations for data integration in Azure Data Factory

When working with sensitive data, it is important to consider security. Here are some best practices for ensuring data security in Azure Data Factory:

- Secure credentials: Use Azure Key Vault to securely store and manage credentials and sensitive information required for accessing data sources and destinations. This ensures that credentials are not exposed and can be centrally managed.
- Implement data encryption: Enable encryption for data at rest and in transit. This ensures that the data remains protected throughout the data integration process.
- Implement access controls: Utilize Azure RBAC (Role-Based Access Control) to control access to Azure Data Factory resources. Apply the principle of least privilege to grant only the necessary permissions to users and groups.
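
To show what keeping credentials out of a definition looks like, the sketch below builds a linked service whose password is a reference to an Azure Key Vault secret rather than a literal value. The helper `key_vault_secret`, the vault linked service name, the secret name, and the connection string are all hypothetical placeholders; the reference shape (`AzureKeyVaultSecret`, `store`, `secretName`) follows the format commonly documented for Key Vault references in linked services.

```python
import json


def key_vault_secret(vault_linked_service, secret_name):
    """Build a reference to a secret held in Azure Key Vault (hypothetical helper)."""
    return {
        "type": "AzureKeyVaultSecret",
        # The Key Vault is itself registered as a linked service.
        "store": {
            "referenceName": vault_linked_service,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


sql_linked_service = {
    "name": "SalesSqlDatabase",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            # Placeholder connection string without any password in it.
            "connectionString": "Server=<server>;Database=<database>;User ID=<user>;",
            # The password is resolved from Key Vault at run time.
            "password": key_vault_secret("CompanyKeyVault", "sales-sql-password"),
        },
    },
}

print(json.dumps(sql_linked_service, indent=2))
```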

Connectivity and Integration with External Systems

Connecting to on-premises data sources

Azure Data Factory provides connectivity options for connecting to on-premises data sources. You can set up a self-hosted integration runtime, which allows Azure Data Factory to securely access data within your on-premises network. This enables seamless integration with your existing infrastructure and allows you to leverage data from on-premises systems in your data integration pipelines.

Integration with Azure Synapse Analytics

Azure Data Factory offers integration with Azure Synapse Analytics, a powerful analytics service that enables data warehousing, big data processing, and data integration. You can utilize Azure Data Factory to move data to and from Azure Synapse Analytics, enabling you to leverage the capabilities of both services for comprehensive data integration and analytics.

Connecting to cloud-based data sources

Azure Data Factory provides a wide range of connectors for connecting to cloud-based data sources, such as Azure Blob Storage, Azure Data Lake Storage, Azure SQL Database, and Azure Cosmos DB. These connectors allow you to seamlessly integrate with cloud-based services and extract data for further processing and analysis.

Data Integration Challenges and Solutions

Common challenges in data integration

Data integration can pose several challenges, including:

- Data complexity: Organizations often deal with diverse and complex data formats, making it challenging to integrate data from different sources.
- Data volume: The ever-increasing volume of data can cause performance and scalability issues in data integration processes.
- Data quality and consistency: Ensuring the quality and consistency of data can be a significant challenge, as data may be stored in different formats and have inconsistent standards.

Addressing data integration challenges with Azure Data Factory

Azure Data Factory provides solutions to these challenges through its robust features and functionalities:

- Diverse data connectivity: Azure Data Factory supports a wide range of connectors to connect to various data sources. This allows organizations to integrate data from diverse sources into a unified view.
- Scalability and performance: Azure Data Factory’s scalable infrastructure ensures that it can handle large volumes of data and execute data integration processes efficiently.
- Data quality and consistency: Azure Data Factory provides data transformation capabilities that enable organizations to standardize and cleanse data during the integration process. This ensures data accuracy and consistent formats across the pipeline.

Optimizing data integration performance

To optimize data integration performance, consider the following:

- Use proper indexing: Ensure that your data sources and destination systems are properly indexed to improve query performance. This can significantly enhance data movement and transformation speeds.
- Parallelize data processing: Leverage Azure Data Factory’s ability to process data in parallel by partitioning the data and distributing it across multiple nodes. This improves overall performance by utilizing available resources efficiently.
- Use optimized data formats: Consider transforming your data into optimized formats, such as Parquet or ORC, to improve data compression, storage efficiency, and query performance.
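
As one possible illustration of the partitioning and parallelism advice, the sketch below builds a copy activity that reads a SQL source in partitions and writes Parquet with several parallel copies. It rests on assumptions: the helper `partitioned_sql_copy`, the activity name, and the column name are hypothetical, dataset references are omitted for brevity, and the settings shown (`partitionOption`, `partitionSettings`, `parallelCopies`) follow the copy activity JSON format as commonly documented. Suitable values depend on the source and the workload, so they should be tuned by measurement.

```python
import json


def partitioned_sql_copy(name, partition_column, parallel_copies=4):
    """Build an abbreviated copy activity that reads a SQL source in
    range partitions and runs several copies in parallel (hypothetical helper)."""
    return {
        "name": name,
        "type": "Copy",
        "typeProperties": {
            "source": {
                "type": "AzureSqlSource",
                # Split the read into ranges of the given column.
                "partitionOption": "DynamicRange",
                "partitionSettings": {"partitionColumnName": partition_column},
            },
            # Write to a columnar, compressed format.
            "sink": {"type": "ParquetSink"},
            "parallelCopies": parallel_copies,
        },
    }


activity = partitioned_sql_copy("CopyOrdersPartitioned", "OrderId", parallel_copies=8)
print(json.dumps(activity, indent=2))
```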

Conclusion

Summary of the benefits of data integration with Azure Data Factory

Azure Data Factory enables organizations to achieve seamless data integration by providing a comprehensive set of features and functionalities. By leveraging Azure Data Factory, organizations can:

- Consolidate data from various sources into a unified view, eliminating data silos and enabling data-driven decision-making.
- Cleanse, transform, and enrich data to ensure its accuracy, consistency, and reliability.
- Orchestrate and automate data integration workflows, reducing manual effort and increasing operational efficiency.
- Scale and handle large volumes of data efficiently and cost-effectively.

Future trends in data integration

The field of data integration continues to evolve rapidly, driven by technological advancements and changing business requirements. Some future trends in data integration include:

- Real-time data integration: Increasingly, organizations require real-time data access and analysis. Future data integration solutions will need to provide efficient methods for continuous data ingestion and processing in real time.
- AI-powered data integration: Artificial intelligence (AI) and machine learning (ML) technologies are being integrated into data integration tools to automate data mapping, transformation, and quality control processes. This enables more accurate and efficient data integration workflows.
- Hybrid and multi-cloud data integration: As organizations adopt hybrid and multi-cloud architectures, data integration solutions will need to integrate data from various cloud and on-premises sources, providing a unified view and enabling data movement across different environments.

In conclusion, Azure Data Factory offers a comprehensive data integration platform that addresses the challenges of modern data integration. With its robust features, scalability, and seamless integration with other Azure services, Azure Data Factory empowers organizations to build efficient and reliable data integration pipelines, enabling them to unlock the full potential of their data assets and make smarter, data-driven decisions.