So you’ve decided to embrace containerization and take your application management to the next level, but where do you start? This guide covers managing containerized applications on GCP Kubernetes Engine. Whether you are a beginner exploring the world of containers or an experienced developer looking for best practices and advanced tips, you should find something useful here. We start with the basics of deploying containers and then cover scaling, networking, storage, security, backups, and cost control on Google Cloud Platform’s Kubernetes Engine, so that you can get the most out of the platform.

1. What is GCP Kubernetes Engine?

1.1 Overview

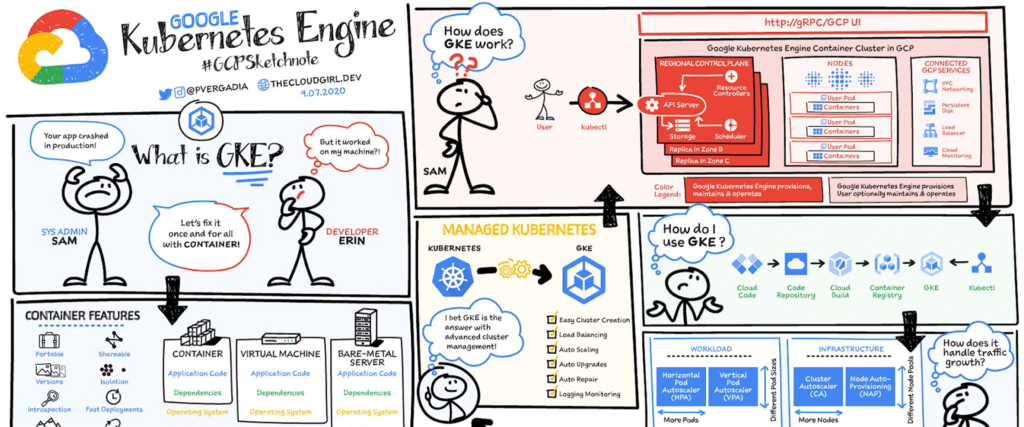

GCP Kubernetes Engine (officially Google Kubernetes Engine, or GKE) is a managed environment provided by Google Cloud Platform (GCP) for deploying, managing, and scaling containerized applications using Kubernetes. Google operates the Kubernetes control plane for you. The service provides reliable, scalable, and secure infrastructure for running containers and simplifies the day-to-day management of containerized applications.

1.2 Features

GCP Kubernetes Engine provides a rich set of features that make it easy to deploy and manage containerized applications. These features include:

- Automated provisioning: GCP Kubernetes Engine provisions and manages the underlying infrastructure required to run your containers, allowing developers to focus on building and deploying their applications.
- Scalability: Kubernetes Engine lets you scale your applications horizontally by adding or removing replicas of your containers based on demand. Your applications can then absorb increased traffic and workload, typically without downtime.
- High availability: Kubernetes Engine keeps applications available by running multiple replicas of your pods across the nodes in a cluster and, with regional clusters, across zones. If a node fails, pods managed by a controller such as a Deployment are automatically rescheduled on other healthy nodes.
- Kubernetes ecosystem: GCP Kubernetes Engine runs standard Kubernetes, so it works with the wider ecosystem of tools and services such as Kubernetes Dashboard, Helm, Prometheus, and Istio.

1.3 Benefits

Deploying and managing containerized applications on GCP Kubernetes Engine offers several benefits:

- Scalability: With autoscaling configured, GCP Kubernetes Engine scales your applications as traffic and workload change, without manual intervention.
- Reliability: Kubernetes Engine builds on Kubernetes’ self-healing behavior to keep your applications highly available and fault-tolerant. It detects failures and reschedules containers on healthy nodes to maintain the desired state.
- Security: GCP Kubernetes Engine provides built-in security features such as network policies, RBAC (Role-Based Access Control), and Pod Security Admission, which help you secure your applications and cluster.
- Cost-effectiveness: GCP Kubernetes Engine uses pay-as-you-go pricing. Features like autoscaling and Spot VMs help you optimize costs, with the trade-off that Spot VMs can be reclaimed at short notice.
- Easy management: GCP Kubernetes Engine simplifies the management of containerized applications by automating many tasks such as provisioning, scaling, and monitoring. You can manage and monitor your applications from the Google Cloud console or with command-line tools such as gcloud and kubectl.

2. Deploying Containers on GCP Kubernetes Engine

2.1 Setting up GCP Kubernetes Engine

Before you can start deploying containers on GCP Kubernetes Engine, you need to set up your environment. This involves creating a GCP project, enabling the Kubernetes Engine API, and configuring your development environment by installing the necessary tools, such as the Google Cloud CLI (gcloud) and kubectl.

2.2 Creating a Kubernetes cluster

Once your environment is set up, you can create a Kubernetes cluster on GCP Kubernetes Engine. A cluster is a group of virtual machines, called nodes, that run your containers. You can customize the cluster’s size, node machine type, and other parameters based on your application requirements.
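Clusters are usually created with the gcloud CLI. The Python sketch below only assembles the `gcloud container clusters create` command line, using the real `--zone`, `--num-nodes`, and `--machine-type` flags. By default it prints the command instead of running it. The cluster name, zone, and machine type are hypothetical placeholders. Actually executing the command requires an installed, authenticated gcloud CLI and an enabled Kubernetes Engine API (`gcloud services enable container.googleapis.com`).

```python
import shlex
import subprocess


def cluster_create_command(name, zone, num_nodes=3, machine_type="e2-standard-4"):
    """Assemble the argv for creating a zonal GKE Standard cluster."""
    return [
        "gcloud", "container", "clusters", "create", name,
        "--zone", zone,
        "--num-nodes", str(num_nodes),
        "--machine-type", machine_type,
    ]


def create_cluster(name, zone, dry_run=True, **options):
    """Print the command (dry run) or execute it with subprocess."""
    argv = cluster_create_command(name, zone, **options)
    if dry_run:
        print(shlex.join(argv))
        return None
    # check=True raises CalledProcessError if gcloud exits with an error.
    return subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical cluster name and zone; replace with your own.
    create_cluster("demo-cluster", "us-central1-a")
```

Building the argument list separately keeps it easy to review or log before you pass `dry_run=False`.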

2.3 Defining container specifications

To deploy containers on GCP Kubernetes Engine, you need to define the specifications for each container. This includes specifying the container image, resource requirements, environment variables, and any other necessary configuration.

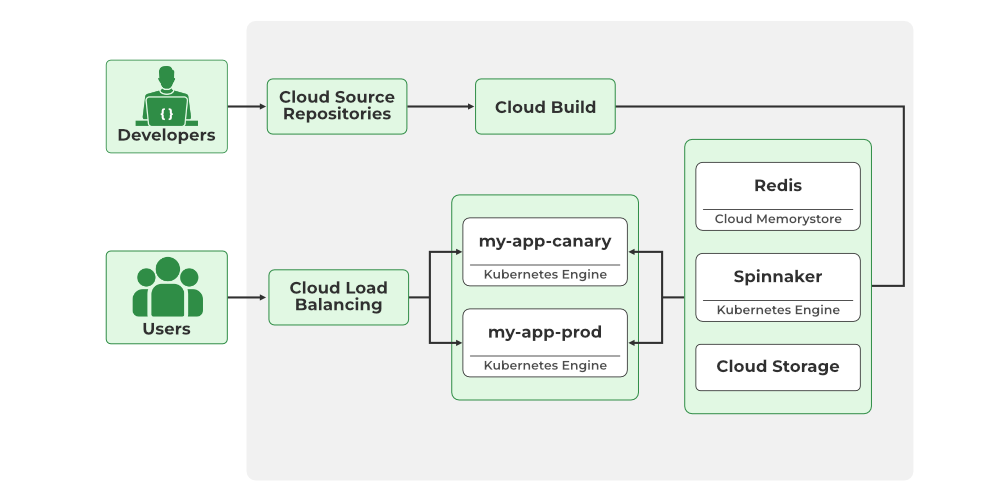
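A container specification normally lives inside a Deployment manifest. The Python sketch below builds one as a plain dict. kubectl accepts JSON as well as YAML, so the printed output can be saved and applied with `kubectl apply -f`. The image path, resource values, and environment variable are hypothetical placeholders chosen for illustration.

```python
import json


def make_deployment(name, image, replicas=2, port=8080, env=None):
    """Return an apps/v1 Deployment manifest as a plain dict."""
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Requests drive scheduling; limits cap what the container may use.
        "resources": {
            "requests": {"cpu": "250m", "memory": "256Mi"},
            "limits": {"memory": "512Mi"},
        },
        "env": [{"name": k, "value": v} for k, v in (env or {}).items()],
    }
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    manifest = make_deployment(
        "web", "us-docker.pkg.dev/my-project/my-repo/web:1.0",  # hypothetical image
        env={"LOG_LEVEL": "info"},
    )
    print(json.dumps(manifest, indent=2))
```

For example, `python make_deployment.py > web.json && kubectl apply -f web.json`.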

2.4 Deploying containers

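Once you have defined the container specifications, you can deploy them to your Kubernetes cluster, typically as a Deployment. Deployments support rolling updates out of the box, replacing pods gradually to minimize downtime. Canary deployments are not a built-in Deployment strategy, but you can build them on top. For example, you can run a second Deployment with the new version alongside the stable one, or use a traffic-splitting tool.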
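With the default RollingUpdate strategy, the `maxSurge` and `maxUnavailable` settings (25% each by default) decide how many extra pods may exist, and how many may be missing, during a rollout. The Python sketch below reproduces the documented rounding rule: percentages for `maxSurge` round up, and percentages for `maxUnavailable` round down. It shows the resulting bounds for a given replica count. This is a simplified model, not the Deployment controller's code.

```python
def _to_count(value, replicas, round_up):
    """Convert an int or an 'NN%' string into an absolute number of pods."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer ceiling/floor division avoids floating-point surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return (minimum available pods, maximum total pods) during a rollout."""
    surge = _to_count(max_surge, replicas, round_up=True)
    unavailable = _to_count(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Both resolving to zero would stall the rollout; allow one unavailable pod.
        unavailable = 1
    return replicas - unavailable, replicas + surge
```

With the defaults and 10 replicas, `rolling_update_bounds(10)` gives `(8, 13)`: at least 8 pods stay available, and at most 13 run at once.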

2.5 Scaling containers

One of the key benefits of using GCP Kubernetes Engine is the ability to scale your containers dynamically based on demand. You can increase or decrease the number of container replicas to handle changes in traffic or workload. Kubernetes Engine automates this process with two features: horizontal pod autoscaling adjusts the number of pod replicas, and cluster autoscaling adds or removes nodes.
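The Kubernetes documentation gives the horizontal pod autoscaler's core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The autoscaler skips scaling when the ratio is within a tolerance (0.1 by default). The Python function below sketches that rule with min/max clamping. The real controller also handles missing metrics, unready pods, and scale-down stabilization, which this sketch leaves out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA scaling rule: proportional scaling, tolerance, clamping."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target scale to `desired_replicas(4, 90, 60) == 6`.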

3. Monitoring and Logging

3.1 Monitoring containerized applications

Monitoring is an essential part of managing containerized applications. GCP Kubernetes Engine integrates with Google Cloud Monitoring, allowing you to monitor the health, performance, and availability of your containers and clusters. You can set up custom metrics, alerts, and dashboards to gain insights into your application’s behavior.

3.2 Collecting logs

In addition to monitoring, GCP Kubernetes Engine also allows you to collect logs from your containers and cluster. Logs are essential for troubleshooting and auditing purposes. Kubernetes Engine integrates with Google Cloud Logging, which enables you to centralize and analyze logs from your containers and other services.
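Logs are easiest to search when they are structured. This example assumes GKE's default logging agent, which parses single-line JSON written to stdout or stderr into a structured payload and recognizes special fields such as `severity` and `message`. Under that assumption, a Python application can emit one JSON object per log line. The extra `logger` field is an arbitrary choice for illustration.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line with 'severity' and 'message' keys."""

    def format(self, record):
        entry = {
            "severity": record.levelname,  # e.g. "INFO", "WARNING", "ERROR"
            "message": record.getMessage(),
            "logger": record.name,
        }
        if record.exc_info:
            entry["stack_trace"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def get_logger(name="app"):
    """Logger that writes JSON lines to stdout, where the node agent collects them."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers[:] = [handler]
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    get_logger().warning("cache miss rate high: %.1f%%", 37.5)
```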

3.3 Analyzing logs

Once logs are collected, you can analyze them in Cloud Logging’s Logs Explorer. Its search and filtering, based on the Logging query language, make it easier to identify issues or anomalies and pinpoint the root cause of a problem.
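Container logs from GKE appear under the `k8s_container` resource type, with labels such as `cluster_name`, `namespace_name`, and `container_name`. The small Python helper below builds a Logging query from those fields. The cluster and namespace names in the usage example are hypothetical.

```python
def gke_log_filter(cluster, namespace, container=None, min_severity="ERROR"):
    """Build a Logging query for one GKE namespace (and optionally one container)."""
    clauses = [
        'resource.type="k8s_container"',
        f'resource.labels.cluster_name="{cluster}"',
        f'resource.labels.namespace_name="{namespace}"',
        f"severity>={min_severity}",
    ]
    if container:
        clauses.append(f'resource.labels.container_name="{container}"')
    return " AND ".join(clauses)


if __name__ == "__main__":
    print(gke_log_filter("demo-cluster", "shop", container="web"))
```

You can paste the resulting string into Logs Explorer or pass it to `gcloud logging read "<filter>" --limit 20`.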

3.4 Troubleshooting

When issues arise, Kubernetes and Kubernetes Engine give you several troubleshooting tools. You can inspect pod status and events (for example, with kubectl describe pod), read container logs with kubectl logs, review cluster events, and, on Standard clusters, SSH into individual nodes for deeper inspection. Combining these tools helps you identify and fix issues in your containerized applications effectively.
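A common first step is to find containers that are crash-looping or restarting repeatedly. The Python sketch below scans the JSON produced by `kubectl get pods -o json` and reads the standard `containerStatuses` fields (`restartCount` and `state.waiting.reason`). The restart threshold of 3 is an arbitrary assumption.

```python
import json

BAD_WAITING_REASONS = ("CrashLoopBackOff", "ImagePullBackOff", "ErrImagePull")


def load_pod_list(path):
    """Read the output of `kubectl get pods -o json` saved to a file."""
    with open(path) as f:
        return json.load(f)


def find_unhealthy_containers(pod_list, restart_threshold=3):
    """Return (pod, container, waiting_reason, restarts) for suspicious containers."""
    problems = []
    for pod in pod_list.get("items", []):
        pod_name = pod["metadata"]["name"]
        for status in pod.get("status", {}).get("containerStatuses", []):
            waiting = status.get("state", {}).get("waiting")
            reason = waiting.get("reason") if waiting else None
            restarts = status.get("restartCount", 0)
            if reason in BAD_WAITING_REASONS or restarts >= restart_threshold:
                problems.append((pod_name, status["name"], reason, restarts))
    return problems
```

For example, run `kubectl get pods -o json > pods.json`, then call `find_unhealthy_containers(load_pod_list("pods.json"))` and follow up on each hit with `kubectl describe pod` and `kubectl logs --previous`.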

4. Managing Traffic

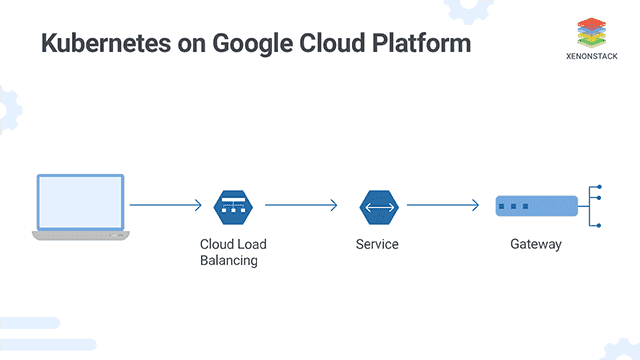

4.1 Load balancing

GCP Kubernetes Engine offers built-in load balancing to distribute incoming traffic across your containers. The Google Cloud load balancer you get depends on the Kubernetes object you create:

- A Service of type LoadBalancer provisions a TCP/UDP network load balancer.
- An Ingress provisions an HTTP(S) load balancer.
- An annotated LoadBalancer Service can request an internal load balancer, reachable only from within your VPC network.
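As a sketch, the Python function below builds a Service of type LoadBalancer as a dict. When asked for an internal load balancer, it adds GKE's `networking.gke.io/load-balancer-type: "Internal"` annotation. The service name, label, and ports are hypothetical placeholders. The printed JSON can be applied with `kubectl apply -f`.

```python
import json


def make_service(name, app_label, port=80, target_port=8080, internal=False):
    """Service of type LoadBalancer; GKE provisions a Google Cloud load balancer for it."""
    metadata = {"name": name}
    if internal:
        # GKE annotation requesting an internal (VPC-only) load balancer.
        metadata["annotations"] = {"networking.gke.io/load-balancer-type": "Internal"}
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": metadata,
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},  # pods that receive the traffic
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_service("web-internal", "web", internal=True), indent=2))
```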

4.2 Ingress and egress control

To control inbound and outbound traffic to your containers, you can use Kubernetes Ingress resources and network policies. An Ingress defines rules for routing external HTTP(S) traffic to specific Services. Egress rules in a NetworkPolicy let you restrict outbound traffic from pods and enforce security policies.
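An Ingress maps hosts and URL paths to backend Services. The Python sketch below builds a `networking.k8s.io/v1` Ingress from a list of `(path_prefix, service_name, service_port)` tuples. The host and service names are hypothetical. GKE's Ingress controller has its own rules for path matching and for how backend Services must be exposed, so check the GKE Ingress documentation for your cluster version before relying on this shape.

```python
import json


def make_ingress(name, host, routes):
    """routes: list of (path_prefix, service_name, service_port) tuples."""
    paths = [
        {
            "path": prefix,
            "pathType": "Prefix",
            "backend": {"service": {"name": svc, "port": {"number": port}}},
        }
        for prefix, svc, port in routes
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {"rules": [{"host": host, "http": {"paths": paths}}]},
    }


if __name__ == "__main__":
    ingress = make_ingress("shop", "shop.example.com",
                           [("/api", "api", 8080), ("/", "web", 80)])
    print(json.dumps(ingress, indent=2))
```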

4.3 Service discovery

Service discovery is crucial for containerized applications deployed on Kubernetes Engine. Kubernetes provides a built-in DNS mechanism that allows containers to discover and communicate with each other using service names instead of hardcoding IP addresses. This makes it easier to manage and scale your applications.
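Cluster DNS gives every Service a name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is `cluster.local` by default. Pods in the same namespace can also use the bare service name. The Python sketch below builds that name and resolves it. The `orders` and `shop` names are hypothetical, and resolution only succeeds from inside the cluster.

```python
import socket


def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Cluster DNS name of a Service: <service>.<namespace>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve_service(service, namespace="default"):
    """Look up the Service's cluster IP; works only from a pod inside the cluster."""
    return socket.gethostbyname(service_fqdn(service, namespace))
```

For example, a pod in any namespace can reach `http://orders.shop.svc.cluster.local` without knowing the Service's IP address.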

5. Configuring Network Policies

5.1 Network policy basics

Network policies allow you to define and enforce rules governing network traffic within your Kubernetes cluster. These policies help you control inbound and outbound traffic based on criteria such as pod labels, namespaces, IP address ranges, ports, and protocols. With network policies, you can enhance the security and isolation of your containerized applications.

5.2 Defining network policies

To define network policies, you use the Kubernetes NetworkPolicy API. A NetworkPolicy selects a set of pods by label and specifies which other pods, namespaces, or IP ranges may communicate with them. NetworkPolicy objects are namespaced, so each policy applies to pods in its own namespace, which gives you granular control over network traffic within your cluster.
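A typical policy lets only one tier talk to another. The Python sketch below builds a NetworkPolicy in which pods labelled `app=<target_app>` accept TCP traffic on one port only from pods labelled `app=<source_app>` in the same namespace. The label values and port are hypothetical, and the printed JSON can be applied with `kubectl apply -f`.

```python
import json


def allow_from_app(target_app, source_app, port, namespace="default"):
    """NetworkPolicy: app=<target_app> pods accept TCP <port> only from app=<source_app>."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target_app}-allow-{source_app}", "namespace": namespace},
        "spec": {
            # Pods this policy applies to (and therefore isolates for ingress).
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(allow_from_app("backend", "frontend", 8080), indent=2))
```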

5.3 Enforcing network policies

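Network policies take effect only if network policy enforcement is enabled on the cluster. On GKE, you enable it through the network policy enforcement option or by using GKE Dataplane V2. With enforcement on, a pod that is not selected by any policy accepts all traffic. Once a pod is selected by a policy for a given direction, only traffic explicitly allowed by at least one of the policies that select it is permitted, and all other traffic is dropped. Policies are additive allow-lists with no explicit deny rules. This helps protect your containerized applications from unauthorized access and potential security breaches.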
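Network policy enforcement is easy to get wrong. A pod that no policy selects accepts all traffic. Once a policy selects it, the pod accepts only traffic that some selecting policy allows. The Python sketch below is a deliberately simplified model of how ingress policies combine for one destination pod. It handles only `matchLabels` selectors and explicit port sets, and ignores namespace selectors, IP blocks, egress, and rules without ports. The policy dict shape is a format invented for this sketch, not the Kubernetes API.

```python
def matches(selector, labels):
    """matchLabels semantics: every key/value must be present; {} selects everything."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(dst_labels, src_labels, port, policies):
    """Simplified model of ingress NetworkPolicy evaluation.

    policies: list of {"select": {labels}, "allow": [({source labels}, {ports}), ...]}
    """
    selecting = [p for p in policies if matches(p["select"], dst_labels)]
    if not selecting:
        return True  # pod is not isolated: all ingress is allowed
    # Isolated pod: allowed only if some rule of some selecting policy matches (union).
    return any(
        matches(src_selector, src_labels) and port in ports
        for p in selecting
        for src_selector, ports in p["allow"]
    )
```

A policy with an empty selector and no allow rules, `{"select": {}, "allow": []}`, acts as a default-deny for every pod in the namespace.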

6. Managing Storage

6.1 Persistent volumes and claims

Containerized applications often require persistent storage for data that must survive container restarts or moves. GCP Kubernetes Engine supports Kubernetes PersistentVolumes (PVs) and PersistentVolumeClaims (PVCs). A pod requests storage through a claim, and the claim is bound to a persistent volume (backed, for example, by a Compute Engine persistent disk). The pod mounts the claim as a volume, so the data is retained across container restarts and pod rescheduling.
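The Python sketch below builds a PersistentVolumeClaim and a pod that mounts it. It assumes a StorageClass named `standard-rwo`, which GKE clusters commonly provide; run `kubectl get storageclass` to see what your cluster has. The claim name, size, image, and mount path are hypothetical.

```python
def make_pvc(name, size="10Gi", storage_class="standard-rwo"):
    """PersistentVolumeClaim requesting a single-node read-write volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_with_claim(pod_name, image, claim_name, mount_path="/data"):
    """Pod that mounts the claim; the volume name links 'volumes' and 'volumeMounts'."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            "volumes": [{"name": "data",
                         "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }
```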

6.2 Dynamic provisioning

Dynamic provisioning is a Kubernetes feature, used by default on Kubernetes Engine, that creates storage resources such as persistent volumes on demand. When a PersistentVolumeClaim references a StorageClass, the matching disk is created automatically. With dynamic provisioning, you don’t have to provision storage resources manually, which reduces administrative overhead and makes it easier to manage storage for your containers.
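A StorageClass tells the provisioner what kind of disk to create. The Python sketch below builds one for the Compute Engine Persistent Disk CSI driver (`pd.csi.storage.gke.io`) with a `pd-balanced` disk type. The class name is a hypothetical placeholder. `WaitForFirstConsumer` delays disk creation until a pod is scheduled, so the disk lands in that pod's zone.

```python
import json


def make_storage_class(name="balanced-expandable", disk_type="pd-balanced"):
    """StorageClass for dynamically provisioned Persistent Disk volumes."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "pd.csi.storage.gke.io",  # Persistent Disk CSI driver
        "parameters": {"type": disk_type},
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
        "reclaimPolicy": "Delete",  # delete the disk when the claim is deleted
    }


if __name__ == "__main__":
    print(json.dumps(make_storage_class(), indent=2))
```

Any PersistentVolumeClaim that sets `storageClassName: balanced-expandable` then gets a disk created for it automatically.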

6.3 Snapshotting and cloning

In addition to dynamic provisioning, GCP Kubernetes Engine also supports snapshotting and cloning of persistent volumes. Snapshots allow you to create point-in-time copies of your volumes, which can be used for backups or testing purposes. Cloning enables you to create new persistent volumes from existing ones, saving time and resources when provisioning storage for new containers.
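Snapshots and clones are expressed as Kubernetes objects. A `VolumeSnapshot` captures a PVC, and a new PVC can name either a snapshot (restore) or an existing PVC (clone) in its `dataSource`. The Python sketch below builds both. It assumes that a VolumeSnapshotClass named `pd-snapshot-class` (a hypothetical name) exists for the Persistent Disk CSI driver. Clones generally need to stay in the same namespace and storage class as their source.

```python
def make_snapshot(name, pvc_name, snapshot_class="pd-snapshot-class"):
    """Point-in-time VolumeSnapshot of an existing PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,  # hypothetical class name
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


def pvc_from_source(name, size, source_name, source_kind, storage_class="standard-rwo"):
    """New PVC populated from a VolumeSnapshot (restore) or another PVC (clone)."""
    data_source = {"name": source_name, "kind": source_kind}
    if source_kind == "VolumeSnapshot":
        # Snapshots live in a separate API group; core PVCs need no apiGroup.
        data_source["apiGroup"] = "snapshot.storage.k8s.io"
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
            "dataSource": data_source,
        },
    }
```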

7. Security Best Practices

7.1 Securing the Kubernetes cluster

Securing your Kubernetes cluster is essential to protect your containerized applications from unauthorized access and potential security vulnerabilities. Some best practices for securing your Kubernetes cluster on GCP Kubernetes Engine include enabling RBAC, using network policies to limit access, restricting container privileges, and regularly applying security patches and updates.
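Restricting container privileges is done mostly through each container's `securityContext`. The Python sketch below builds a container spec that runs as a non-root user, blocks privilege escalation, drops all Linux capabilities, mounts the root filesystem read-only, and uses the runtime's default seccomp profile. The UID 10001 is an arbitrary assumption, and the image must be built to run as that user.

```python
def hardened_container(name, image, uid=10001):
    """Container spec with a restrictive securityContext."""
    return {
        "name": name,
        "image": image,
        "securityContext": {
            "runAsNonRoot": True,
            "runAsUser": uid,  # assumed non-root UID baked into the image
            "allowPrivilegeEscalation": False,
            "privileged": False,
            "readOnlyRootFilesystem": True,
            "capabilities": {"drop": ["ALL"]},
            "seccompProfile": {"type": "RuntimeDefault"},
        },
    }
```

With a read-only root filesystem, applications that write temporary files usually need an `emptyDir` volume mounted at a path such as `/tmp`.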

7.2 Securing containerized applications

In addition to securing the cluster, it is crucial to implement security measures at the application level. This includes hardening containers by removing unnecessary services, using trusted base images, and scanning container images for vulnerabilities. GCP Kubernetes Engine integrates with Security Command Center to help you identify and mitigate security risks in your containerized applications.

7.3 Implementing RBAC

Role-Based Access Control (RBAC) is a core Kubernetes security feature, supported on Kubernetes Engine, that lets you define granular access control policies for users, groups, and service accounts. RBAC lets you grant roles and permissions based on each user’s responsibilities, so that only authorized users can access the cluster and its resources.
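In RBAC, a Role lists allowed verbs on resources in one namespace, and a RoleBinding grants that Role to subjects. The Python sketch below builds a read-only "pod-reader" Role and binds it to a user. On GKE, users are typically identified by their Google account email. The namespace and email address are hypothetical.

```python
def read_only_pod_role(namespace):
    """Role allowing read-only access to pods and their logs in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group
            "resources": ["pods", "pods/log"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def bind_role_to_user(role, user_email):
    """RoleBinding granting the given Role to a single user."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role['metadata']['name']}-binding",
                     "namespace": role["metadata"]["namespace"]},
        "subjects": [{"kind": "User", "name": user_email,
                      "apiGroup": "rbac.authorization.k8s.io"}],
        "roleRef": {"kind": "Role", "name": role["metadata"]["name"],
                    "apiGroup": "rbac.authorization.k8s.io"},
    }
```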

7.4 Using security policies

Kubernetes Engine provides policy controls that let you define and enforce security requirements for your cluster and applications. For example, Pod Security Admission, the built-in replacement for the PodSecurityPolicy API that was removed in Kubernetes 1.25, can reject privileged or otherwise unsafe pods on a per-namespace basis. Binary Authorization can enforce admission checks on container images. Combined with network policies, these controls help keep your containerized applications in a secure and compliant environment.
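Pod Security Admission is configured with namespace labels. The `pod-security.kubernetes.io/enforce` label rejects pods that violate the chosen level, and the `warn` label only reports violations. The Python sketch below builds a Namespace labelled for one of the three standard levels. The namespace name is hypothetical.

```python
import json

POD_SECURITY_LEVELS = ("privileged", "baseline", "restricted")


def secured_namespace(name, level="restricted"):
    """Namespace labelled for Pod Security Admission."""
    if level not in POD_SECURITY_LEVELS:
        raise ValueError(f"level must be one of {POD_SECURITY_LEVELS}")
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {
                "pod-security.kubernetes.io/enforce": level,  # reject violating pods
                "pod-security.kubernetes.io/warn": level,     # warn clients as well
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(secured_namespace("payments"), indent=2))
```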

8. Upgrading and Patching

8.1 Upgrading Kubernetes cluster

Regularly upgrading your Kubernetes cluster is crucial to ensure that you have the latest features, bug fixes, and security patches. GCP Kubernetes Engine provides an automated upgrade process designed to minimize disruption during the transition to a new version. You can control when automatic upgrades happen with release channels and maintenance windows, or trigger upgrades manually based on your requirements.

8.2 Upgrading containerized applications

In addition to upgrading the cluster, it is important to keep your containerized applications up to date. Kubernetes Engine supports Kubernetes rolling updates, which let you update a Deployment with minimal or no downtime. During a rolling update, pods running the new container image are started gradually while old pods are terminated gracefully. Readiness probes ensure that traffic reaches the new pods only once they are ready to serve it.

8.3 Patching vulnerabilities

With the constantly evolving threat landscape, it is crucial to patch known vulnerabilities in your containerized applications. Google Cloud provides automated vulnerability scanning and notifications for container images, for example through Artifact Analysis for images stored in Artifact Registry. By regularly patching vulnerabilities and rebuilding your container images, you can protect the security and integrity of your applications.

9. Backup and Disaster Recovery

9.1 Data backup strategies

Data backup is a critical aspect of managing containerized applications on GCP Kubernetes Engine. You should have a well-defined backup strategy that includes regular backups of your persistent volumes, databases, and other critical data. You can implement reliable, automated backups with Google Cloud services such as Backup for GKE (which backs up workload configuration and persistent volume data), persistent disk snapshots, and Cloud Storage for exported data. If your databases run on Cloud SQL, its automated backups cover that data.

9.2 Disaster recovery planning

Disaster recovery planning is essential to ensure business continuity in the event of a catastrophic failure. GCP Kubernetes Engine offers regional clusters, which replicate the control plane and nodes across multiple zones within a region. You can also run clusters in several regions to distribute your applications across geographic locations. A robust disaster recovery plan built on these options helps you minimize downtime and data loss in case of a disaster.

9.3 Backup and recovery tools on GCP

GCP offers a wide range of backup and recovery tools that you can use with GCP Kubernetes Engine. These include Backup for GKE for cluster workloads and volumes, Cloud Storage for data backups, and Cloud SQL’s automated backups for managed databases. With these tools, you can implement a comprehensive backup and recovery strategy for your containerized applications.

10. Cost Optimization

10.1 Right-sizing containers

To optimize costs, it is important to right-size your containers based on their resource requirements. GCP Kubernetes Engine lets you specify CPU and memory requests and limits for each container. Requests determine how much node capacity the scheduler reserves for a container (and, in Autopilot mode, what you are billed for), while limits cap what the container may consume. By accurately determining the resource needs of your applications, you can avoid overprovisioning and reduce costs.
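Right-sizing starts with simple arithmetic: how much CPU and memory a workload requests in total. The Python sketch below parses common Kubernetes quantity formats: millicores such as `250m`, binary suffixes (`Ki`, `Mi`, `Gi`, `Ti`), and decimal suffixes (`k`, `M`, `G`, `T`). It then totals requests for a replicated pod. It deliberately ignores rarer formats such as exponent notation, and the sample values are hypothetical.

```python
from fractions import Fraction

# Binary (power-of-two) and decimal suffixes used in Kubernetes memory quantities.
_MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def cpu_cores(quantity):
    """'250m' -> 1/4 core, '2' -> 2 cores (exact Fractions)."""
    if quantity.endswith("m"):
        return Fraction(quantity[:-1]) / 1000
    return Fraction(quantity)


def memory_bytes(quantity):
    """'512Mi' -> 536870912 bytes; a plain number is already bytes."""
    for suffix, factor in _MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Fraction(quantity[: -len(suffix)]) * factor)
    return int(quantity)


def total_requests(containers, replicas):
    """Sum per-container requests and multiply by the replica count."""
    cpu = sum(cpu_cores(c["cpu"]) for c in containers) * replicas
    mem = sum(memory_bytes(c["memory"]) for c in containers) * replicas
    return cpu, mem


if __name__ == "__main__":
    pod = [{"cpu": "250m", "memory": "256Mi"}, {"cpu": "100m", "memory": "64Mi"}]
    cpu, mem = total_requests(pod, replicas=3)
    print(f"{float(cpu):.2f} cores, {mem / 2**20:.0f} MiB")  # 1.05 cores, 960 MiB
```

Comparing such totals with observed usage in Cloud Monitoring shows how much reserved capacity goes unused.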

10.2 Autoscaling

Autoscaling is a powerful feature provided by Kubernetes Engine that allows you to automatically adjust the number of container replicas based on demand. By setting up autoscaling rules, you can ensure that your applications have sufficient resources during peak usage and scale down during periods of low activity. Autoscaling helps optimize costs by dynamically allocating resources as needed.
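Autoscaling rules for pods are written as a HorizontalPodAutoscaler. The Python sketch below builds an `autoscaling/v2` HPA that keeps average CPU utilization near a target. Utilization is measured relative to the containers' CPU requests, so the target Deployment must set requests. The Deployment name and numbers are hypothetical.

```python
import json


def make_hpa(deployment, min_replicas=2, max_replicas=10, cpu_utilization=60):
    """HorizontalPodAutoscaler targeting average CPU utilization of a Deployment."""
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    # Percentage of the pods' CPU requests.
                    "target": {"type": "Utilization",
                               "averageUtilization": cpu_utilization},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_hpa("web"), indent=2))
```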

10.3 Resource quotas

Resource quotas (the Kubernetes ResourceQuota object) let you cap the total resources, such as CPU, memory, and number of pods, that all workloads in a namespace can request or consume. Appropriate quotas prevent resource wastage and help control costs. GCP Kubernetes Engine, through Cloud Monitoring, provides metrics for tracking resource usage so that you can adjust quotas based on actual needs.
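The Python sketch below builds a ResourceQuota that caps total CPU and memory requests and limits and the number of pods in one namespace. The namespace and all numeric values are hypothetical. Once a quota constrains CPU or memory, pods that do not specify the corresponding requests or limits may be rejected unless a LimitRange supplies defaults.

```python
import json


def make_quota(namespace, cpu_requests="10", memory_requests="20Gi",
               cpu_limits="20", memory_limits="40Gi", max_pods=50):
    """Namespace-wide ResourceQuota on compute resources and pod count."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu_requests,
                "requests.memory": memory_requests,
                "limits.cpu": cpu_limits,
                "limits.memory": memory_limits,
                "pods": str(max_pods),
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_quota("team-a"), indent=2))
```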

10.4 Spot instances

Spot VMs, the successor to preemptible VMs, are Compute Engine virtual machines offered at a steep discount compared to standard VMs. The trade-off is that Google Cloud can reclaim them at any time with only a short notice. GCP Kubernetes Engine lets you run node pools on Spot VMs, which suits fault-tolerant, stateless, or batch workloads that can survive interruption. Combining Spot VMs with autoscaling can reduce your infrastructure costs further.
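To steer a workload onto Spot VMs, GKE labels Spot nodes with `cloud.google.com/gke-spot: "true"`, and a pod can select that label. The Python sketch below builds such a pod spec. It adds a toleration that matters only if the Spot node pool was created with a matching taint, and it sets a short termination grace period. Spot VMs get only a brief shutdown notice, and the 15-second value is an assumption, not an official figure. The container list is left to the caller.

```python
def spot_pod_spec(containers, grace_seconds=15):
    """Pod spec that schedules onto GKE Spot VM nodes."""
    return {
        # GKE labels Spot VM nodes with cloud.google.com/gke-spot=true.
        "nodeSelector": {"cloud.google.com/gke-spot": "true"},
        # Needed only if the Spot node pool carries a matching NoSchedule taint.
        "tolerations": [{
            "key": "cloud.google.com/gke-spot",
            "operator": "Equal",
            "value": "true",
            "effect": "NoSchedule",
        }],
        # Assumed short grace period to fit within the preemption notice.
        "terminationGracePeriodSeconds": grace_seconds,
        "containers": containers,
    }
```

This spec fits best in a Deployment or Job template whose replicas can be recreated elsewhere when a Spot node is reclaimed.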

In conclusion, GCP Kubernetes Engine offers a comprehensive platform for managing containerized applications. From deployment to monitoring, security to cost optimization, GCP Kubernetes Engine provides a range of features and tools to simplify the management and operation of containerized applications. By leveraging the power of Kubernetes and GCP’s infrastructure, you can build and scale your applications with ease, ensuring high availability, security, and cost efficiency.