Are you overspending on your cloud bill? In this article, we share 10 GCP cost optimization strategies that can help you cut your cloud expenses. From right-sizing virtual machines to leveraging managed services, these strategies are aimed at lowering costs without compromising on performance or reliability. Read on for the key techniques for getting more out of your Google Cloud Platform budget.

1. Right-sizing Instances

When it comes to optimizing costs in the cloud, one of the first areas to look at is right-sizing instances. It’s important to analyze the usage of your instances to determine if they are appropriately sized for your workload. This involves evaluating factors such as CPU, memory, and disk utilization.

1.1 Analyzing Instance Usage

Before making any adjustments to your instances, it’s crucial to gather data on their usage. This can be done by utilizing monitoring tools provided by GCP or by using third-party solutions. By analyzing the instance usage data, you can gain insights into how the resources are being utilized and identify any potential areas for optimization.

1.2 Identifying Over-provisioned Instances

Over-provisioning instances is a common challenge in cloud environments, where resources are often allocated without adequate consideration of actual usage needs. By analyzing the instance usage data, you can identify instances that are consistently underutilized or have excess capacity. These over-provisioned instances can significantly impact your cloud costs.
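As a minimal sketch of this step, assume you have exported CPU utilization samples (as fractions between 0 and 1) for each instance from your monitoring tool. The Python below flags instances whose 95th-percentile utilization stays under a threshold. The instance names, the sample data, and the 0.30 threshold are hypothetical values chosen for illustration.

```python
import math


def percentile(samples, pct):
    """Return the pct-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def find_overprovisioned(cpu_by_instance, threshold=0.30, pct=95):
    """Return sorted names of instances whose pct-th percentile CPU is below threshold."""
    return sorted(
        name
        for name, samples in cpu_by_instance.items()
        if percentile(samples, pct) < threshold
    )


# Hypothetical utilization samples (fraction of CPU in use).
usage = {
    "web-1": [0.05, 0.08, 0.10, 0.12, 0.07],
    "batch-1": [0.60, 0.85, 0.90, 0.40, 0.75],
}
print(find_overprovisioned(usage))  # ['web-1']
```

Using a high percentile rather than the average keeps short bursts of load from being hidden by long idle periods.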

1.3 Downgrading or Resizing Instances

Once you have identified over-provisioned instances, it’s time to take action. Downgrading or resizing instances to match the actual workload can help reduce costs without compromising performance. By carefully analyzing the utilization metrics, you can accurately determine the optimal size for your instances and adjust accordingly.
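One way to turn utilization metrics into a target size is to pick the smallest machine shape that still covers peak usage plus some headroom. The sketch below does this against a made-up catalog; the size names, the vCPU and memory figures, and the 20% headroom are assumptions for illustration, not real GCP machine types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MachineSize:
    name: str
    vcpus: int
    memory_gb: float


# Hypothetical catalog, ordered from smallest to largest.
CATALOG = [
    MachineSize("small", 2, 8),
    MachineSize("medium", 4, 16),
    MachineSize("large", 8, 32),
]


def recommend_size(peak_vcpus, peak_memory_gb, headroom=0.2, catalog=CATALOG):
    """Return the smallest size covering peak usage plus headroom, or None."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gb * (1 + headroom)
    for size in catalog:
        if size.vcpus >= need_cpu and size.memory_gb >= need_mem:
            return size
    return None  # nothing in the catalog is large enough


print(recommend_size(peak_vcpus=1.5, peak_memory_gb=5.0))
```

Sizing against peak usage instead of the average is a deliberately conservative choice; a workload behind an autoscaler could reasonably use a lower percentile.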

1.4 Utilizing Preemptible VM Instances

Another cost-saving option to consider is utilizing preemptible VM instances. These instances offer a significant discount compared to regular instances, with the trade-off being that Compute Engine can stop them at any time with a 30-second warning and always stops them once they have run for 24 hours. They are therefore best suited to fault-tolerant and flexible workloads, such as batch jobs, that can handle interruptions. Spot VMs are the newer version of preemptible VMs; they follow the same pricing model but have no 24-hour limit. By incorporating preemptible or Spot VM instances into your architecture, you can further optimize your costs.
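To get a rough feel for the savings, you can estimate the blended hourly cost of a fleet in which only part of the capacity runs on discounted, interruptible VMs. The function below is a back-of-the-envelope sketch; the price and discount in the example are hypothetical, so substitute the figures from the current pricing page.

```python
def blended_hourly_cost(total_vms, preemptible_share, on_demand_price, discount):
    """Estimate hourly fleet cost when a share of the VMs runs as preemptible.

    preemptible_share and discount are fractions between 0 and 1.
    """
    if not 0 <= preemptible_share <= 1 or not 0 <= discount <= 1:
        raise ValueError("preemptible_share and discount must be between 0 and 1")
    preemptible_vms = total_vms * preemptible_share
    regular_vms = total_vms - preemptible_vms
    preemptible_price = on_demand_price * (1 - discount)
    return regular_vms * on_demand_price + preemptible_vms * preemptible_price


# Hypothetical fleet: 10 VMs at $0.10/hour, half of them at a 60% discount.
baseline = blended_hourly_cost(10, 0.0, 0.10, 0.60)
mixed = blended_hourly_cost(10, 0.5, 0.10, 0.60)
print(f"all on-demand: ${baseline:.2f}/h, mixed fleet: ${mixed:.2f}/h")
```

The estimate ignores the cost of work that has to be redone after an interruption, which matters for jobs without checkpointing.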

2. Resource Utilization Monitoring

Monitoring resource utilization is essential for effective cost optimization. By implementing monitoring tools and analyzing resource usage metrics, you can gain valuable insights into how your resources are being utilized and identify any underutilized resources.

2.1 Implementing Monitoring Tools

GCP offers various monitoring tools, such as Cloud Monitoring (formerly Stackdriver Monitoring), which provides real-time insights into the performance and health of your cloud resources. By implementing these tools, you can collect and analyze data on various metrics, such as CPU usage, network traffic, and disk usage. This data can then be used to identify areas where resources are either overutilized or underutilized.

2.2 Analyzing Resource Usage Metrics

Once you have implemented monitoring tools and collected data on resource usage, it’s time to analyze the metrics. Look for patterns and trends in the data to identify any instances or resources that are consistently underutilized. By understanding how your resources are being used, you can make informed decisions to optimize resource allocation and reduce costs.

2.3 Identifying Underutilized Resources

One of the key benefits of resource utilization monitoring is the ability to identify underutilized resources. These resources may be allocated but not actively used, resulting in wasted costs. By identifying these underutilized resources, you can take appropriate actions such as downsizing instances, rightsizing storage, or even decommissioning unnecessary resources altogether.

3. Autoscaling

Autoscaling is a powerful cost optimization strategy that allows you to automatically adjust the number of resources based on demand. By implementing autoscaling policies and utilizing managed instance groups, you can ensure that your resources are dynamically scaled up or down to match the workload.

3.1 Implementing Autoscaling Policies

To effectively implement autoscaling, you need to define policies that determine when and how resources should scale. This involves setting thresholds for metrics such as CPU utilization, network traffic, or request latency. When these thresholds are exceeded, autoscaling kicks in to add or remove resources automatically. By dynamically adjusting the resources based on real-time demand, you can optimize costs while maintaining performance.
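The core idea behind a target-utilization policy can be sketched in a few lines: resize the group so that average utilization moves back toward the target, then clamp the result to the configured minimum and maximum. This is a simplified illustration, not the actual Compute Engine autoscaler algorithm; the function name and parameters are hypothetical, and utilization is given in whole percent to keep the arithmetic exact.

```python
import math


def desired_instances(current, observed_util_pct, target_util_pct, min_size, max_size):
    """Return the group size that would bring average utilization near the target."""
    if target_util_pct <= 0:
        raise ValueError("target_util_pct must be positive")
    wanted = math.ceil(current * observed_util_pct / target_util_pct)
    # Never go below the floor or above the ceiling set in the policy.
    return max(min_size, min(max_size, wanted))


print(desired_instances(4, 90, 60, min_size=2, max_size=10))  # 6
print(desired_instances(4, 15, 60, min_size=2, max_size=10))  # 2
```

The minimum size protects availability during quiet periods, while the maximum size acts as a cost ceiling if a metric misbehaves.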

3.2 Scaling Based on Demand

One of the key benefits of autoscaling is the ability to scale resources based on demand. During periods of high traffic or workload intensity, resources can automatically scale up to handle the increased demand. Conversely, during periods of low demand, resources can be scaled down, ensuring that you only pay for what you actually use. This elasticity allows you to optimize costs without sacrificing performance.

3.3 Utilizing Managed Instance Groups

Managed instance groups are a valuable tool for implementing autoscaling. They manage a set of identical instances created from a single instance template, and a regional managed instance group can distribute those instances across multiple zones within a region. By utilizing managed instance groups, you can ensure that your autoscaling policies are applied consistently and efficiently. This helps to streamline the management of resources and further optimize costs.

4. Usage Scheduling

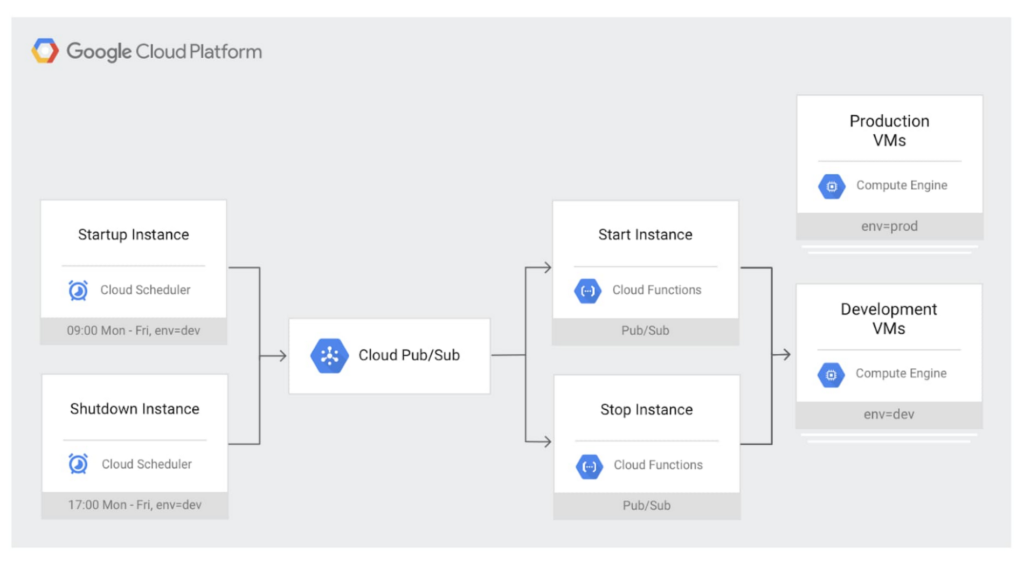

Utilizing usage scheduling strategies can help optimize costs by taking advantage of non-critical times when resources can be temporarily stopped or scaled down. By identifying these non-critical times and automating start/stop actions, you can effectively reduce costs without impacting performance.

4.1 Identifying Non-critical Times

The first step in usage scheduling is identifying non-critical times when resources can be temporarily stopped or scaled down. This involves understanding your workload patterns and identifying periods of low demand or when resources are not actively required. By analyzing historical usage data and considering factors such as user activity patterns, you can determine the optimal times for scheduling resource actions.
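As a rough sketch of this analysis, the function below takes 24 hourly request counts (for example, averaged over several weeks of historical data) and returns the hours whose traffic is at most a given fraction of the busiest hour. The sample numbers and the 10% cutoff are hypothetical.

```python
def quiet_hours(hourly_requests, fraction_of_peak=0.1):
    """Return the hours (0-23) with traffic at or below fraction_of_peak of the peak hour."""
    if len(hourly_requests) != 24:
        raise ValueError("expected 24 hourly values")
    cutoff = max(hourly_requests) * fraction_of_peak
    return [hour for hour, count in enumerate(hourly_requests) if count <= cutoff]


# Hypothetical profile: idle overnight, busy 07:00-18:59, light in the evening.
sample = [5] * 7 + [400] * 12 + [20] * 5
print(quiet_hours(sample))
```

Hours that show up as quiet on every weekday are the safest candidates for a stop schedule; hours that are quiet only on average deserve a closer look first.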

4.2 Automating Start/Stop

Once you have identified the non-critical times, the next step is to automate the start/stop actions of your resources. This can be achieved by leveraging scheduling tools provided by GCP or using custom scripts. By automating these actions, you can ensure that resources are only active when needed, reducing costs during idle periods.

4.3 Utilizing Scheduling Tools

GCP provides scheduling tools, such as Compute Engine instance schedules and Cloud Scheduler, which allow you to automate resource start/stop actions. Instance schedules start and stop VMs directly, while Cloud Scheduler can trigger jobs that perform those actions for you. Both let you define schedules with cron-style expressions, so you can start or stop resources at specific times or at recurring intervals. By utilizing these scheduling tools, you can simplify the management of your resources and optimize costs based on your specific requirements.
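Before setting up a schedule, it can help to estimate what it is worth. The sketch below compares an always-on VM with one that runs only during scheduled hours; the hourly price and the 12-hours-on-weekdays schedule are hypothetical.

```python
HOURS_PER_WEEK = 7 * 24


def weekly_compute_savings(hourly_price, hours_on_per_day, days_on_per_week):
    """Return (always_on_cost, scheduled_cost, fraction_saved) for one week."""
    hours_on = hours_on_per_day * days_on_per_week
    if not 0 <= hours_on <= HOURS_PER_WEEK:
        raise ValueError("schedule exceeds the hours available in a week")
    always_on = hourly_price * HOURS_PER_WEEK
    scheduled = hourly_price * hours_on
    return always_on, scheduled, 1 - hours_on / HOURS_PER_WEEK


always_on, scheduled, saved = weekly_compute_savings(1.0, 12, 5)
print(f"always on: {always_on:.2f}, scheduled: {scheduled:.2f}, saved: {saved:.0%}")
```

This covers compute charges only. A stopped VM still incurs charges for attached persistent disks and reserved static IP addresses, so the real saving is somewhat lower.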

5. Choosing the Right Storage Class

Choosing the right storage class for your data can have a significant impact on your cloud costs. Understanding the different GCP storage options, determining the optimal storage class, and transitioning data to lower-cost storage are all important steps in optimizing storage costs.

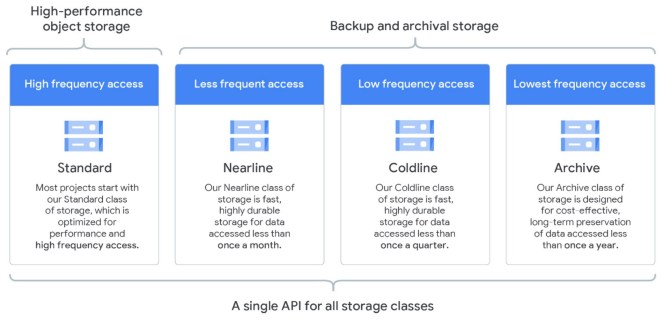

5.1 Understanding GCP Storage Options

GCP offers a range of storage options, each designed to meet specific requirements and cost considerations. These options include Standard Storage, Nearline Storage, Coldline Storage, and Archive Storage. By understanding the characteristics and pricing of each storage option, you can make informed decisions on which storage class to utilize for different types of data.

5.2 Determining Optimal Storage Class

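To determine the optimal storage class for your data, consider factors such as access frequency, how long the data will be kept, and cost sensitivity. For frequently accessed data, Standard Storage may be the most suitable option, while for infrequently accessed or long-term storage, Nearline Storage, Coldline Storage, or Archive Storage could be more cost-effective. Keep in mind that the colder classes charge retrieval fees and have minimum storage durations (30 days for Nearline, 90 days for Coldline, and 365 days for Archive), so data that is still read often or deleted early can end up costing more there than in Standard Storage. By matching the storage class to the usage patterns of your data, you can reduce costs while meeting your storage needs.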
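The trade-off between storage price and retrieval price can be made concrete with a small cost model. In the sketch below, the per-GB prices are hypothetical placeholders (real prices vary by location and change over time), and the model deliberately ignores operation charges and minimum storage durations.

```python
# Hypothetical per-GB prices: monthly storage and per-GB retrieval.
PRICES = {
    "STANDARD": {"storage": 0.020, "retrieval": 0.00},
    "NEARLINE": {"storage": 0.010, "retrieval": 0.01},
    "COLDLINE": {"storage": 0.004, "retrieval": 0.02},
    "ARCHIVE": {"storage": 0.0012, "retrieval": 0.05},
}


def monthly_cost(storage_class, stored_gb, retrieved_gb, prices=PRICES):
    """Estimate one month of storage plus retrieval cost for a storage class."""
    price = prices[storage_class]
    return stored_gb * price["storage"] + retrieved_gb * price["retrieval"]


def cheapest_class(stored_gb, retrieved_gb, prices=PRICES):
    """Return the storage class with the lowest estimated monthly cost."""
    return min(prices, key=lambda c: monthly_cost(c, stored_gb, retrieved_gb, prices))


for reads in (0, 100, 5000):
    print(f"1000 GB stored, {reads} GB read per month -> {cheapest_class(1000, reads)}")
```

With these placeholder prices, the cheapest class shifts from the coldest tier toward Standard as the monthly read volume grows, which is the pattern to look for when you plug in real numbers.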

5.3 Transitioning Data to Lower-cost Storage

As data ages or access frequencies change, it’s important to regularly reassess and transition data to lower-cost storage classes. GCP provides tools and features, such as lifecycle management policies, that automate the transition of data between storage classes based on predefined rules. By leveraging these features, you can optimize storage costs by keeping frequently accessed data in more expensive storage classes and moving less frequently accessed data to lower-cost options.
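Lifecycle rules are usually written as a small JSON document. The sketch below generates one from a list of age thresholds. The structure (a list of rules, each with an `action` and a `condition`) follows the shape used by Cloud Storage lifecycle configurations as far as we know it; the helper function and the 30/90/365-day thresholds are hypothetical, so check the output against the current documentation before applying it to a bucket.

```python
import json


def lifecycle_config(transitions, delete_after_days=None):
    """Build a lifecycle configuration from (age_in_days, storage_class) pairs."""
    rules = [
        {
            "action": {"type": "SetStorageClass", "storageClass": storage_class},
            "condition": {"age": age_days},
        }
        for age_days, storage_class in sorted(transitions)
    ]
    if delete_after_days is not None:
        # Optionally delete objects once they reach a final age.
        rules.append(
            {"action": {"type": "Delete"}, "condition": {"age": delete_after_days}}
        )
    return {"rule": rules}


config = lifecycle_config([(30, "NEARLINE"), (90, "COLDLINE"), (365, "ARCHIVE")])
print(json.dumps(config, indent=2))
```

Generating the file from code keeps the thresholds in one place and makes it easy to review changes before they reach production buckets.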

6. Optimizing Networking Costs

Network traffic and egress costs can be a significant portion of your cloud expenses. By analyzing network traffic, utilizing VPC peering, minimizing egress costs, and leveraging content delivery network (CDN) services, you can effectively optimize your networking costs.

6.1 Analyzing Network Traffic

Understanding your network traffic patterns is an important step in optimizing networking costs. By monitoring and analyzing network traffic data, you can gain insights into the volume and direction of data transfer. This can help you identify any high-traffic areas that may be contributing to increased costs and allow you to take appropriate actions to optimize network usage.

6.2 Utilizing VPC Peering

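VPC peering is a feature that allows you to connect VPC networks within the same project, between different projects, or even across organizations. By utilizing VPC peering, you can establish private connections between your resources using internal IP addresses, so traffic stays on Google’s network instead of traversing the public internet. This can help you avoid the charges that apply when services talk to each other over external IP addresses, and it can improve network performance. Note that traffic between peered networks is still billed like traffic within a single network, so cross-zone and cross-region charges continue to apply.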

6.3 Minimizing Egress Costs

Egress costs can be a significant expense. They are incurred when data leaves the Google Cloud network and also when data moves between regions or, at a lower rate, between zones in the same region. To minimize these costs, consider optimizing your data flows by keeping data transfers within the same region or zone whenever possible. By minimizing the distance data needs to travel and leveraging internal networking options, you can reduce egress costs and optimize your networking expenses.
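To see where transfer costs come from, it helps to group traffic by the boundary it crosses. The sketch below classifies transfer records and totals an estimated cost per category. The per-GB rates are hypothetical placeholders (real rates depend on regions, destination, and volume tier), and the record format is an assumption made for this example.

```python
from collections import defaultdict

# Hypothetical per-GB rates, for illustration only.
RATE_PER_GB = {
    "same_zone": 0.00,
    "cross_zone": 0.01,
    "cross_region": 0.02,
    "internet": 0.12,
}


def classify(src, dst):
    """Classify a transfer between (region, zone) tuples; dst=None means the internet."""
    if dst is None:
        return "internet"
    if src[0] != dst[0]:
        return "cross_region"
    return "same_zone" if src[1] == dst[1] else "cross_zone"


def egress_cost_by_category(transfers, rates=RATE_PER_GB):
    """Sum the estimated cost per category for (src, dst, gigabytes) records."""
    totals = defaultdict(float)
    for src, dst, gigabytes in transfers:
        category = classify(src, dst)
        totals[category] += gigabytes * rates[category]
    return dict(totals)


transfers = [
    (("us-central1", "a"), ("us-central1", "a"), 100),
    (("us-central1", "a"), ("us-central1", "b"), 100),
    (("us-central1", "a"), ("europe-west1", "b"), 50),
    (("us-central1", "a"), None, 10),
]
print(egress_cost_by_category(transfers))
```

A breakdown like this shows quickly whether the bill is driven by internet-facing traffic or by chatty services placed in different zones or regions.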

6.4 Leveraging CDN Services

Content Delivery Network (CDN) services, such as Google Cloud CDN, can help optimize networking costs by caching and delivering content closer to end-users. By leveraging CDN services, you can reduce the amount of data transferred over long distances, resulting in lower egress costs. Additionally, CDN services can improve the performance of content delivery, leading to a better user experience.

7. Database Optimization

Databases often play a critical role in cloud environments, and optimizing their usage can lead to significant cost savings. By right-sizing database instances, utilizing automatic storage increase, implementing read replicas, and choosing the right database engine, you can effectively optimize your database costs.

7.1 Right-sizing Database Instances

Similar to instance right-sizing, right-sizing database instances involves evaluating the resources allocated to your databases. By analyzing the usage patterns and performance metrics of your databases, you can determine if the current database instances are appropriately sized. Right-sizing can help eliminate excess capacity, reduce costs, and improve database performance.

7.2 Utilizing Automatic Storage Increase

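Automatic storage increase is a feature provided by some managed database services, such as Cloud SQL. This feature automatically increases the storage capacity of your databases as needed, eliminating the need for manual intervention. By utilizing automatic storage increase, you can start with a smaller disk and still ensure that your databases have sufficient storage capacity, avoiding the unnecessary costs associated with over-provisioning up front. Because Cloud SQL storage can be increased but not decreased, it is worth setting a limit on automatic growth so that a temporary spike does not leave you paying for a permanently larger disk.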

7.3 Implementing Read Replicas

Read replicas are copies of the primary database that are used to handle read traffic. By implementing read replicas, you can distribute read requests across multiple database instances, reducing the load on the primary database and improving overall performance. This can be particularly beneficial for applications with high read-to-write ratios. Each replica is billed as an additional instance, so the cost benefit comes mainly from avoiding a move to a larger, more expensive primary; compare both options before adding replicas.

7.4 Choosing the Right Database Engine

Choosing the right database engine is crucial in ensuring optimal performance and cost-effectiveness. GCP offers a range of database engines, each designed for specific use cases and cost considerations. Consider factors such as data consistency requirements, scalability, availability, and pricing when selecting the appropriate database engine. By choosing the right database engine for your workload, you can ensure that you are optimizing costs while meeting your application’s requirements.

8. Container Optimization

Containers have gained popularity for their portability and scalability. Optimizing containers can help reduce costs by right-sizing them, utilizing managed instance groups, and implementing Kubernetes horizontal pod autoscaling.

8.1 Right-sizing Containers

Similar to instance and database right-sizing, it’s important to evaluate the resource allocation of your containers and ensure they are appropriately sized. By analyzing the resource usage of containers, you can identify any over-provisioned or underutilized containers and adjust resource allocation accordingly. Right-sizing containers can help optimize costs and improve overall performance.

8.2 Utilizing Managed Instance Groups

Managed instance groups can be used to manage and scale the virtual machines that your containers run on, providing flexibility and efficiency. On Google Kubernetes Engine (GKE), for example, the nodes in a node pool are backed by managed instance groups. By utilizing managed instance groups, you can automatically manage the underlying instances, applying autoscaling policies and efficiently distributing resources. This can help optimize resource utilization and reduce costs.

8.3 Implementing Kubernetes Horizontal Pod Autoscaling

Kubernetes provides a powerful feature called horizontal pod autoscaling, which allows you to automatically scale the number of container replicas based on resource utilization. By implementing horizontal pod autoscaling, you can ensure that the number of replicas dynamically adjusts to match the workload, optimizing costs without compromising performance. This can be particularly beneficial for applications with fluctuating traffic or workload patterns.
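The scaling rule documented for the horizontal pod autoscaler is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a small tolerance of 1. The Python below is a simplified sketch of that rule for a single metric; the function name and defaults are hypothetical, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the basic HPA ratio rule and clamp to the replica bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target; do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Metric values are CPU in millicores per pod, with a 100m target.
print(hpa_desired_replicas(4, 200, 100))  # 8
print(hpa_desired_replicas(3, 50, 100))   # 2
print(hpa_desired_replicas(5, 105, 100))  # 5
```

From a cost perspective, `max_replicas` is the setting that bounds your spend, so it deserves the same attention as the target metric.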

9. Serverless Computing

Serverless computing offers a cost-effective and scalable approach for running applications without the need to manage infrastructure. By utilizing serverless options, scaling based on demand, and optimizing function execution time, you can effectively optimize costs.

9.1 Utilizing Serverless Options

GCP offers various serverless options, such as Cloud Functions and Cloud Run, which allow you to run applications without provisioning or managing underlying infrastructure. By utilizing serverless options, you can eliminate the costs associated with idle resources and pay only for the actual execution time of your functions or containers.

9.2 Scaling Based on Demand

One of the key advantages of serverless computing is the ability to scale based on demand. Serverless options automatically scale resources up or down to match the workload. By scaling resources dynamically, you can ensure that you are only paying for the resources you need, resulting in cost optimization.

9.3 Optimizing Function Execution Time

Serverless platforms typically charge based on the number of invocations and on execution time, weighted by the memory and CPU allocated to each function. Therefore, optimizing the execution time of your functions can help reduce costs. This can be achieved by optimizing code efficiency, reducing unnecessary dependencies or external calls, and leveraging caching mechanisms. By optimizing function execution time, you can minimize costs while maintaining the required functionality.
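A quick estimate shows how execution time feeds into the bill. The sketch below models cost as memory-weighted execution time (GB-seconds) plus a per-invocation charge. The unit prices in the example are hypothetical, and the model leaves out free tiers and separate CPU charges.

```python
def monthly_function_cost(invocations, avg_duration_ms, memory_gb,
                          price_per_gb_second, price_per_million_invocations):
    """Estimate monthly cost from invocation count, duration, and memory size."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * memory_gb
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = invocations / 1_000_000 * price_per_million_invocations
    return compute_cost + request_cost


# Hypothetical prices: $0.00001 per GB-second, $0.40 per million invocations.
before = monthly_function_cost(1_000_000, 200, 0.5, 0.00001, 0.40)
after = monthly_function_cost(1_000_000, 100, 0.5, 0.00001, 0.40)
print(f"200 ms: ${before:.2f}, 100 ms: ${after:.2f}")
```

Halving the duration halves only the compute portion; the per-invocation charge stays the same, so very short functions benefit less from further tuning.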

10. Billing and Cost Management

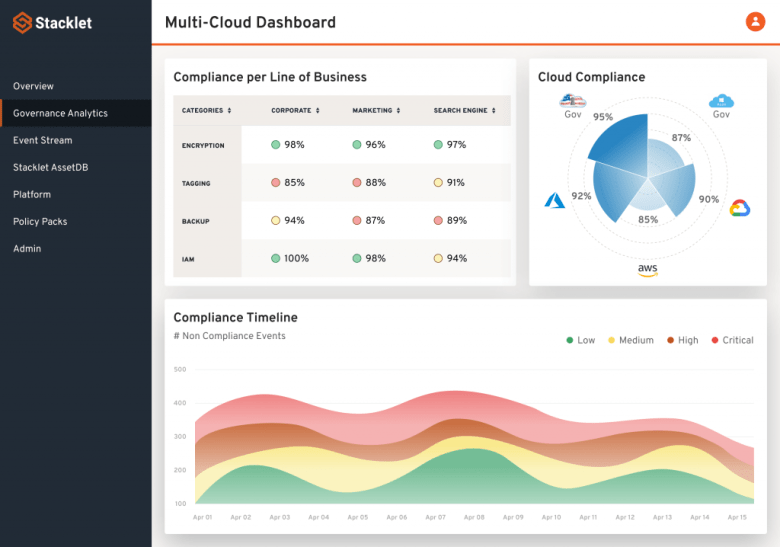

Proactively monitoring, analyzing, and controlling your cloud expenses are essential for effective cost management. By implementing monitoring and analytical tools, budgeting and forecasting, and enabling cost controls and alerts, you can gain better visibility into your costs and make informed decisions to optimize spending.

10.1 Monitoring and Analyzing Billing

Monitoring and analyzing your billing data is crucial to gain insights into your spending patterns and identify areas for optimization. GCP provides tools, such as Cloud Billing reports and Budgets and alerts, which allow you to monitor your spending and analyze it based on different dimensions such as projects, services, or regions. By regularly reviewing your billing data, you can identify any cost anomalies, track your cost-saving initiatives, and make informed decisions.

10.2 Budgeting and Forecasting

Setting budgets and forecasting your cloud costs can help you proactively manage your expenses. GCP provides budgeting tools that allow you to set a budget amount for a billing account or for specific projects or services, and to be notified as actual or forecasted spending approaches it. Keep in mind that a budget does not cap spending on its own: it sends alerts, and any automatic response has to be built on top of those notifications. The forecasts are based on historical data, helping you anticipate future costs and plan accordingly. By setting budgets and forecasting, you can avoid unexpected expenses and ensure better cost management.
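For a quick sanity check alongside the built-in tools, a naive run-rate forecast projects the month-end total from spending so far. This is a deliberately simple linear sketch, not the forecasting method GCP uses, and the function name is hypothetical.

```python
import calendar
from datetime import date


def forecast_month_end(spend_to_date, today):
    """Project month-end spend by extending the average daily rate so far."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    daily_rate = spend_to_date / today.day
    return daily_rate * days_in_month


print(forecast_month_end(300.0, date(2024, 4, 10)))  # 900.0
```

A linear projection overreacts early in the month and misses weekly cycles, so treat it as a rough signal rather than a prediction.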

10.3 Enabling Cost Controls and Alerts

Enabling cost controls and alerts is essential to ensure that you stay within your budget and avoid any surprise bills. GCP provides features such as budget alert notifications, which warn you when spending crosses a threshold; quotas and limits, which cap how much of a resource can be consumed; and Cloud Identity and Access Management (IAM) roles, which control who is allowed to create and modify resources. By leveraging these features, you can proactively manage costs, detect any cost deviations, and take appropriate actions to optimize spending.
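The logic behind threshold alerts is simple enough to sketch: compare spend with the budget and report each threshold the first time it is crossed. The function below illustrates that idea in plain Python; it does not call any GCP API, and the 50%, 90%, and 100% thresholds are example values.

```python
def crossed_thresholds(spend, budget, thresholds=(0.5, 0.9, 1.0), already_notified=()):
    """Return thresholds reached by the current spend that were not yet notified."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = spend / budget
    return [t for t in sorted(thresholds) if used >= t and t not in already_notified]


print(crossed_thresholds(950, 1000))                           # [0.5, 0.9]
print(crossed_thresholds(950, 1000, already_notified=(0.5,)))  # [0.9]
```

Tracking which thresholds have already fired keeps a notification handler from sending the same alert every time it runs.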

In conclusion, optimizing costs in Google Cloud Platform (GCP) requires a comprehensive approach that encompasses various aspects of resource allocation, usage scheduling, storage selection, network optimization, and database and container management. By implementing the cost optimization strategies outlined above, you can effectively reduce your cloud expenses while ensuring optimal performance and resource utilization. Regularly reviewing and analyzing your spending, setting budgets and alerts, and utilizing the cost management tools provided by GCP are key to maintaining ongoing cost optimization. Ultimately, by adopting these strategies and best practices, you can achieve significant cost savings and maximize the value of your cloud investments.